first commit

32

.gitignore

vendored

Normal file

@@ -0,0 +1,32 @@

|

|||||||

|

# .gitignore

|

||||||

|

# 首先忽略所有的文件

|

||||||

|

*

|

||||||

|

# 但是不忽略目录

|

||||||

|

!*/

|

||||||

|

# 忽略一些指定的目录名

|

||||||

|

ut/

|

||||||

|

runs/

|

||||||

|

.vscode/

|

||||||

|

build/

|

||||||

|

result/

|

||||||

|

*.pyc

|

||||||

|

pretrained_model/

|

||||||

|

# 不忽略下面指定的文件类型

|

||||||

|

!*.cpp

|

||||||

|

!*.h

|

||||||

|

!*.hpp

|

||||||

|

!*.c

|

||||||

|

!.gitignore

|

||||||

|

!*.py

|

||||||

|

!*.sh

|

||||||

|

!*.npy

|

||||||

|

!*.jpg

|

||||||

|

!*.pt

|

||||||

|

!*.npy

|

||||||

|

!*.pth

|

||||||

|

!*.png

|

||||||

|

!*.md

|

||||||

|

!*.txt

|

||||||

|

!*.yaml

|

||||||

|

!*.ttf

|

||||||

|

!*.cu

|

||||||

115

CONTRIBUTING.md

Normal file

@@ -0,0 +1,115 @@

|

|||||||

|

## Contributing to YOLOv8 🚀

|

||||||

|

|

||||||

|

We love your input! We want to make contributing to YOLOv8 as easy and transparent as possible, whether it's:

|

||||||

|

|

||||||

|

- Reporting a bug

|

||||||

|

- Discussing the current state of the code

|

||||||

|

- Submitting a fix

|

||||||

|

- Proposing a new feature

|

||||||

|

- Becoming a maintainer

|

||||||

|

|

||||||

|

YOLOv8 works so well due to our combined community effort, and for every small improvement you contribute you will be

|

||||||

|

helping push the frontiers of what's possible in AI 😃!

|

||||||

|

|

||||||

|

## Submitting a Pull Request (PR) 🛠️

|

||||||

|

|

||||||

|

Submitting a PR is easy! This example shows how to submit a PR for updating `requirements.txt` in 4 steps:

|

||||||

|

|

||||||

|

### 1. Select File to Update

|

||||||

|

|

||||||

|

Select `requirements.txt` to update by clicking on it in GitHub.

|

||||||

|

|

||||||

|

<p align="center"><img width="800" alt="PR_step1" src="https://user-images.githubusercontent.com/26833433/122260847-08be2600-ced4-11eb-828b-8287ace4136c.png"></p>

|

||||||

|

|

||||||

|

### 2. Click 'Edit this file'

|

||||||

|

|

||||||

|

Button is in top-right corner.

|

||||||

|

|

||||||

|

<p align="center"><img width="800" alt="PR_step2" src="https://user-images.githubusercontent.com/26833433/122260844-06f46280-ced4-11eb-9eec-b8a24be519ca.png"></p>

|

||||||

|

|

||||||

|

### 3. Make Changes

|

||||||

|

|

||||||

|

Change `matplotlib` version from `3.2.2` to `3.3`.

|

||||||

|

|

||||||

|

<p align="center"><img width="800" alt="PR_step3" src="https://user-images.githubusercontent.com/26833433/122260853-0a87e980-ced4-11eb-9fd2-3650fb6e0842.png"></p>

|

||||||

|

|

||||||

|

### 4. Preview Changes and Submit PR

|

||||||

|

|

||||||

|

Click on the **Preview changes** tab to verify your updates. At the bottom of the screen select 'Create a **new branch**

|

||||||

|

for this commit', assign your branch a descriptive name such as `fix/matplotlib_version` and click the green **Propose

|

||||||

|

changes** button. All done, your PR is now submitted to YOLOv8 for review and approval 😃!

|

||||||

|

|

||||||

|

<p align="center"><img width="800" alt="PR_step4" src="https://user-images.githubusercontent.com/26833433/122260856-0b208000-ced4-11eb-8e8e-77b6151cbcc3.png"></p>

|

||||||

|

|

||||||

|

### PR recommendations

|

||||||

|

|

||||||

|

To allow your work to be integrated as seamlessly as possible, we advise you to:

|

||||||

|

|

||||||

|

- ✅ Verify your PR is **up-to-date** with `ultralytics/ultralytics` `main` branch. If your PR is behind you can update

|

||||||

|

your code by clicking the 'Update branch' button or by running `git pull` and `git merge main` locally.

|

||||||

|

|

||||||

|

<p align="center"><img width="751" alt="Screenshot 2022-08-29 at 22 47 15" src="https://user-images.githubusercontent.com/26833433/187295893-50ed9f44-b2c9-4138-a614-de69bd1753d7.png"></p>

|

||||||

|

|

||||||

|

- ✅ Verify all YOLOv8 Continuous Integration (CI) **checks are passing**.

|

||||||

|

|

||||||

|

<p align="center"><img width="751" alt="Screenshot 2022-08-29 at 22 47 03" src="https://user-images.githubusercontent.com/26833433/187296922-545c5498-f64a-4d8c-8300-5fa764360da6.png"></p>

|

||||||

|

|

||||||

|

- ✅ Reduce changes to the absolute **minimum** required for your bug fix or feature addition. _"It is not daily increase

|

||||||

|

but daily decrease, hack away the unessential. The closer to the source, the less wastage there is."_ — Bruce Lee

|

||||||

|

|

||||||

|

### Docstrings

|

||||||

|

|

||||||

|

Not all functions or classes require docstrings but when they do, we

|

||||||

|

follow [google-style docstrings format](https://google.github.io/styleguide/pyguide.html#38-comments-and-docstrings).

|

||||||

|

Here is an example:

|

||||||

|

|

||||||

|

```python

|

||||||

|

"""

|

||||||

|

What the function does. Performs NMS on given detection predictions.

|

||||||

|

|

||||||

|

Args:

|

||||||

|

arg1: The description of the 1st argument

|

||||||

|

arg2: The description of the 2nd argument

|

||||||

|

|

||||||

|

Returns:

|

||||||

|

What the function returns. Empty if nothing is returned.

|

||||||

|

|

||||||

|

Raises:

|

||||||

|

Exception Class: When and why this exception can be raised by the function.

|

||||||

|

"""

|

||||||

|

```

|

||||||

|

|

||||||

|

## Submitting a Bug Report 🐛

|

||||||

|

|

||||||

|

If you spot a problem with YOLOv8 please submit a Bug Report!

|

||||||

|

|

||||||

|

For us to start investigating a possible problem we need to be able to reproduce it ourselves first. We've created a few

|

||||||

|

short guidelines below to help users provide what we need in order to get started.

|

||||||

|

|

||||||

|

When asking a question, people will be better able to provide help if you provide **code** that they can easily

|

||||||

|

understand and use to **reproduce** the problem. This is referred to by community members as creating

|

||||||

|

a [minimum reproducible example](https://stackoverflow.com/help/minimal-reproducible-example). Your code that reproduces

|

||||||

|

the problem should be:

|

||||||

|

|

||||||

|

- ✅ **Minimal** – Use as little code as possible that still produces the same problem

|

||||||

|

- ✅ **Complete** – Provide **all** parts someone else needs to reproduce your problem in the question itself

|

||||||

|

- ✅ **Reproducible** – Test the code you're about to provide to make sure it reproduces the problem

|

||||||

|

|

||||||

|

In addition to the above requirements, for [Ultralytics](https://ultralytics.com/) to provide assistance your code

|

||||||

|

should be:

|

||||||

|

|

||||||

|

- ✅ **Current** – Verify that your code is up-to-date with current

|

||||||

|

GitHub [main](https://github.com/ultralytics/ultralytics/tree/main) branch, and if necessary `git pull` or `git clone`

|

||||||

|

a new copy to ensure your problem has not already been resolved by previous commits.

|

||||||

|

- ✅ **Unmodified** – Your problem must be reproducible without any modifications to the codebase in this

|

||||||

|

repository. [Ultralytics](https://ultralytics.com/) does not provide support for custom code ⚠️.

|

||||||

|

|

||||||

|

If you believe your problem meets all of the above criteria, please close this issue and raise a new one using the 🐛

|

||||||

|

**Bug Report** [template](https://github.com/ultralytics/ultralytics/issues/new/choose) and providing

|

||||||

|

a [minimum reproducible example](https://stackoverflow.com/help/minimal-reproducible-example) to help us better

|

||||||

|

understand and diagnose your problem.

|

||||||

|

|

||||||

|

## License

|

||||||

|

|

||||||

|

By contributing, you agree that your contributions will be licensed under

|

||||||

|

the [GPL-3.0 license](https://choosealicense.com/licenses/gpl-3.0/)

|

||||||

49

README.md

Normal file

@@ -0,0 +1,49 @@

|

|||||||

|

## **yolov8车牌识别算法,支持12种中文车牌类型**

|

||||||

|

|

||||||

|

**环境要求: python >=3.6 pytorch >=1.7 pip install requirements.txt**

|

||||||

|

|

||||||

|

#### **图片测试demo:**

|

||||||

|

|

||||||

|

直接运行detect_plate.py 或者运行如下命令行:

|

||||||

|

|

||||||

|

```

|

||||||

|

python detect_rec_plate.py --detect_model weights/yolov8-lite-t-plate.pt --rec_model weights/plate_rec_color.pth --image_path imgs --output result

|

||||||

|

```

|

||||||

|

|

||||||

|

测试文件夹imgs,结果保存再 result 文件夹中

|

||||||

|

|

||||||

|

## **车牌检测训练**

|

||||||

|

|

||||||

|

车牌检测训练链接如下:

|

||||||

|

|

||||||

|

[车牌检测训练](https://github.com/we0091234/Chinese_license_plate_detection_recognition/tree/main/readme)

|

||||||

|

|

||||||

|

## **车牌识别训练**

|

||||||

|

|

||||||

|

车牌识别训练链接如下:

|

||||||

|

|

||||||

|

[车牌识别训练](https://github.com/we0091234/crnn_plate_recognition)

|

||||||

|

|

||||||

|

#### **支持如下:**

|

||||||

|

|

||||||

|

- [X] 1.单行蓝牌

|

||||||

|

- [X] 2.单行黄牌

|

||||||

|

- [X] 3.新能源车牌

|

||||||

|

- [X] 4.白色警用车牌

|

||||||

|

- [X] 5.教练车牌

|

||||||

|

- [X] 6.武警车牌

|

||||||

|

- [X] 7.双层黄牌

|

||||||

|

- [X] 8.双层白牌

|

||||||

|

- [X] 9.使馆车牌

|

||||||

|

- [X] 10.港澳粤Z牌

|

||||||

|

- [X] 11.双层绿牌

|

||||||

|

- [X] 12.民航车牌

|

||||||

|

|

||||||

|

## References

|

||||||

|

|

||||||

|

* [https://github.com/derronqi/yolov8-face](https://github.com/derronqi/yolov8-face)

|

||||||

|

* [https://github.com/ultralytics/ultralytics](https://github.com/ultralytics/ultralytics)

|

||||||

|

|

||||||

|

## 联系

|

||||||

|

|

||||||

|

**有问题可以提issues 或者加qq群:871797331 询问**

|

||||||

BIN

data/test.jpg

Normal file

|

After Width: | Height: | Size: 1.3 MiB |

42934

data/widerface/val/label.txt

Normal file

3226

data/widerface/val/wider_val.txt

Normal file

246

detect_rec_plate.py

Normal file

@@ -0,0 +1,246 @@

|

|||||||

|

import torch

|

||||||

|

import cv2

|

||||||

|

import numpy as np

|

||||||

|

import argparse

|

||||||

|

import copy

|

||||||

|

import time

|

||||||

|

import os

|

||||||

|

from ultralytics.nn.tasks import attempt_load_weights

|

||||||

|

from plate_recognition.plate_rec import get_plate_result,init_model,cv_imread

|

||||||

|

from plate_recognition.double_plate_split_merge import get_split_merge

|

||||||

|

from fonts.cv_puttext import cv2ImgAddText

|

||||||

|

|

||||||

|

def allFilePath(rootPath,allFIleList):# 读取文件夹内的文件,放到list

|

||||||

|

fileList = os.listdir(rootPath)

|

||||||

|

for temp in fileList:

|

||||||

|

if os.path.isfile(os.path.join(rootPath,temp)):

|

||||||

|

allFIleList.append(os.path.join(rootPath,temp))

|

||||||

|

else:

|

||||||

|

allFilePath(os.path.join(rootPath,temp),allFIleList)

|

||||||

|

|

||||||

|

def four_point_transform(image, pts): #透视变换得到车牌小图

|

||||||

|

# rect = order_points(pts)

|

||||||

|

rect = pts.astype('float32')

|

||||||

|

(tl, tr, br, bl) = rect

|

||||||

|

widthA = np.sqrt(((br[0] - bl[0]) ** 2) + ((br[1] - bl[1]) ** 2))

|

||||||

|

widthB = np.sqrt(((tr[0] - tl[0]) ** 2) + ((tr[1] - tl[1]) ** 2))

|

||||||

|

maxWidth = max(int(widthA), int(widthB))

|

||||||

|

heightA = np.sqrt(((tr[0] - br[0]) ** 2) + ((tr[1] - br[1]) ** 2))

|

||||||

|

heightB = np.sqrt(((tl[0] - bl[0]) ** 2) + ((tl[1] - bl[1]) ** 2))

|

||||||

|

maxHeight = max(int(heightA), int(heightB))

|

||||||

|

dst = np.array([

|

||||||

|

[0, 0],

|

||||||

|

[maxWidth - 1, 0],

|

||||||

|

[maxWidth - 1, maxHeight - 1],

|

||||||

|

[0, maxHeight - 1]], dtype = "float32")

|

||||||

|

M = cv2.getPerspectiveTransform(rect, dst)

|

||||||

|

warped = cv2.warpPerspective(image, M, (maxWidth, maxHeight))

|

||||||

|

return warped

|

||||||

|

|

||||||

|

|

||||||

|

def letter_box(img,size=(640,640)): #yolo 前处理 letter_box操作

|

||||||

|

h,w,_=img.shape

|

||||||

|

r=min(size[0]/h,size[1]/w)

|

||||||

|

new_h,new_w=int(h*r),int(w*r)

|

||||||

|

new_img = cv2.resize(img,(new_w,new_h))

|

||||||

|

left= int((size[1]-new_w)/2)

|

||||||

|

top=int((size[0]-new_h)/2)

|

||||||

|

right = size[1]-left-new_w

|

||||||

|

bottom=size[0]-top-new_h

|

||||||

|

img =cv2.copyMakeBorder(new_img,top,bottom,left,right,cv2.BORDER_CONSTANT,value=(114,114,114))

|

||||||

|

return img,r,left,top

|

||||||

|

|

||||||

|

def load_model(weights, device): #加载yolov8 模型

|

||||||

|

model = attempt_load_weights(weights,device=device) # load FP32 model

|

||||||

|

return model

|

||||||

|

|

||||||

|

def xywh2xyxy(det): #xywh转化为xyxy

|

||||||

|

y = det.clone()

|

||||||

|

y[:,0]=det[:,0]-det[0:,2]/2

|

||||||

|

y[:,1]=det[:,1]-det[0:,3]/2

|

||||||

|

y[:,2]=det[:,0]+det[0:,2]/2

|

||||||

|

y[:,3]=det[:,1]+det[0:,3]/2

|

||||||

|

return y

|

||||||

|

|

||||||

|

def my_nums(dets,iou_thresh): #nms操作

|

||||||

|

y = dets.clone()

|

||||||

|

y_box_score = y[:,:5]

|

||||||

|

index = torch.argsort(y_box_score[:,-1],descending=True)

|

||||||

|

keep = []

|

||||||

|

while index.size()[0]>0:

|

||||||

|

i = index[0].item()

|

||||||

|

keep.append(i)

|

||||||

|

x1=torch.maximum(y_box_score[i,0],y_box_score[index[1:],0])

|

||||||

|

y1=torch.maximum(y_box_score[i,1],y_box_score[index[1:],1])

|

||||||

|

x2=torch.minimum(y_box_score[i,2],y_box_score[index[1:],2])

|

||||||

|

y2=torch.minimum(y_box_score[i,3],y_box_score[index[1:],3])

|

||||||

|

zero_=torch.tensor(0).to(device)

|

||||||

|

w=torch.maximum(zero_,x2-x1)

|

||||||

|

h=torch.maximum(zero_,y2-y1)

|

||||||

|

inter_area = w*h

|

||||||

|

nuion_area1 =(y_box_score[i,2]-y_box_score[i,0])*(y_box_score[i,3]-y_box_score[i,1]) #计算交集

|

||||||

|

union_area2 =(y_box_score[index[1:],2]-y_box_score[index[1:],0])*(y_box_score[index[1:],3]-y_box_score[index[1:],1])#计算并集

|

||||||

|

|

||||||

|

iou = inter_area/(nuion_area1+union_area2-inter_area)#计算iou

|

||||||

|

|

||||||

|

idx = torch.where(iou<=iou_thresh)[0] #保留iou小于iou_thresh的

|

||||||

|

index=index[idx+1]

|

||||||

|

return keep

|

||||||

|

|

||||||

|

|

||||||

|

def restore_box(dets,r,left,top): #坐标还原到原图上

|

||||||

|

|

||||||

|

dets[:,[0,2,5,7,9,11]]=dets[:,[0,2,5,7,9,11]]-left

|

||||||

|

dets[:,[1,3,6,8,10,12]]= dets[:,[1,3,6,8,10,12]]-top

|

||||||

|

dets[:,:4]/=r

|

||||||

|

dets[:,5:13]/=r

|

||||||

|

|

||||||

|

return dets

|

||||||

|

# pass

|

||||||

|

|

||||||

|

def post_processing(prediction,conf,iou_thresh,r,left,top): #后处理

|

||||||

|

|

||||||

|

prediction = prediction.permute(0,2,1).squeeze(0)

|

||||||

|

xc = prediction[:, 4:6].amax(1) > conf #过滤掉小于conf的框

|

||||||

|

x = prediction[xc]

|

||||||

|

if not len(x):

|

||||||

|

return []

|

||||||

|

boxes = x[:,:4] #框

|

||||||

|

boxes = xywh2xyxy(boxes) #中心点 宽高 变为 左上 右下两个点

|

||||||

|

score,index = torch.max(x[:,4:6],dim=-1,keepdim=True) #找出得分和所属类别

|

||||||

|

x = torch.cat((boxes,score,x[:,6:14],index),dim=1) #重新组合

|

||||||

|

|

||||||

|

score = x[:,4]

|

||||||

|

keep =my_nums(x,iou_thresh)

|

||||||

|

x=x[keep]

|

||||||

|

x=restore_box(x,r,left,top)

|

||||||

|

return x

|

||||||

|

|

||||||

|

def pre_processing(img,opt,device): #前处理

|

||||||

|

img, r,left,top= letter_box(img,(opt.img_size,opt.img_size))

|

||||||

|

# print(img.shape)

|

||||||

|

img=img[:,:,::-1].transpose((2,0,1)).copy() #bgr2rgb hwc2chw

|

||||||

|

img = torch.from_numpy(img).to(device)

|

||||||

|

img = img.float()

|

||||||

|

img = img/255.0

|

||||||

|

img =img.unsqueeze(0)

|

||||||

|

return img ,r,left,top

|

||||||

|

|

||||||

|

def det_rec_plate(img,img_ori,detect_model,plate_rec_model):

|

||||||

|

result_list=[]

|

||||||

|

img,r,left,top = pre_processing(img,opt,device) #前处理

|

||||||

|

predict = detect_model(img)[0]

|

||||||

|

outputs=post_processing(predict,0.3,0.5,r,left,top) #后处理

|

||||||

|

for output in outputs:

|

||||||

|

result_dict={}

|

||||||

|

output = output.squeeze().cpu().numpy().tolist()

|

||||||

|

rect=output[:4]

|

||||||

|

rect = [int(x) for x in rect]

|

||||||

|

label = output[-1]

|

||||||

|

land_marks=np.array(output[5:13],dtype='int64').reshape(4,2)

|

||||||

|

roi_img = four_point_transform(img_ori,land_marks) #透视变换得到车牌小图

|

||||||

|

if int(label): #判断是否是双层车牌,是双牌的话进行分割后然后拼接

|

||||||

|

roi_img=get_split_merge(roi_img)

|

||||||

|

plate_number,rec_prob,plate_color,color_conf=get_plate_result(roi_img,device,plate_rec_model,is_color=True)

|

||||||

|

|

||||||

|

result_dict['plate_no']=plate_number #车牌号

|

||||||

|

result_dict['plate_color']=plate_color #车牌颜色

|

||||||

|

result_dict['rect']=rect #车牌roi区域

|

||||||

|

result_dict['detect_conf']=output[4] #检测区域得分

|

||||||

|

result_dict['landmarks']=land_marks.tolist() #车牌角点坐标

|

||||||

|

# result_dict['rec_conf']=rec_prob #每个字符的概率

|

||||||

|

result_dict['roi_height']=roi_img.shape[0] #车牌高度

|

||||||

|

# result_dict['plate_color']=plate_color

|

||||||

|

# if is_color:

|

||||||

|

result_dict['color_conf']=color_conf #颜色得分

|

||||||

|

result_dict['plate_type']=int(label) #单双层 0单层 1双层

|

||||||

|

result_list.append(result_dict)

|

||||||

|

return result_list

|

||||||

|

|

||||||

|

|

||||||

|

def draw_result(orgimg,dict_list,is_color=False): # 车牌结果画出来

|

||||||

|

result_str =""

|

||||||

|

for result in dict_list:

|

||||||

|

rect_area = result['rect']

|

||||||

|

|

||||||

|

x,y,w,h = rect_area[0],rect_area[1],rect_area[2]-rect_area[0],rect_area[3]-rect_area[1]

|

||||||

|

padding_w = 0.05*w

|

||||||

|

padding_h = 0.11*h

|

||||||

|

rect_area[0]=max(0,int(x-padding_w))

|

||||||

|

rect_area[1]=max(0,int(y-padding_h))

|

||||||

|

rect_area[2]=min(orgimg.shape[1],int(rect_area[2]+padding_w))

|

||||||

|

rect_area[3]=min(orgimg.shape[0],int(rect_area[3]+padding_h))

|

||||||

|

|

||||||

|

height_area = result['roi_height']

|

||||||

|

landmarks=result['landmarks']

|

||||||

|

result_p = result['plate_no']

|

||||||

|

if result['plate_type']==0:#单层

|

||||||

|

result_p+=" "+result['plate_color']

|

||||||

|

else: #双层

|

||||||

|

result_p+=" "+result['plate_color']+"双层"

|

||||||

|

result_str+=result_p+" "

|

||||||

|

for i in range(4): #关键点

|

||||||

|

cv2.circle(orgimg, (int(landmarks[i][0]), int(landmarks[i][1])), 5, clors[i], -1)

|

||||||

|

cv2.rectangle(orgimg,(rect_area[0],rect_area[1]),(rect_area[2],rect_area[3]),(0,0,255),2) #画框

|

||||||

|

|

||||||

|

labelSize = cv2.getTextSize(result_p,cv2.FONT_HERSHEY_SIMPLEX,0.5,1) #获得字体的大小

|

||||||

|

if rect_area[0]+labelSize[0][0]>orgimg.shape[1]: #防止显示的文字越界

|

||||||

|

rect_area[0]=int(orgimg.shape[1]-labelSize[0][0])

|

||||||

|

orgimg=cv2.rectangle(orgimg,(rect_area[0],int(rect_area[1]-round(1.6*labelSize[0][1]))),(int(rect_area[0]+round(1.2*labelSize[0][0])),rect_area[1]+labelSize[1]),(255,255,255),cv2.FILLED)#画文字框,背景白色

|

||||||

|

|

||||||

|

if len(result)>=6:

|

||||||

|

orgimg=cv2ImgAddText(orgimg,result_p,rect_area[0],int(rect_area[1]-round(1.6*labelSize[0][1])),(0,0,0),21)

|

||||||

|

# orgimg=cv2ImgAddText(orgimg,result_p,rect_area[0]-height_area,rect_area[1]-height_area-10,(0,255,0),height_area)

|

||||||

|

|

||||||

|

print(result_str)

|

||||||

|

return orgimg

|

||||||

|

|

||||||

|

|

||||||

|

if __name__ == "__main__":

|

||||||

|

parser = argparse.ArgumentParser()

|

||||||

|

parser.add_argument('--detect_model', nargs='+', type=str, default=r'weights/yolov8-lite-t-plate.pt', help='model.pt path(s)') #yolov8检测模型

|

||||||

|

parser.add_argument('--rec_model', type=str, default=r'weights/plate_rec_color.pth', help='model.pt path(s)')#车牌字符识别模型

|

||||||

|

parser.add_argument('--image_path', type=str, default=r'imgs', help='source') #待识别图片路径

|

||||||

|

parser.add_argument('--img_size', type=int, default=320, help='inference size (pixels)') #yolov8 网络模型输入大小

|

||||||

|

parser.add_argument('--output', type=str, default='result', help='source') #结果保存的文件夹

|

||||||

|

device =torch.device("cuda" if torch.cuda.is_available() else "cpu")

|

||||||

|

|

||||||

|

clors = [(255,0,0),(0,255,0),(0,0,255),(255,255,0),(0,255,255)]

|

||||||

|

opt = parser.parse_args()

|

||||||

|

save_path = opt.output

|

||||||

|

|

||||||

|

if not os.path.exists(save_path):

|

||||||

|

os.mkdir(save_path)

|

||||||

|

|

||||||

|

detect_model = load_model(opt.detect_model, device) #初始化yolov8识别模型

|

||||||

|

plate_rec_model=init_model(device,opt.rec_model,is_color=True) #初始化识别模型

|

||||||

|

#算参数量

|

||||||

|

total = sum(p.numel() for p in detect_model.parameters())

|

||||||

|

total_1 = sum(p.numel() for p in plate_rec_model.parameters())

|

||||||

|

print("yolov8 detect params: %.2fM,rec params: %.2fM" % (total/1e6,total_1/1e6))

|

||||||

|

|

||||||

|

detect_model.eval()

|

||||||

|

# print(detect_model)

|

||||||

|

file_list = []

|

||||||

|

allFilePath(opt.image_path,file_list)

|

||||||

|

count=0

|

||||||

|

time_all = 0

|

||||||

|

time_begin=time.time()

|

||||||

|

for pic_ in file_list:

|

||||||

|

print(count,pic_,end=" ")

|

||||||

|

time_b = time.time() #开始时间

|

||||||

|

img = cv2.imread(pic_)

|

||||||

|

img_ori = copy.deepcopy(img)

|

||||||

|

result_list=det_rec_plate(img,img_ori,detect_model,plate_rec_model)

|

||||||

|

time_e=time.time()

|

||||||

|

ori_img=draw_result(img,result_list) #将结果画在图上

|

||||||

|

img_name = os.path.basename(pic_)

|

||||||

|

save_img_path = os.path.join(save_path,img_name) #图片保存的路径

|

||||||

|

time_gap = time_e-time_b #计算单个图片识别耗时

|

||||||

|

if count:

|

||||||

|

time_all+=time_gap

|

||||||

|

count+=1

|

||||||

|

cv2.imwrite(save_img_path,ori_img) #op

|

||||||

|

# print(result_list)

|

||||||

|

print(f"sumTime time is {time.time()-time_begin} s, average pic time is {time_all/(len(file_list)-1)}")

|

||||||

|

|

||||||

85

docs/README.md

Normal file

@@ -0,0 +1,85 @@

|

|||||||

|

# Ultralytics Docs

|

||||||

|

|

||||||

|

Ultralytics Docs are deployed to [https://docs.ultralytics.com](https://docs.ultralytics.com).

|

||||||

|

|

||||||

|

### Install Ultralytics package

|

||||||

|

|

||||||

|

To install the ultralytics package in developer mode, you will need to have Git and Python 3 installed on your system.

|

||||||

|

Then, follow these steps:

|

||||||

|

|

||||||

|

1. Clone the ultralytics repository to your local machine using Git:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

git clone https://github.com/ultralytics/ultralytics.git

|

||||||

|

```

|

||||||

|

|

||||||

|

2. Navigate to the root directory of the repository:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

cd ultralytics

|

||||||

|

```

|

||||||

|

|

||||||

|

3. Install the package in developer mode using pip:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

pip install -e '.[dev]'

|

||||||

|

```

|

||||||

|

|

||||||

|

This will install the ultralytics package and its dependencies in developer mode, allowing you to make changes to the

|

||||||

|

package code and have them reflected immediately in your Python environment.

|

||||||

|

|

||||||

|

Note that you may need to use the pip3 command instead of pip if you have multiple versions of Python installed on your

|

||||||

|

system.

|

||||||

|

|

||||||

|

### Building and Serving Locally

|

||||||

|

|

||||||

|

The `mkdocs serve` command is used to build and serve a local version of the MkDocs documentation site. It is typically

|

||||||

|

used during the development and testing phase of a documentation project.

|

||||||

|

|

||||||

|

```bash

|

||||||

|

mkdocs serve

|

||||||

|

```

|

||||||

|

|

||||||

|

Here is a breakdown of what this command does:

|

||||||

|

|

||||||

|

- `mkdocs`: This is the command-line interface (CLI) for the MkDocs static site generator. It is used to build and serve

|

||||||

|

MkDocs sites.

|

||||||

|

- `serve`: This is a subcommand of the `mkdocs` CLI that tells it to build and serve the documentation site locally.

|

||||||

|

- `-a`: This flag specifies the hostname and port number to bind the server to. The default value is `localhost:8000`.

|

||||||

|

- `-t`: This flag specifies the theme to use for the documentation site. The default value is `mkdocs`.

|

||||||

|

- `-s`: This flag tells the `serve` command to serve the site in silent mode, which means it will not display any log

|

||||||

|

messages or progress updates.

|

||||||

|

When you run the `mkdocs serve` command, it will build the documentation site using the files in the `docs/` directory

|

||||||

|

and serve it at the specified hostname and port number. You can then view the site by going to the URL in your web

|

||||||

|

browser.

|

||||||

|

|

||||||

|

While the site is being served, you can make changes to the documentation files and see them reflected in the live site

|

||||||

|

immediately. This is useful for testing and debugging your documentation before deploying it to a live server.

|

||||||

|

|

||||||

|

To stop the serve command and terminate the local server, you can use the `CTRL+C` keyboard shortcut.

|

||||||

|

|

||||||

|

### Deploying Your Documentation Site

|

||||||

|

|

||||||

|

To deploy your MkDocs documentation site, you will need to choose a hosting provider and a deployment method. Some

|

||||||

|

popular options include GitHub Pages, GitLab Pages, and Amazon S3.

|

||||||

|

|

||||||

|

Before you can deploy your site, you will need to configure your `mkdocs.yml` file to specify the remote host and any

|

||||||

|

other necessary deployment settings.

|

||||||

|

|

||||||

|

Once you have configured your `mkdocs.yml` file, you can use the `mkdocs deploy` command to build and deploy your site.

|

||||||

|

This command will build the documentation site using the files in the `docs/` directory and the specified configuration

|

||||||

|

file and theme, and then deploy the site to the specified remote host.

|

||||||

|

|

||||||

|

For example, to deploy your site to GitHub Pages using the gh-deploy plugin, you can use the following command:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

mkdocs gh-deploy

|

||||||

|

```

|

||||||

|

|

||||||

|

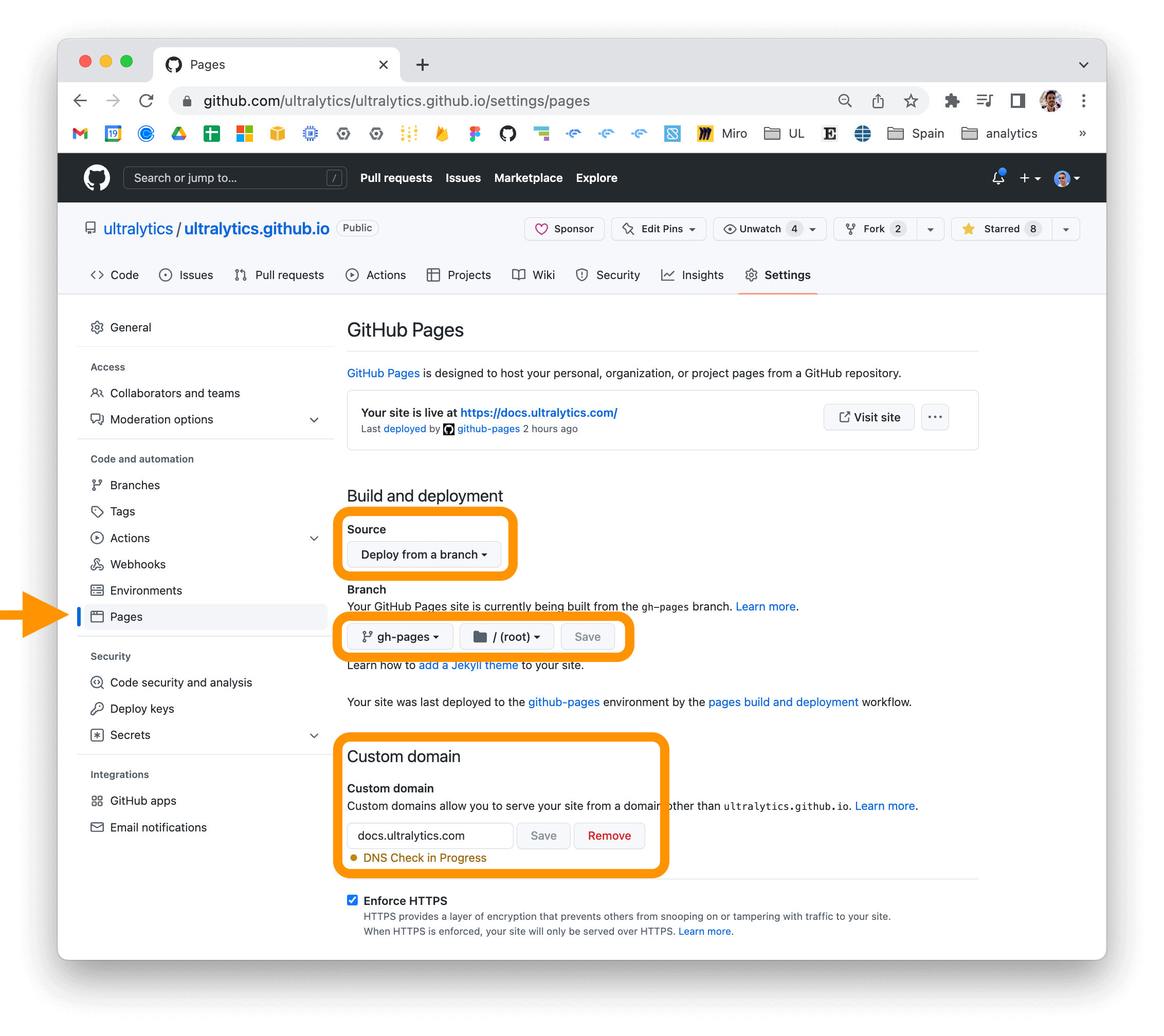

If you are using GitHub Pages, you can set a custom domain for your documentation site by going to the "Settings" page

|

||||||

|

for your repository and updating the "Custom domain" field in the "GitHub Pages" section.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

For more information on deploying your MkDocs documentation site, see

|

||||||

|

the [MkDocs documentation](https://www.mkdocs.org/user-guide/deploying-your-docs/).

|

||||||

26

docs/SECURITY.md

Normal file

@@ -0,0 +1,26 @@

|

|||||||

|

At [Ultralytics](https://ultralytics.com), the security of our users' data and systems is of utmost importance. To

|

||||||

|

ensure the safety and security of our [open-source projects](https://github.com/ultralytics), we have implemented

|

||||||

|

several measures to detect and prevent security vulnerabilities.

|

||||||

|

|

||||||

|

[](https://snyk.io/advisor/python/ultralytics)

|

||||||

|

|

||||||

|

## Snyk Scanning

|

||||||

|

|

||||||

|

We use [Snyk](https://snyk.io/advisor/python/ultralytics) to regularly scan the YOLOv8 repository for vulnerabilities

|

||||||

|

and security issues. Our goal is to identify and remediate any potential threats as soon as possible, to minimize any

|

||||||

|

risks to our users.

|

||||||

|

|

||||||

|

## GitHub CodeQL Scanning

|

||||||

|

|

||||||

|

In addition to our Snyk scans, we also use

|

||||||

|

GitHub's [CodeQL](https://docs.github.com/en/code-security/code-scanning/automatically-scanning-your-code-for-vulnerabilities-and-errors/about-code-scanning-with-codeql)

|

||||||

|

scans to proactively identify and address security vulnerabilities.

|

||||||

|

|

||||||

|

## Reporting Security Issues

|

||||||

|

|

||||||

|

If you suspect or discover a security vulnerability in the YOLOv8 repository, please let us know immediately. You can

|

||||||

|

reach out to us directly via our [contact form](https://ultralytics.com/contact) or

|

||||||

|

via [security@ultralytics.com](mailto:security@ultralytics.com). Our security team will investigate and respond as soon

|

||||||

|

as possible.

|

||||||

|

|

||||||

|

We appreciate your help in keeping the YOLOv8 repository secure and safe for everyone.

|

||||||

48

docs/app.md

Normal file

@@ -0,0 +1,48 @@

|

|||||||

|

# Ultralytics HUB App for YOLOv8

|

||||||

|

|

||||||

|

<a href="https://bit.ly/ultralytics_hub" target="_blank">

|

||||||

|

<img width="100%" src="https://github.com/ultralytics/assets/raw/main/im/ultralytics-hub.png"></a>

|

||||||

|

<br>

|

||||||

|

<div align="center">

|

||||||

|

<a href="https://github.com/ultralytics" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-github.png" width="2%" alt="" /></a>

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

||||||

|

<a href="https://www.linkedin.com/company/ultralytics" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-linkedin.png" width="2%" alt="" /></a>

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

||||||

|

<a href="https://twitter.com/ultralytics" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-twitter.png" width="2%" alt="" /></a>

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

||||||

|

<a href="https://youtube.com/ultralytics" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-youtube.png" width="2%" alt="" /></a>

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

||||||

|

<a href="https://www.tiktok.com/@ultralytics" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-tiktok.png" width="2%" alt="" /></a>

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

||||||

|

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="2%" alt="" /></a>

|

||||||

|

<br>

|

||||||

|

<br>

|

||||||

|

<a href="https://play.google.com/store/apps/details?id=com.ultralytics.ultralytics_app" style="text-decoration:none;">

|

||||||

|

<img src="https://raw.githubusercontent.com/ultralytics/assets/master/app/google-play.svg" width="15%" alt="" /></a>

|

||||||

|

<a href="https://apps.apple.com/xk/app/ultralytics/id1583935240" style="text-decoration:none;">

|

||||||

|

<img src="https://raw.githubusercontent.com/ultralytics/assets/master/app/app-store.svg" width="15%" alt="" /></a>

|

||||||

|

</div>

|

||||||

|

|

||||||

|

Welcome to the Ultralytics HUB app, which is designed to demonstrate the power and capabilities of the YOLOv5 and YOLOv8

|

||||||

|

models. This app is available for download on

|

||||||

|

the [Apple App Store](https://apps.apple.com/xk/app/ultralytics/id1583935240) and

|

||||||

|

the [Google Play Store](https://play.google.com/store/apps/details?id=com.ultralytics.ultralytics_app).

|

||||||

|

|

||||||

|

**To install the app, simply scan the QR code provided above**. At the moment, the app features YOLOv5 models, with

|

||||||

|

YOLOv8 models set to be available soon.

|

||||||

|

|

||||||

|

With the YOLOv5 model, you can easily detect and classify objects in images and videos with high accuracy and speed. The

|

||||||

|

model has been trained on a vast dataset and can recognize a wide range of objects, including pedestrians, traffic

|

||||||

|

signs, and cars.

|

||||||

|

|

||||||

|

Using this app, you can try out YOLOv5 on your images and videos, and observe how the model works in real-time.

|

||||||

|

Additionally, you can learn more about YOLOv5's functionality and how it can be integrated into real-world applications.

|

||||||

|

|

||||||

|

We are confident that you will enjoy using YOLOv5 and be amazed at its capabilities. Thank you for choosing Ultralytics

|

||||||

|

for your AI solutions.

|

||||||

112

docs/hub.md

Normal file

@@ -0,0 +1,112 @@

|

|||||||

|

# Ultralytics HUB

|

||||||

|

|

||||||

|

<a href="https://bit.ly/ultralytics_hub" target="_blank">

|

||||||

|

<img width="100%" src="https://github.com/ultralytics/assets/raw/main/im/ultralytics-hub.png"></a>

|

||||||

|

<br>

|

||||||

|

<div align="center">

|

||||||

|

<a href="https://github.com/ultralytics" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-github.png" width="2%" alt="" /></a>

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

||||||

|

<a href="https://www.linkedin.com/company/ultralytics" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-linkedin.png" width="2%" alt="" /></a>

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

||||||

|

<a href="https://twitter.com/ultralytics" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-twitter.png" width="2%" alt="" /></a>

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

||||||

|

<a href="https://youtube.com/ultralytics" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-youtube.png" width="2%" alt="" /></a>

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

||||||

|

<a href="https://www.tiktok.com/@ultralytics" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-tiktok.png" width="2%" alt="" /></a>

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%" alt="" />

|

||||||

|

<a href="https://www.instagram.com/ultralytics/" style="text-decoration:none;">

|

||||||

|

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="2%" alt="" /></a>

|

||||||

|

<br>

|

||||||

|

<br>

|

||||||

|

<a href="https://github.com/ultralytics/hub/actions/workflows/ci.yaml">

|

||||||

|

<img src="https://github.com/ultralytics/hub/actions/workflows/ci.yaml/badge.svg" alt="CI CPU"></a>

|

||||||

|

<a href="https://colab.research.google.com/github/ultralytics/hub/blob/master/hub.ipynb">

|

||||||

|

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a>

|

||||||

|

</div>

|

||||||

|

|

||||||

|

|

||||||

|

[Ultralytics HUB](https://hub.ultralytics.com) is a new no-code online tool developed

|

||||||

|

by [Ultralytics](https://ultralytics.com), the creators of the popular [YOLOv5](https://github.com/ultralytics/yolov5)

|

||||||

|

object detection and image segmentation models. With Ultralytics HUB, users can easily train and deploy YOLO models

|

||||||

|

without any coding or technical expertise.

|

||||||

|

|

||||||

|

Ultralytics HUB is designed to be user-friendly and intuitive, with a drag-and-drop interface that allows users to

|

||||||

|

easily upload their data and select their model configurations. It also offers a range of pre-trained models and

|

||||||

|

templates to choose from, making it easy for users to get started with training their own models. Once a model is

|

||||||

|

trained, it can be easily deployed and used for real-time object detection and image segmentation tasks. Overall,

|

||||||

|

Ultralytics HUB is an essential tool for anyone looking to use YOLO for their object detection and image segmentation

|

||||||

|

projects.

|

||||||

|

|

||||||

|

**[Get started now](https://hub.ultralytics.com)** and experience the power and simplicity of Ultralytics HUB for

|

||||||

|

yourself. Sign up for a free account and start building, training, and deploying YOLOv5 and YOLOv8 models today.

|

||||||

|

|

||||||

|

## 1. Upload a Dataset

|

||||||

|

|

||||||

|

Ultralytics HUB datasets are just like YOLOv5 🚀 datasets, they use the same structure and the same label formats to keep

|

||||||

|

everything simple.

|

||||||

|

|

||||||

|

When you upload a dataset to Ultralytics HUB, make sure to **place your dataset YAML inside the dataset root directory**

|

||||||

|

as in the example shown below, and then zip for upload to https://hub.ultralytics.com/. Your **dataset YAML, directory

|

||||||

|

and zip** should all share the same name. For example, if your dataset is called 'coco6' as in our

|

||||||

|

example [ultralytics/hub/coco6.zip](https://github.com/ultralytics/hub/blob/master/coco6.zip), then you should have a

|

||||||

|

coco6.yaml inside your coco6/ directory, which should zip to create coco6.zip for upload:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

zip -r coco6.zip coco6

|

||||||

|

```

|

||||||

|

|

||||||

|

The example [coco6.zip](https://github.com/ultralytics/hub/blob/master/coco6.zip) dataset in this repository can be

|

||||||

|

downloaded and unzipped to see exactly how to structure your custom dataset.

|

||||||

|

|

||||||

|

<p align="center">

|

||||||

|

<img width="80%" src="https://user-images.githubusercontent.com/26833433/201424843-20fa081b-ad4b-4d6c-a095-e810775908d8.png" title="COCO6" />

|

||||||

|

</p>

|

||||||

|

|

||||||

|

The dataset YAML is the same standard YOLOv5 YAML format. See

|

||||||

|

the [YOLOv5 Train Custom Data tutorial](https://github.com/ultralytics/yolov5/wiki/Train-Custom-Data) for full details.

|

||||||

|

|

||||||

|

```yaml

|

||||||

|

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

|

||||||

|

path: # dataset root dir (leave empty for HUB)

|

||||||

|

train: images/train # train images (relative to 'path') 8 images

|

||||||

|

val: images/val # val images (relative to 'path') 8 images

|

||||||

|

test: # test images (optional)

|

||||||

|

|

||||||

|

# Classes

|

||||||

|

names:

|

||||||

|

0: person

|

||||||

|

1: bicycle

|

||||||

|

2: car

|

||||||

|

3: motorcycle

|

||||||

|

...

|

||||||

|

```

|

||||||

|

|

||||||

|

After zipping your dataset, sign in to [Ultralytics HUB](https://bit.ly/ultralytics_hub) and click the Datasets tab.

|

||||||

|

Click 'Upload Dataset' to upload, scan and visualize your new dataset before training new YOLOv5 models on it!

|

||||||

|

|

||||||

|

<img width="100%" alt="HUB Dataset Upload" src="https://user-images.githubusercontent.com/26833433/216763338-9a8812c8-a4e5-4362-8102-40dad7818396.png">

|

||||||

|

|

||||||

|

## 2. Train a Model

|

||||||

|

|

||||||

|

Connect to the Ultralytics HUB notebook and use your model API key to begin training!

|

||||||

|

|

||||||

|

<a href="https://colab.research.google.com/github/ultralytics/hub/blob/master/hub.ipynb" target="_blank">

|

||||||

|

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a>

|

||||||

|

|

||||||

|

## 3. Deploy to Real World

|

||||||

|

|

||||||

|

Export your model to 13 different formats, including TensorFlow, ONNX, OpenVINO, CoreML, Paddle and many others. Run

|

||||||

|

models directly on your [iOS](https://apps.apple.com/xk/app/ultralytics/id1583935240) or

|

||||||

|

[Android](https://play.google.com/store/apps/details?id=com.ultralytics.ultralytics_app) mobile device by downloading

|

||||||

|

the [Ultralytics App](https://ultralytics.com/app_install)!

|

||||||

|

|

||||||

|

## ❓ Issues

|

||||||

|

|

||||||

|

If you are a new [Ultralytics HUB](https://bit.ly/ultralytics_hub) user and have questions or comments, you are in the

|

||||||

|

right place! Please raise a [New Issue](https://github.com/ultralytics/hub/issues/new/choose) and let us know what we

|

||||||

|

can do to make your life better 😃!

|

||||||

45

docs/index.md

Normal file

@@ -0,0 +1,45 @@

|

|||||||

|

<div align="center">

|

||||||

|

<p>

|

||||||

|

<a href="https://github.com/ultralytics/ultralytics" target="_blank">

|

||||||

|

<img width="1024" src="https://raw.githubusercontent.com/ultralytics/assets/main/yolov8/banner-yolov8.png"></a>

|

||||||

|

</p>

|

||||||

|

<a href="https://github.com/ultralytics/ultralytics/actions/workflows/ci.yaml"><img src="https://github.com/ultralytics/ultralytics/actions/workflows/ci.yaml/badge.svg" alt="Ultralytics CI"></a>

|

||||||

|

<a href="https://zenodo.org/badge/latestdoi/264818686"><img src="https://zenodo.org/badge/264818686.svg" alt="YOLOv8 Citation"></a>

|

||||||

|

<a href="https://hub.docker.com/r/ultralytics/ultralytics"><img src="https://img.shields.io/docker/pulls/ultralytics/ultralytics?logo=docker" alt="Docker Pulls"></a>

|

||||||

|

<br>

|

||||||

|

<a href="https://console.paperspace.com/github/ultralytics/ultralytics"><img src="https://assets.paperspace.io/img/gradient-badge.svg" alt="Run on Gradient"/></a>

|

||||||

|

<a href="https://colab.research.google.com/github/ultralytics/ultralytics/blob/main/examples/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a>

|

||||||

|

<a href="https://www.kaggle.com/ultralytics/yolov8"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>

|

||||||

|

</div>

|

||||||

|

|

||||||

|

Introducing [Ultralytics](https://ultralytics.com) [YOLOv8](https://github.com/ultralytics/ultralytics), the latest version of the acclaimed real-time object detection and image segmentation model. YOLOv8 is built on cutting-edge advancements in deep learning and computer vision, offering unparalleled performance in terms of speed and accuracy. Its streamlined design makes it suitable for various applications and easily adaptable to different hardware platforms, from edge devices to cloud APIs.

|

||||||

|

|

||||||

|

Explore the YOLOv8 Docs, a comprehensive resource designed to help you understand and utilize its features and capabilities. Whether you are a seasoned machine learning practitioner or new to the field, this hub aims to maximize YOLOv8's potential in your projects

|

||||||

|

|

||||||

|

## Where to Start

|

||||||

|

|

||||||

|

- **Install** `ultralytics` with pip and get up and running in minutes [:material-clock-fast: Get Started](quickstart.md){ .md-button }

|

||||||

|

- **Predict** new images and videos with YOLOv8 [:octicons-image-16: Predict on Images](modes/predict.md){ .md-button }

|

||||||

|

- **Train** a new YOLOv8 model on your own custom dataset [:fontawesome-solid-brain: Train a Model](modes/train.md){ .md-button }

|

||||||

|

- **Explore** YOLOv8 tasks like segment, classify, pose and track [:material-magnify-expand: Explore Tasks](tasks/index.md){ .md-button }

|

||||||

|

|

||||||

|

## YOLO: A Brief History

|

||||||

|

|

||||||

|

[YOLO](https://arxiv.org/abs/1506.02640) (You Only Look Once), a popular object detection and image segmentation model, was developed by Joseph Redmon and Ali Farhadi at the University of Washington. Launched in 2015, YOLO quickly gained popularity for its high speed and accuracy.

|

||||||

|

|

||||||

|

- [YOLOv2](https://arxiv.org/abs/1612.08242), released in 2016, improved the original model by incorporating batch normalization, anchor boxes, and dimension clusters.

|

||||||

|

- [YOLOv3](https://pjreddie.com/media/files/papers/YOLOv3.pdf), launched in 2018, further enhanced the model's performance using a more efficient backbone network, multiple anchors and spatial pyramid pooling.

|

||||||

|

- [YOLOv4](https://arxiv.org/abs/2004.10934) was released in 2020, introducing innovations like Mosaic data augmentation, a new anchor-free detection head, and a new loss function.

|

||||||

|

- [YOLOv5](https://github.com/ultralytics/yolov5) further improved the model's performance and added new features such as hyperparameter optimization, integrated experiment tracking and automatic export to popular export formats.

|

||||||

|

- [YOLOv6](https://github.com/meituan/YOLOv6) was open-sourced by Meituan in 2022 and is in use in many of the company's autonomous delivery robots.

|

||||||

|

- [YOLOv7](https://github.com/WongKinYiu/yolov7) added additional tasks such as pose estimation on the COCO keypoints dataset.

|

||||||

|

|

||||||

|

Since its launch YOLO has been employed in various applications, including autonomous vehicles, security and surveillance, and medical imaging, and has won several competitions like the COCO Object Detection Challenge and the DOTA Object Detection Challenge.

|

||||||

|

|

||||||

|

## Ultralytics YOLOv8

|

||||||

|

|

||||||

|

[Ultralytics YOLOv8](https://github.com/ultralytics/ultralytics) is the latest version of the YOLO object detection and image segmentation model. As a cutting-edge, state-of-the-art (SOTA) model, YOLOv8 builds on the success of previous versions, introducing new features and improvements for enhanced performance, flexibility, and efficiency.

|

||||||

|

|

||||||

|

YOLOv8 is designed with a strong focus on speed, size, and accuracy, making it a compelling choice for various vision AI tasks. It outperforms previous versions by incorporating innovations like a new backbone network, a new anchor-free split head, and new loss functions. These improvements enable YOLOv8 to deliver superior results, while maintaining a compact size and exceptional speed.

|

||||||

|

|

||||||

|

Additionally, YOLOv8 supports a full range of vision AI tasks, including [detection](tasks/detect.md), [segmentation](tasks/segment.md), [pose estimation](tasks/pose.md), [tracking](modes/track.md), and [classification](tasks/classify.md). This versatility allows users to leverage YOLOv8's capabilities across diverse applications and domains.

|

||||||

65

docs/modes/benchmark.md

Normal file

@@ -0,0 +1,65 @@

|

|||||||

|

<img width="1024" src="https://github.com/ultralytics/assets/raw/main/yolov8/banner-integrations.png">

|

||||||

|

|

||||||

|

**Benchmark mode** is used to profile the speed and accuracy of various export formats for YOLOv8. The benchmarks

|

||||||

|

provide information on the size of the exported format, its `mAP50-95` metrics (for object detection, segmentation and pose)

|

||||||

|

or `accuracy_top5` metrics (for classification), and the inference time in milliseconds per image across various export

|

||||||

|

formats like ONNX, OpenVINO, TensorRT and others. This information can help users choose the optimal export format for

|

||||||

|

their specific use case based on their requirements for speed and accuracy.

|

||||||

|

|

||||||

|

!!! tip "Tip"

|

||||||

|

|

||||||

|

* Export to ONNX or OpenVINO for up to 3x CPU speedup.

|

||||||

|

* Export to TensorRT for up to 5x GPU speedup.

|

||||||

|

|

||||||

|

## Usage Examples

|

||||||

|

|

||||||

|

Run YOLOv8n benchmarks on all supported export formats including ONNX, TensorRT etc. See Arguments section below for a

|

||||||

|

full list of export arguments.

|

||||||

|

|

||||||

|

!!! example ""

|

||||||

|

|

||||||

|

=== "Python"

|

||||||

|

|

||||||

|

```python

|

||||||

|

from ultralytics.yolo.utils.benchmarks import benchmark

|

||||||

|

|

||||||

|

# Benchmark

|

||||||

|

benchmark(model='yolov8n.pt', imgsz=640, half=False, device=0)

|

||||||

|

```

|

||||||

|

=== "CLI"

|

||||||

|

|

||||||

|

```bash

|

||||||

|

yolo benchmark model=yolov8n.pt imgsz=640 half=False device=0

|

||||||

|

```

|

||||||

|

|

||||||

|

## Arguments

|

||||||

|

|

||||||

|

Arguments such as `model`, `imgsz`, `half`, `device`, and `hard_fail` provide users with the flexibility to fine-tune

|

||||||

|

the benchmarks to their specific needs and compare the performance of different export formats with ease.

|

||||||

|

|

||||||

|

| Key | Value | Description |

|

||||||

|

|-------------|---------|----------------------------------------------------------------------|

|

||||||

|

| `model` | `None` | path to model file, i.e. yolov8n.pt, yolov8n.yaml |

|

||||||

|

| `imgsz` | `640` | image size as scalar or (h, w) list, i.e. (640, 480) |

|

||||||

|

| `half` | `False` | FP16 quantization |

|

||||||

|

| `device` | `None` | device to run on, i.e. cuda device=0 or device=0,1,2,3 or device=cpu |

|

||||||

|

| `hard_fail` | `False` | do not continue on error (bool), or val floor threshold (float) |

|

||||||

|

|

||||||

|

## Export Formats

|

||||||

|

|

||||||

|

Benchmarks will attempt to run automatically on all possible export formats below.

|

||||||

|

|

||||||

|

| Format | `format` Argument | Model | Metadata |

|

||||||

|

|--------------------------------------------------------------------|-------------------|---------------------------|----------|

|

||||||

|

| [PyTorch](https://pytorch.org/) | - | `yolov8n.pt` | ✅ |

|

||||||

|

| [TorchScript](https://pytorch.org/docs/stable/jit.html) | `torchscript` | `yolov8n.torchscript` | ✅ |

|

||||||

|

| [ONNX](https://onnx.ai/) | `onnx` | `yolov8n.onnx` | ✅ |

|

||||||

|

| [OpenVINO](https://docs.openvino.ai/latest/index.html) | `openvino` | `yolov8n_openvino_model/` | ✅ |

|

||||||

|

| [TensorRT](https://developer.nvidia.com/tensorrt) | `engine` | `yolov8n.engine` | ✅ |

|

||||||

|

| [CoreML](https://github.com/apple/coremltools) | `coreml` | `yolov8n.mlmodel` | ✅ |

|

||||||

|

| [TF SavedModel](https://www.tensorflow.org/guide/saved_model) | `saved_model` | `yolov8n_saved_model/` | ✅ |

|

||||||

|

| [TF GraphDef](https://www.tensorflow.org/api_docs/python/tf/Graph) | `pb` | `yolov8n.pb` | ❌ |

|

||||||

|

| [TF Lite](https://www.tensorflow.org/lite) | `tflite` | `yolov8n.tflite` | ✅ |

|

||||||

|

| [TF Edge TPU](https://coral.ai/docs/edgetpu/models-intro/) | `edgetpu` | `yolov8n_edgetpu.tflite` | ✅ |

|

||||||

|

| [TF.js](https://www.tensorflow.org/js) | `tfjs` | `yolov8n_web_model/` | ✅ |

|

||||||

|

| [PaddlePaddle](https://github.com/PaddlePaddle) | `paddle` | `yolov8n_paddle_model/` | ✅ |

|

||||||

81

docs/modes/export.md

Normal file

@@ -0,0 +1,81 @@

|

|||||||

|

<img width="1024" src="https://github.com/ultralytics/assets/raw/main/yolov8/banner-integrations.png">

|

||||||

|

|

||||||

|

**Export mode** is used for exporting a YOLOv8 model to a format that can be used for deployment. In this mode, the

|

||||||

|

model is converted to a format that can be used by other software applications or hardware devices. This mode is useful

|

||||||

|

when deploying the model to production environments.

|

||||||

|

|

||||||

|

!!! tip "Tip"

|

||||||

|

|

||||||

|

* Export to ONNX or OpenVINO for up to 3x CPU speedup.

|

||||||

|

* Export to TensorRT for up to 5x GPU speedup.

|

||||||

|

|

||||||

|

## Usage Examples

|

||||||

|

|

||||||

|

Export a YOLOv8n model to a different format like ONNX or TensorRT. See Arguments section below for a full list of

|

||||||

|

export arguments.

|

||||||

|

|

||||||

|

!!! example ""

|

||||||

|

|

||||||

|

=== "Python"

|

||||||

|

|

||||||

|

```python

|

||||||

|

from ultralytics import YOLO

|

||||||

|

|

||||||

|

# Load a model

|

||||||

|

model = YOLO('yolov8n.pt') # load an official model

|

||||||

|

model = YOLO('path/to/best.pt') # load a custom trained

|

||||||

|

|

||||||

|

# Export the model

|

||||||

|

model.export(format='onnx')

|

||||||

|

```

|

||||||

|

=== "CLI"

|

||||||

|

|

||||||

|

```bash

|

||||||

|

yolo export model=yolov8n.pt format=onnx # export official model

|

||||||

|

yolo export model=path/to/best.pt format=onnx # export custom trained model

|

||||||

|

```

|

||||||

|

|

||||||

|

## Arguments

|

||||||

|

|

||||||

|

Export settings for YOLO models refer to the various configurations and options used to save or

|

||||||

|

export the model for use in other environments or platforms. These settings can affect the model's performance, size,

|

||||||

|

and compatibility with different systems. Some common YOLO export settings include the format of the exported model

|

||||||

|

file (e.g. ONNX, TensorFlow SavedModel), the device on which the model will be run (e.g. CPU, GPU), and the presence of

|

||||||

|

additional features such as masks or multiple labels per box. Other factors that may affect the export process include

|

||||||

|

the specific task the model is being used for and the requirements or constraints of the target environment or platform.

|

||||||

|

It is important to carefully consider and configure these settings to ensure that the exported model is optimized for

|

||||||

|

the intended use case and can be used effectively in the target environment.

|

||||||

|

|

||||||

|

| Key | Value | Description |

|

||||||

|

|-------------|-----------------|------------------------------------------------------|

|

||||||

|

| `format` | `'torchscript'` | format to export to |

|

||||||

|

| `imgsz` | `640` | image size as scalar or (h, w) list, i.e. (640, 480) |

|

||||||

|

| `keras` | `False` | use Keras for TF SavedModel export |

|

||||||

|

| `optimize` | `False` | TorchScript: optimize for mobile |

|

||||||

|

| `half` | `False` | FP16 quantization |

|

||||||

|

| `int8` | `False` | INT8 quantization |

|

||||||

|

| `dynamic` | `False` | ONNX/TF/TensorRT: dynamic axes |

|

||||||

|

| `simplify` | `False` | ONNX: simplify model |

|

||||||

|

| `opset` | `None` | ONNX: opset version (optional, defaults to latest) |

|

||||||

|

| `workspace` | `4` | TensorRT: workspace size (GB) |

|

||||||

|

| `nms` | `False` | CoreML: add NMS |

|

||||||

|

|

||||||

|

## Export Formats

|

||||||

|

|

||||||

|

Available YOLOv8 export formats are in the table below. You can export to any format using the `format` argument,

|

||||||

|

i.e. `format='onnx'` or `format='engine'`.

|

||||||

|

|

||||||

|

| Format | `format` Argument | Model | Metadata |

|

||||||

|

|--------------------------------------------------------------------|-------------------|---------------------------|----------|

|

||||||

|

| [PyTorch](https://pytorch.org/) | - | `yolov8n.pt` | ✅ |

|

||||||

|

| [TorchScript](https://pytorch.org/docs/stable/jit.html) | `torchscript` | `yolov8n.torchscript` | ✅ |

|

||||||

|

| [ONNX](https://onnx.ai/) | `onnx` | `yolov8n.onnx` | ✅ |

|

||||||

|

| [OpenVINO](https://docs.openvino.ai/latest/index.html) | `openvino` | `yolov8n_openvino_model/` | ✅ |

|

||||||

|

| [TensorRT](https://developer.nvidia.com/tensorrt) | `engine` | `yolov8n.engine` | ✅ |

|

||||||

|

| [CoreML](https://github.com/apple/coremltools) | `coreml` | `yolov8n.mlmodel` | ✅ |

|

||||||

|

| [TF SavedModel](https://www.tensorflow.org/guide/saved_model) | `saved_model` | `yolov8n_saved_model/` | ✅ |

|

||||||

|

| [TF GraphDef](https://www.tensorflow.org/api_docs/python/tf/Graph) | `pb` | `yolov8n.pb` | ❌ |

|

||||||

|

| [TF Lite](https://www.tensorflow.org/lite) | `tflite` | `yolov8n.tflite` | ✅ |

|

||||||

|

| [TF Edge TPU](https://coral.ai/docs/edgetpu/models-intro/) | `edgetpu` | `yolov8n_edgetpu.tflite` | ✅ |

|

||||||

|

| [TF.js](https://www.tensorflow.org/js) | `tfjs` | `yolov8n_web_model/` | ✅ |

|

||||||

|

| [PaddlePaddle](https://github.com/PaddlePaddle) | `paddle` | `yolov8n_paddle_model/` | ✅ |

|

||||||

62

docs/modes/index.md

Normal file

@@ -0,0 +1,62 @@

|

|||||||

|

# Ultralytics YOLOv8 Modes

|

||||||

|

|

||||||

|

<img width="1024" src="https://github.com/ultralytics/assets/raw/main/yolov8/banner-integrations.png">

|

||||||

|

|

||||||

|

Ultralytics YOLOv8 supports several **modes** that can be used to perform different tasks. These modes are:

|

||||||

|

|

||||||

|

**Train**: For training a YOLOv8 model on a custom dataset.

|

||||||

|

**Val**: For validating a YOLOv8 model after it has been trained.

|

||||||

|

**Predict**: For making predictions using a trained YOLOv8 model on new images or videos.

|

||||||

|

**Export**: For exporting a YOLOv8 model to a format that can be used for deployment.

|

||||||

|

**Track**: For tracking objects in real-time using a YOLOv8 model.

|

||||||

|

**Benchmark**: For benchmarking YOLOv8 exports (ONNX, TensorRT, etc.) speed and accuracy.

|

||||||

|

|

||||||

|

## [Train](train.md)

|

||||||

|

|

||||||

|

Train mode is used for training a YOLOv8 model on a custom dataset. In this mode, the model is trained using the

|

||||||

|

specified dataset and hyperparameters. The training process involves optimizing the model's parameters so that it can

|

||||||

|

accurately predict the classes and locations of objects in an image.

|

||||||

|

|

||||||

|

[Train Examples](train.md){ .md-button .md-button--primary}

|

||||||

|

|

||||||

|

## [Val](val.md)

|

||||||

|

|

||||||

|

Val mode is used for validating a YOLOv8 model after it has been trained. In this mode, the model is evaluated on a

|

||||||

|

validation set to measure its accuracy and generalization performance. This mode can be used to tune the hyperparameters

|

||||||

|

of the model to improve its performance.

|

||||||

|

|

||||||

|

[Val Examples](val.md){ .md-button .md-button--primary}

|

||||||

|

|

||||||

|

## [Predict](predict.md)

|

||||||

|

|

||||||

|

Predict mode is used for making predictions using a trained YOLOv8 model on new images or videos. In this mode, the

|

||||||

|

model is loaded from a checkpoint file, and the user can provide images or videos to perform inference. The model

|

||||||

|