
+## 2. Train a Model

+

+Connect to the Ultralytics HUB notebook and use your model API key to begin training!


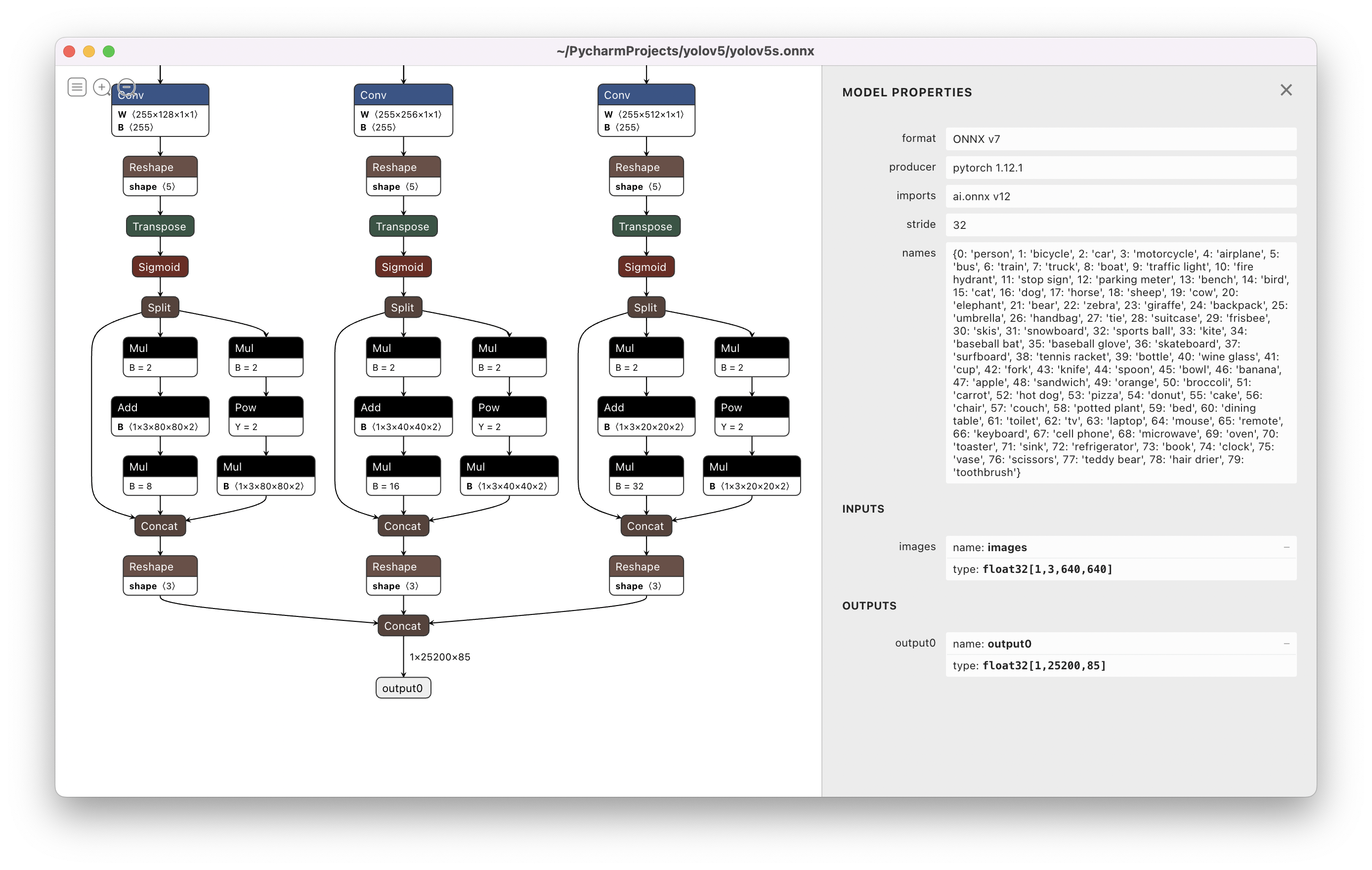

+**Benchmark mode** is used to profile the speed and accuracy of various export formats for YOLOv8. The benchmarks

+provide information on the size of the exported format, its `mAP50-95` metrics (for object detection, segmentation and pose)

+or `accuracy_top5` metrics (for classification), and the inference time in milliseconds per image across various export

+formats like ONNX, OpenVINO, TensorRT and others. This information can help users choose the optimal export format for

+their specific use case based on their requirements for speed and accuracy.
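
Here is a minimal sketch of the selection step, picking a format from benchmark output. It is a hypothetical helper, not part of the Ultralytics API. It assumes you have copied each benchmark row into a dict with `format`, `metric` (for example `mAP50-95`) and `ms_per_image` keys. It returns the fastest format that still meets an accuracy floor you choose.

```python
from typing import List, Optional


def pick_format(rows: List[dict], min_metric: float) -> Optional[str]:
    """Return the fastest export format whose accuracy metric meets min_metric.

    Each row is a hypothetical dict shaped like
    {'format': <name>, 'metric': <mAP50-95 or accuracy_top5>, 'ms_per_image': <latency>};
    a format whose benchmark failed can carry metric=None.
    """
    # Keep formats that ran successfully and are accurate enough
    candidates = [r for r in rows
                  if r.get('metric') is not None and r['metric'] >= min_metric]
    if not candidates:
        return None  # no format meets the accuracy floor
    # Among the remaining formats, lower inference time per image wins
    return min(candidates, key=lambda r: r['ms_per_image'])['format']
```

This applies the accuracy requirement first and then minimizes latency, which is the speed/accuracy trade-off described above. If disk footprint matters more, add a size field to each row and use it as the `min` key instead.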

+

+!!! tip "Tip"

+

+ * Export to ONNX or OpenVINO for up to 3x CPU speedup.

+ * Export to TensorRT for up to 5x GPU speedup.

+

+## Usage Examples

+

+Run YOLOv8n benchmarks on all supported export formats, including ONNX and TensorRT. See the Arguments section below for
+a full list of benchmark arguments.

+

+!!! example ""

+

+ === "Python"

+

+ ```python

+ from ultralytics.yolo.utils.benchmarks import benchmark

+

+ # Benchmark

+ benchmark(model='yolov8n.pt', imgsz=640, half=False, device=0)

+ ```

+ === "CLI"

+

+ ```bash

+ yolo benchmark model=yolov8n.pt imgsz=640 half=False device=0

+ ```

+

+## Arguments

+

+Arguments such as `model`, `imgsz`, `half`, `device`, and `hard_fail` provide users with the flexibility to fine-tune

+the benchmarks to their specific needs and compare the performance of different export formats with ease.

+

+| Key | Value | Description |

+|-------------|---------|----------------------------------------------------------------------|

+| `model` | `None` | path to model file, e.g. yolov8n.pt, yolov8n.yaml |
+| `imgsz` | `640` | image size as scalar or (h, w) list, e.g. (640, 480) |
+| `half` | `False` | FP16 quantization |
+| `device` | `None` | device to run on, e.g. cuda device=0 or device=0,1,2,3 or device=cpu |
+| `hard_fail` | `False` | stop on error (bool), or validation metric floor threshold (float) |
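
The sketch below shows one way to read the two forms of `hard_fail`. It restates the table row for illustration and is not the library's code. The function name `check_hard_fail` is hypothetical, and so are its inputs: one optional metric and one optional error per export format.

```python
from typing import List, Optional, Union


def check_hard_fail(hard_fail: Union[bool, float],
                    metrics: List[Optional[float]],
                    errors: List[Optional[Exception]]) -> None:
    """Hypothetical restatement of the two forms of `hard_fail`.

    metrics[i] / errors[i] describe one export format; None means
    "no metric" (the format failed) or "no error", respectively.
    """
    if isinstance(hard_fail, bool):
        # bool form: True means stop at the first recorded error
        if hard_fail:
            for err in errors:
                if err is not None:
                    raise RuntimeError(f'benchmark stopped: {err!r}') from err
        return  # False: record failures and keep going
    # float form: every available metric must stay above the floor
    floor = float(hard_fail)
    too_low = [m for m in metrics if m is not None and m <= floor]
    if too_low:
        raise AssertionError(f'HARD FAIL: metric(s) {too_low} not above floor {floor}')
```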

+

+## Export Formats

+

+Benchmarks will attempt to run automatically on all possible export formats below.

+

+| Format | `format` Argument | Model | Metadata |

+|--------------------------------------------------------------------|-------------------|---------------------------|----------|

+| [PyTorch](https://pytorch.org/) | - | `yolov8n.pt` | ✅ |

+| [TorchScript](https://pytorch.org/docs/stable/jit.html) | `torchscript` | `yolov8n.torchscript` | ✅ |

+| [ONNX](https://onnx.ai/) | `onnx` | `yolov8n.onnx` | ✅ |

+| [OpenVINO](https://docs.openvino.ai/latest/index.html) | `openvino` | `yolov8n_openvino_model/` | ✅ |

+| [TensorRT](https://developer.nvidia.com/tensorrt) | `engine` | `yolov8n.engine` | ✅ |

+| [CoreML](https://github.com/apple/coremltools) | `coreml` | `yolov8n.mlmodel` | ✅ |

+| [TF SavedModel](https://www.tensorflow.org/guide/saved_model) | `saved_model` | `yolov8n_saved_model/` | ✅ |

+| [TF GraphDef](https://www.tensorflow.org/api_docs/python/tf/Graph) | `pb` | `yolov8n.pb` | ❌ |

+| [TF Lite](https://www.tensorflow.org/lite) | `tflite` | `yolov8n.tflite` | ✅ |

+| [TF Edge TPU](https://coral.ai/docs/edgetpu/models-intro/) | `edgetpu` | `yolov8n_edgetpu.tflite` | ✅ |

+| [TF.js](https://www.tensorflow.org/js) | `tfjs` | `yolov8n_web_model/` | ✅ |

+| [PaddlePaddle](https://github.com/PaddlePaddle) | `paddle` | `yolov8n_paddle_model/` | ✅ |

diff --git a/docs/modes/export.md b/docs/modes/export.md

new file mode 100644

index 0000000..f454466

--- /dev/null

+++ b/docs/modes/export.md

@@ -0,0 +1,81 @@


+**Export mode** is used to export a YOLOv8 model to a format suitable for deployment. In this mode, the model is
+converted to a format that other software applications or hardware devices can use. This mode is useful when deploying
+the model to production environments.

+

+!!! tip "Tip"

+

+ * Export to ONNX or OpenVINO for up to 3x CPU speedup.

+ * Export to TensorRT for up to 5x GPU speedup.

+

+## Usage Examples

+

+Export a YOLOv8n model to a different format, such as ONNX or TensorRT. See the Arguments section below for a full list
+of export arguments.

+

+!!! example ""

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ # Load a model

+ model = YOLO('yolov8n.pt') # load an official model

+ model = YOLO('path/to/best.pt') # load a custom trained model

+

+ # Export the model

+ model.export(format='onnx')

+ ```

+ === "CLI"

+

+ ```bash

+ yolo export model=yolov8n.pt format=onnx # export official model

+ yolo export model=path/to/best.pt format=onnx # export custom trained model

+ ```

+

+## Arguments

+

+Export settings for YOLO models are the configurations and options used to save or export the model for use in other
+environments or platforms. These settings can affect the model's performance, size, and compatibility with different
+systems. Common settings include the format of the exported model file (e.g. ONNX, TensorFlow SavedModel), the numeric
+precision (e.g. FP16 with `half`, INT8 with `int8`), the device on which the model will run (e.g. CPU, GPU), and the
+presence of additional features such as masks or multiple labels per box. The task the model is used for and the
+requirements or constraints of the target environment or platform can also affect the export process. Configure these
+settings carefully so that the exported model is optimized for the intended use case and runs effectively in the target
+environment.

+

+| Key | Value | Description |

+|-------------|-----------------|------------------------------------------------------|

+| `format` | `'torchscript'` | format to export to |

+| `imgsz` | `640` | image size as scalar or (h, w) list, e.g. (640, 480) |

+| `keras` | `False` | use Keras for TF SavedModel export |

+| `optimize` | `False` | TorchScript: optimize for mobile |

+| `half` | `False` | FP16 quantization |

+| `int8` | `False` | INT8 quantization |

+| `dynamic` | `False` | ONNX/TF/TensorRT: dynamic axes |

+| `simplify` | `False` | ONNX: simplify model |

+| `opset` | `None` | ONNX: opset version (optional, defaults to latest) |

+| `workspace` | `4` | TensorRT: workspace size (GB) |

+| `nms` | `False` | CoreML: add NMS |
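
`imgsz` accepts either a scalar or an (h, w) pair, so a small normalizing step helps when scripting exports. The helper `to_hw` below is hypothetical and is not an Ultralytics function. It only illustrates the convention in the table: a scalar means a square image, and a pair means height, then width.

```python
from typing import Sequence, Tuple, Union


def to_hw(imgsz: Union[int, Sequence[int]]) -> Tuple[int, int]:
    """Expand an `imgsz` value into an (h, w) pair (hypothetical helper)."""
    if isinstance(imgsz, int):
        return imgsz, imgsz  # scalar: square image
    if len(imgsz) == 2:
        h, w = imgsz
        return int(h), int(w)  # pair: (height, width)
    raise ValueError(f'imgsz must be an int or an (h, w) pair, got {imgsz!r}')
```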

+

+## Export Formats

+

+Available YOLOv8 export formats are listed in the table below. You can export to any format using the `format` argument,
+e.g. `format='onnx'` or `format='engine'`.

+

+| Format | `format` Argument | Model | Metadata |

+|--------------------------------------------------------------------|-------------------|---------------------------|----------|

+| [PyTorch](https://pytorch.org/) | - | `yolov8n.pt` | ✅ |

+| [TorchScript](https://pytorch.org/docs/stable/jit.html) | `torchscript` | `yolov8n.torchscript` | ✅ |

+| [ONNX](https://onnx.ai/) | `onnx` | `yolov8n.onnx` | ✅ |

+| [OpenVINO](https://docs.openvino.ai/latest/index.html) | `openvino` | `yolov8n_openvino_model/` | ✅ |

+| [TensorRT](https://developer.nvidia.com/tensorrt) | `engine` | `yolov8n.engine` | ✅ |

+| [CoreML](https://github.com/apple/coremltools) | `coreml` | `yolov8n.mlmodel` | ✅ |

+| [TF SavedModel](https://www.tensorflow.org/guide/saved_model) | `saved_model` | `yolov8n_saved_model/` | ✅ |

+| [TF GraphDef](https://www.tensorflow.org/api_docs/python/tf/Graph) | `pb` | `yolov8n.pb` | ❌ |

+| [TF Lite](https://www.tensorflow.org/lite) | `tflite` | `yolov8n.tflite` | ✅ |

+| [TF Edge TPU](https://coral.ai/docs/edgetpu/models-intro/) | `edgetpu` | `yolov8n_edgetpu.tflite` | ✅ |

+| [TF.js](https://www.tensorflow.org/js) | `tfjs` | `yolov8n_web_model/` | ✅ |

+| [PaddlePaddle](https://github.com/PaddlePaddle) | `paddle` | `yolov8n_paddle_model/` | ✅ |
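
The naming pattern in the **Model** column can be captured in a lookup table, which helps you locate an artifact after an export. This sketch assumes the `yolov8n` names generalize to any weights file stem (for example, `best.pt` becoming `best.onnx`). `EXPORT_NAMES` and `expected_output` are hypothetical names. The sketch does not say which directory the files are written to.

```python
from pathlib import Path

# Output names from the table above, keyed by the `format` argument.
# '{stem}' stands for the weights file stem, e.g. 'yolov8n' (an assumption).
EXPORT_NAMES = {
    'torchscript': '{stem}.torchscript',
    'onnx': '{stem}.onnx',
    'openvino': '{stem}_openvino_model/',
    'engine': '{stem}.engine',
    'coreml': '{stem}.mlmodel',
    'saved_model': '{stem}_saved_model/',
    'pb': '{stem}.pb',
    'tflite': '{stem}.tflite',
    'edgetpu': '{stem}_edgetpu.tflite',
    'tfjs': '{stem}_web_model/',
    'paddle': '{stem}_paddle_model/',
}


def expected_output(weights: str, fmt: str) -> str:
    """Return the artifact name the table lists for `weights` exported as `fmt`."""
    stem = Path(weights).stem  # 'path/to/best.pt' -> 'best'
    try:
        return EXPORT_NAMES[fmt].format(stem=stem)
    except KeyError:
        raise ValueError(f'unknown format {fmt!r}; choose from {sorted(EXPORT_NAMES)}') from None
```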

diff --git a/docs/modes/index.md b/docs/modes/index.md

new file mode 100644

index 0000000..1ca2383

--- /dev/null

+++ b/docs/modes/index.md

@@ -0,0 +1,62 @@

+# Ultralytics YOLOv8 Modes


+


+Ultralytics YOLOv8 supports several **modes** that can be used to perform different tasks. These modes are:

+

+- **Train**: For training a YOLOv8 model on a custom dataset.
+- **Val**: For validating a YOLOv8 model after it has been trained.
+- **Predict**: For making predictions using a trained YOLOv8 model on new images or videos.
+- **Export**: For exporting a YOLOv8 model to a format that can be used for deployment.
+- **Track**: For tracking objects in real-time using a YOLOv8 model.
+- **Benchmark**: For benchmarking the speed and accuracy of YOLOv8 exports (ONNX, TensorRT, etc.).

+

+## [Train](train.md)

+

+Train mode is used for training a YOLOv8 model on a custom dataset. In this mode, the model is trained using the

+specified dataset and hyperparameters. The training process involves optimizing the model's parameters so that it can

+accurately predict the classes and locations of objects in an image.

+

+[Train Examples](train.md){ .md-button .md-button--primary}

+

+## [Val](val.md)

+

+Val mode is used for validating a YOLOv8 model after it has been trained. In this mode, the model is evaluated on a

+validation set to measure its accuracy and generalization performance. This mode can be used to tune the hyperparameters

+of the model to improve its performance.

+

+[Val Examples](val.md){ .md-button .md-button--primary}

+

+## [Predict](predict.md)

+

+Predict mode is used for making predictions using a trained YOLOv8 model on new images or videos. In this mode, the

+model is loaded from a checkpoint file, and the user can provide images or videos to perform inference. The model

+predicts the classes and locations of objects in the input images or videos.

+

+[Predict Examples](predict.md){ .md-button .md-button--primary}

+

+## [Export](export.md)

+

+Export mode is used for exporting a YOLOv8 model to a format that can be used for deployment. In this mode, the model is

+converted to a format that can be used by other software applications or hardware devices. This mode is useful when

+deploying the model to production environments.

+

+[Export Examples](export.md){ .md-button .md-button--primary}

+

+## [Track](track.md)

+

+Track mode is used for tracking objects in real-time using a YOLOv8 model. In this mode, the model is loaded from a

+checkpoint file, and the user can provide a live video stream to perform real-time object tracking. This mode is useful

+for applications such as surveillance systems or self-driving cars.

+

+[Track Examples](track.md){ .md-button .md-button--primary}

+

+## [Benchmark](benchmark.md)

+

+Benchmark mode is used to profile the speed and accuracy of various export formats for YOLOv8. The benchmarks provide

+information on the size of the exported format, its `mAP50-95` metrics (for object detection, segmentation and pose)

+or `accuracy_top5` metrics (for classification), and the inference time in milliseconds per image across various export

+formats like ONNX, OpenVINO, TensorRT and others. This information can help users choose the optimal export format for

+their specific use case based on their requirements for speed and accuracy.

+

+[Benchmark Examples](benchmark.md){ .md-button .md-button--primary}

diff --git a/docs/modes/predict.md b/docs/modes/predict.md

new file mode 100644

index 0000000..30f8743

--- /dev/null

+++ b/docs/modes/predict.md

@@ -0,0 +1,276 @@


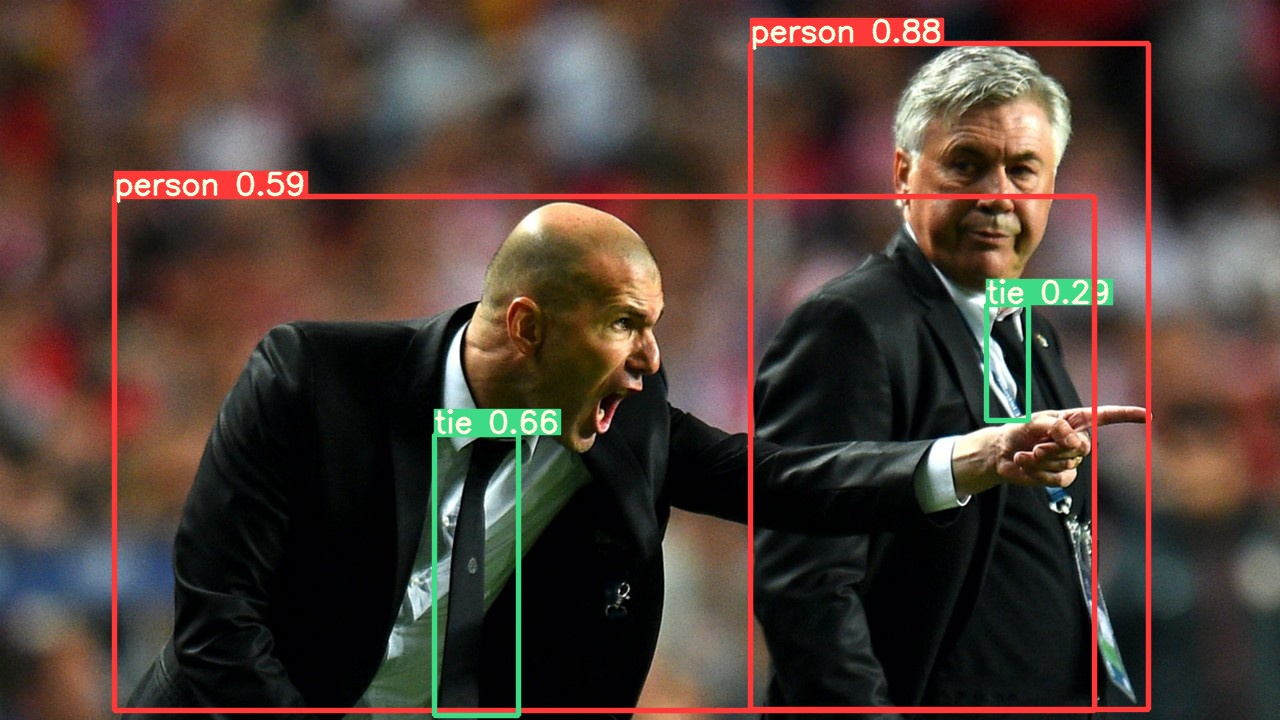

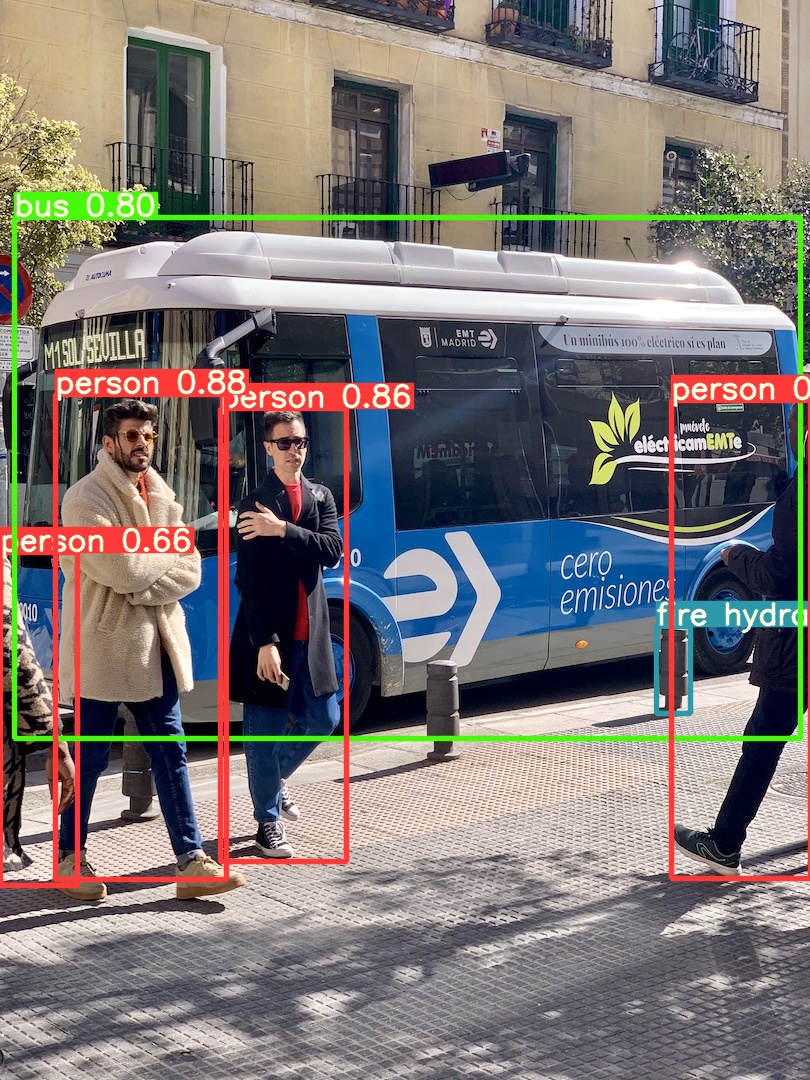

+YOLOv8 **predict mode** can generate predictions for various tasks, returning either a list of `Results` objects or a

+memory-efficient generator of `Results` objects when using the streaming mode. Enable streaming mode by

+passing `stream=True` in the predictor's call method.

+

+!!! example "Predict"

+

+ === "Return a list with `stream=False`"
+ ```python
+ from ultralytics import YOLO
+ import cv2
+
+ model = YOLO('yolov8n.pt')  # load a pretrained model
+ img = cv2.imread('path/to/image.jpg')  # numpy array, HWC
+ inputs = [img, img]  # list of numpy arrays
+ results = model(inputs)  # list of Results objects
+
+ for result in results:
+     boxes = result.boxes  # Boxes object for bbox outputs
+     masks = result.masks  # Masks object for segmentation masks outputs
+     probs = result.probs  # Class probabilities for classification outputs
+ ```

+

+ === "Return a generator with `stream=True`"
+ ```python
+ from ultralytics import YOLO
+ import cv2
+
+ model = YOLO('yolov8n.pt')  # load a pretrained model
+ img = cv2.imread('path/to/image.jpg')  # numpy array, HWC
+ inputs = [img, img]  # list of numpy arrays
+ results = model(inputs, stream=True)  # generator of Results objects
+
+ for result in results:
+     boxes = result.boxes  # Boxes object for bbox outputs
+     masks = result.masks  # Masks object for segmentation masks outputs
+     probs = result.probs  # Class probabilities for classification outputs
+ ```

+

+!!! tip "Tip"

+

+ Use streaming mode (`stream=True`) for long videos or large predict sources; otherwise, results accumulate in memory and eventually cause out-of-memory errors.
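
The difference between the two modes is the usual list-versus-generator trade-off in Python. The sketch below uses a hypothetical `fake_inference` stand-in instead of a real model, so it runs anywhere. Only the shape of the two functions matters.

```python
from typing import Dict, Iterable, Iterator, List


def fake_inference(frame: int) -> Dict[str, object]:
    """Hypothetical stand-in for running a model on one frame."""
    return {'frame': frame, 'boxes': []}


def predict_list(frames: Iterable[int]) -> List[Dict[str, object]]:
    # Like stream=False: every result is held in memory until the source is exhausted
    return [fake_inference(f) for f in frames]


def predict_stream(frames: Iterable[int]) -> Iterator[Dict[str, object]]:
    # Like stream=True: results are produced one at a time, on demand
    for f in frames:
        yield fake_inference(f)
```

With `predict_stream`, you can process each result inside a `for` loop and then discard it, so memory use stays flat however long the source is. `predict_list` keeps every result until the end.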

+

+## Sources

+

+YOLOv8 can accept various input sources, as shown in the table below. These include images, URLs, screenshots, PIL
+images, OpenCV images, numpy arrays, torch tensors, CSV files, videos, directories, globs, YouTube videos, and streams.
+The table gives an example argument for each source and marks with ✅ the sources that can be used in streaming mode
+with `stream=True`.

+

+| source | model(arg) | type | notes |

+|-------------|--------------------------------------------|----------------|------------------|

+| image | `'im.jpg'` | `str`, `Path` | |

+| URL | `'https://ultralytics.com/images/bus.jpg'` | `str` | |

+| screenshot | `'screen'` | `str` | |

+| PIL | `Image.open('im.jpg')` | `PIL.Image` | HWC, RGB |

+| OpenCV | `cv2.imread('im.jpg')[:,:,::-1]` | `np.ndarray` | HWC, BGR to RGB |

+| numpy | `np.zeros((640,1280,3))` | `np.ndarray` | HWC |

+| torch | `torch.zeros(16,3,320,640)` | `torch.Tensor` | BCHW, RGB |

+| CSV | `'sources.csv'` | `str`, `Path` | RTSP, RTMP, HTTP |

+| video ✅ | `'vid.mp4'` | `str`, `Path` | |

+| directory ✅ | `'path/'` | `str`, `Path` | |

+| glob ✅ | `'path/*.jpg'` | `str` | Use `*` operator |

+| YouTube ✅ | `'https://youtu.be/Zgi9g1ksQHc'` | `str` | |

+| stream ✅ | `'rtsp://example.com/media.mp4'` | `str` | RTSP, RTMP, HTTP |

+

+

+## Arguments

+`model.predict` accepts multiple arguments that control the prediction operation. These arguments can be passed directly to `model.predict`:
+
+!!! example
+ ```python
+ model.predict(source, save=True, imgsz=320, conf=0.5)
+ ```

+

+All supported arguments:

+

+| Key | Value | Description |

+|------------------|------------------------|----------------------------------------------------------|

+| `source` | `'ultralytics/assets'` | source directory for images or videos |

+| `conf` | `0.25` | object confidence threshold for detection |

+| `iou` | `0.7` | intersection over union (IoU) threshold for NMS |

+| `half` | `False` | use half precision (FP16) |

+| `device` | `None` | device to run on, e.g. cuda device=0/1/2/3 or device=cpu |

+| `show` | `False` | show results if possible |

+| `save` | `False` | save images with results |

+| `save_txt` | `False` | save results as .txt file |

+| `save_conf` | `False` | save results with confidence scores |

+| `save_crop` | `False` | save cropped images with results |

+| `hide_labels` | `False` | hide labels |

+| `hide_conf` | `False` | hide confidence scores |

+| `max_det` | `300` | maximum number of detections per image |

+| `vid_stride` | `1` | video frame-rate stride |

+| `line_thickness` | `3` | bounding box thickness (pixels) |

+| `visualize` | `False` | visualize model features |

+| `augment` | `False` | apply image augmentation to prediction sources |

+| `agnostic_nms` | `False` | class-agnostic NMS |

+| `retina_masks` | `False` | use high-resolution segmentation masks |

+| `classes` | `None` | filter results by class, e.g. classes=0, or classes=[0,2,3] |
+| `boxes` | `True` | show boxes in segmentation predictions |
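
The `conf`, `classes`, `iou`, `agnostic_nms` and `max_det` arguments work together when detections are filtered. The pure-Python sketch below shows one common way they interact: confidence and class filtering, then greedy non-maximum suppression, using the defaults from the table. It is a simplified illustration, not the library's vectorized implementation, and `box_iou` and `filter_detections` are hypothetical names.

```python
from typing import List, Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Det = Tuple[Box, float, int]  # (box, confidence score, class id)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two xyxy boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets: Sequence[Det], conf: float = 0.25, iou: float = 0.7,
                      classes: Optional[Sequence[int]] = None,
                      agnostic_nms: bool = False, max_det: int = 300) -> List[Det]:
    """Confidence/class filtering followed by greedy NMS (simplified sketch)."""
    # 1. Keep scores above `conf` and, if `classes` is given, only those classes
    cand = [d for d in dets if d[1] > conf and (classes is None or d[2] in classes)]
    cand.sort(key=lambda d: d[1], reverse=True)  # highest confidence first
    kept: List[Det] = []
    # 2. Greedy NMS: drop a box that overlaps an already-kept box by more than `iou`
    for box, score, cls in cand:
        suppressed = any(
            box_iou(box, kbox) > iou
            for kbox, _, kcls in kept
            if agnostic_nms or kcls == cls  # compare across classes only if agnostic
        )
        if not suppressed:
            kept.append((box, score, cls))
            if len(kept) == max_det:  # 3. cap the number of detections per image
                break
    return kept
```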

+

+## Image and Video Formats

+

+YOLOv8 supports various image and video formats, as specified

+in [yolo/data/utils.py](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/yolo/data/utils.py). See the

+tables below for the valid suffixes and example predict commands.

+

+### Image Suffixes

+

+| Image Suffixes | Example Predict Command | Reference |

+|----------------|----------------------------------|-------------------------------------------------------------------------------|

+| .bmp | `yolo predict source=image.bmp` | [Microsoft BMP File Format](https://en.wikipedia.org/wiki/BMP_file_format) |

+| .dng | `yolo predict source=image.dng` | [Adobe DNG](https://www.adobe.com/products/photoshop/extend.displayTab2.html) |

+| .jpeg | `yolo predict source=image.jpeg` | [JPEG](https://en.wikipedia.org/wiki/JPEG) |

+| .jpg | `yolo predict source=image.jpg` | [JPEG](https://en.wikipedia.org/wiki/JPEG) |

+| .mpo | `yolo predict source=image.mpo` | [Multi Picture Object](https://fileinfo.com/extension/mpo) |

+| .png | `yolo predict source=image.png` | [Portable Network Graphics](https://en.wikipedia.org/wiki/PNG) |

+| .tif | `yolo predict source=image.tif` | [Tag Image File Format](https://en.wikipedia.org/wiki/TIFF) |

+| .tiff | `yolo predict source=image.tiff` | [Tag Image File Format](https://en.wikipedia.org/wiki/TIFF) |

+| .webp | `yolo predict source=image.webp` | [WebP](https://en.wikipedia.org/wiki/WebP) |

+| .pfm | `yolo predict source=image.pfm` | [Portable FloatMap](https://en.wikipedia.org/wiki/Netpbm#File_formats) |

+

+### Video Suffixes

+

+| Video Suffixes | Example Predict Command | Reference |

+|----------------|----------------------------------|----------------------------------------------------------------------------------|

+| .asf | `yolo predict source=video.asf` | [Advanced Systems Format](https://en.wikipedia.org/wiki/Advanced_Systems_Format) |

+| .avi | `yolo predict source=video.avi` | [Audio Video Interleave](https://en.wikipedia.org/wiki/Audio_Video_Interleave) |

+| .gif | `yolo predict source=video.gif` | [Graphics Interchange Format](https://en.wikipedia.org/wiki/GIF) |

+| .m4v | `yolo predict source=video.m4v` | [MPEG-4 Part 14](https://en.wikipedia.org/wiki/M4V) |

+| .mkv | `yolo predict source=video.mkv` | [Matroska](https://en.wikipedia.org/wiki/Matroska) |

+| .mov | `yolo predict source=video.mov` | [QuickTime File Format](https://en.wikipedia.org/wiki/QuickTime_File_Format) |

+| .mp4 | `yolo predict source=video.mp4` | [MPEG-4 Part 14](https://en.wikipedia.org/wiki/MPEG-4_Part_14) |

+| .mpeg | `yolo predict source=video.mpeg` | [MPEG-1 Part 2](https://en.wikipedia.org/wiki/MPEG-1) |

+| .mpg | `yolo predict source=video.mpg` | [MPEG-1 Part 2](https://en.wikipedia.org/wiki/MPEG-1) |

+| .ts | `yolo predict source=video.ts` | [MPEG Transport Stream](https://en.wikipedia.org/wiki/MPEG_transport_stream) |

+| .wmv | `yolo predict source=video.wmv` | [Windows Media Video](https://en.wikipedia.org/wiki/Windows_Media_Video) |

+| .webm | `yolo predict source=video.webm` | [WebM Project](https://en.wikipedia.org/wiki/WebM) |
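
You can route a local file by suffix in the same way as the two tables above. In this sketch, both sets are copied from the tables, and matching suffixes case-insensitively is an assumption. The authoritative lists live in `yolo/data/utils.py`, as noted above. `source_kind` is a hypothetical name.

```python
from pathlib import Path

# Suffixes copied from the Image Suffixes and Video Suffixes tables
IMAGE_SUFFIXES = {'bmp', 'dng', 'jpeg', 'jpg', 'mpo', 'png', 'tif', 'tiff', 'webp', 'pfm'}
VIDEO_SUFFIXES = {'asf', 'avi', 'gif', 'm4v', 'mkv', 'mov', 'mp4', 'mpeg', 'mpg', 'ts', 'wmv', 'webm'}


def source_kind(path: str) -> str:
    """Classify a local file as 'image', 'video' or 'unsupported' by its suffix."""
    suffix = Path(path).suffix.lower().lstrip('.')  # case-insensitive (assumption)
    if suffix in IMAGE_SUFFIXES:
        return 'image'
    if suffix in VIDEO_SUFFIXES:
        return 'video'
    return 'unsupported'
```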

+

+## Working with Results

+

+The `Results` object contains the following components:

+

+- `Results.boxes`: `Boxes` object with properties and methods for manipulating bounding boxes

+- `Results.masks`: `Masks` object for indexing masks or getting segment coordinates

+- `Results.probs`: `torch.Tensor` containing class probabilities or logits

+- `Results.orig_img`: Original image loaded in memory

+- `Results.path`: `Path` containing the path to the input image

+

+By default, each `Results` object holds its data as `torch.Tensor` objects, which allows for easy manipulation:

+

+!!! example "Results"

+

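+ ```python
+ from ultralytics import YOLO
+ model = YOLO('yolov8n.pt')
+ results = model('https://ultralytics.com/images/bus.jpg')  # list of Results objects
+ result = results[0]  # Results object for the first image
+ result = result.cuda()  # move tensors to GPU (requires a CUDA device)
+ result = result.cpu()  # move tensors to CPU
+ result = result.to('cpu')  # move tensors to the given device
+ result = result.numpy()  # convert tensors to numpy arrays
+ ```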

+

+### Boxes

+

+`Boxes` object can be used to index, manipulate, and convert bounding boxes to different formats. Box format conversion

+operations are cached, meaning they're only calculated once per object, and those values are reused for future calls.

+

+- Indexing a `Boxes` object returns a `Boxes` object:

+

+!!! example "Boxes"

+

+ ```python

+ results = model(img)

+ boxes = results[0].boxes

+ box = boxes[0] # returns one box

+ box.xyxy

+ ```

+

+- Properties and conversions

+

+!!! example "Boxes Properties"

+

+ ```python

+ boxes.xyxy # box with xyxy format, (N, 4)

+ boxes.xywh # box with xywh format, (N, 4)

+ boxes.xyxyn # box with xyxy format but normalized, (N, 4)

+ boxes.xywhn # box with xywh format but normalized, (N, 4)

+ boxes.conf # confidence score, (N, )
+ boxes.cls # cls, (N, )

+ boxes.data # raw bboxes tensor, (N, 6) or boxes.boxes

+ ```

+

+### Masks

+

+The `Masks` object can be used to index, manipulate, and convert masks to segments. The segment conversion operation is cached.

+

+!!! example "Masks"

+

+ ```python

+ results = model(inputs)

+ masks = results[0].masks # Masks object

+ masks.xy # x, y segments (pixels), List[segment] * N

+ masks.xyn # x, y segments (normalized), List[segment] * N

+ masks.data # raw masks tensor, (N, H, W) or masks.masks

+ ```

+

+### probs

+

+The `probs` attribute of the `Results` class is a `Tensor` containing the class probabilities of a classification operation.

+

+!!! example "Probs"

+

+ ```python

+ results = model(inputs)

+ results[0].probs # cls prob, (num_class, )

+ ```

+

+Class reference documentation for the `Results` module and its components can be found [here](../reference/results.md).

+

+## Plotting results

+

+You can use the `plot()` method of a `Results` object to plot the results onto an image. It plots all components (boxes,
+masks, classification logits, etc.) found in the results object.

+

+!!! example "Plotting"

+

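 ```python
 import cv2
 from ultralytics import YOLO
+
 model = YOLO('yolov8n.pt')
 res = model('path/to/image.jpg')  # list of Results objects
 res_plotted = res[0].plot()  # annotated image as a numpy array
 cv2.imshow("result", res_plotted)
 cv2.waitKey(0)  # keep the window open until a key is pressed
 ```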

+The `plot()` method accepts the following arguments:
+
+| Argument | Description |

+| ----------- | ------------- |

+| `conf (bool)` | Whether to plot the detection confidence score. |

+| `line_width (float, optional)` | The line width of the bounding boxes. If None, it is scaled to the image size. |

+| `font_size (float, optional)` | The font size of the text. If None, it is scaled to the image size. |

+| `font (str)` | The font to use for the text. |

+| `pil (bool)` | Whether to return the image as a PIL Image. |

+| `example (str)` | An example string to display. Useful for indicating the expected format of the output. |

+| `img (numpy.ndarray)` | Image to plot on. If not given, the results are plotted on the original image. |

+| `labels (bool)` | Whether to plot the label of bounding boxes. |

+| `boxes (bool)` | Whether to plot the bounding boxes. |

+| `masks (bool)` | Whether to plot the masks. |

+| `probs (bool)` | Whether to plot classification probability. |

+

+

+## Streaming Source `for`-loop

+

+Here's a Python script using OpenCV (cv2) and YOLOv8 to run inference on video frames. This script assumes you have already installed the necessary packages (opencv-python and ultralytics).

+

+!!! example "Streaming for-loop"

+

 ```python
 import cv2
 from ultralytics import YOLO
+
 # Load the YOLOv8 model
 model = YOLO('yolov8n.pt')
+
 # Open the video file
 video_path = "path/to/your/video/file.mp4"
 cap = cv2.VideoCapture(video_path)
+
 # Loop through the video frames
 while cap.isOpened():
     # Read a frame from the video
     success, frame = cap.read()
+
     if success:
         # Run YOLOv8 inference on the frame
         results = model(frame)
+
         # Visualize the results on the frame
         annotated_frame = results[0].plot()
+
         # Display the annotated frame
         cv2.imshow("YOLOv8 Inference", annotated_frame)
+
         # Break the loop if 'q' is pressed
         if cv2.waitKey(1) & 0xFF == ord("q"):
             break
     else:
         # Break the loop if the end of the video is reached
         break
+
 # Release the video capture object and close the display window
 cap.release()
 cv2.destroyAllWindows()
 ```

\ No newline at end of file

diff --git a/docs/modes/track.md b/docs/modes/track.md

new file mode 100644

index 0000000..8058f38

--- /dev/null

+++ b/docs/modes/track.md

@@ -0,0 +1,96 @@


+ +

+Object tracking is a task that involves identifying the location and class of objects, then assigning a unique ID to
+each detection in video streams.

+

+The output of a tracker is the same as that of detection, with an added object ID.

+

+## Available Trackers

+

+The following tracking algorithms have been implemented and can be enabled by passing `tracker=tracker_type.yaml`:

+

+* [BoT-SORT](https://github.com/NirAharon/BoT-SORT) - `botsort.yaml`

+* [ByteTrack](https://github.com/ifzhang/ByteTrack) - `bytetrack.yaml`

+

+The default tracker is BoT-SORT.
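
To make ID assignment concrete, here is a deliberately simplified pure-Python sketch. It greedily matches each new detection to the previous frame's track with the highest IoU, and starts a new ID when no track overlaps enough. It is not BoT-SORT or ByteTrack, which add motion models, multi-stage matching and track buffers. `GreedyIoUTracker` and its `iou_threshold` parameter are hypothetical names used only for illustration.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # x1, y1, x2, y2 in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two xyxy boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class GreedyIoUTracker:
    """Hypothetical minimal tracker: one frame of memory, greedy IoU matching."""

    def __init__(self, iou_threshold: float = 0.3):
        self.iou_threshold = iou_threshold
        self.tracks: Dict[int, Box] = {}  # track ID -> last seen box
        self.next_id = 1

    def update(self, detections: List[Box]) -> List[Tuple[int, Box]]:
        assigned: Dict[int, Box] = {}
        for det in detections:
            # pick the not-yet-matched previous track that overlaps this detection most
            best_id, best_iou = None, self.iou_threshold
            for tid, prev in self.tracks.items():
                score = iou(det, prev)
                if tid not in assigned and score >= best_iou:
                    best_id, best_iou = tid, score
            if best_id is None:  # no good match: start a new track
                best_id = self.next_id
                self.next_id += 1
            assigned[best_id] = det
        self.tracks = assigned  # tracks not seen in this frame are dropped
        return list(assigned.items())


tracker = GreedyIoUTracker()
print(tracker.update([(0, 0, 10, 10), (50, 50, 60, 60)]))  # IDs 1 and 2
print(tracker.update([(52, 51, 62, 61), (1, 1, 11, 11)]))  # same objects keep IDs 2 and 1
```
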

+

+## Tracking

+

+Use a trained YOLOv8n/YOLOv8n-seg model to run the tracker on video streams.

+

+!!! example ""

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ # Load a model

+ model = YOLO('yolov8n.pt') # load an official detection model

+ model = YOLO('yolov8n-seg.pt') # load an official segmentation model

+ model = YOLO('path/to/best.pt') # load a custom model

+

+ # Track with the model

+ results = model.track(source="https://youtu.be/Zgi9g1ksQHc", show=True)

+ results = model.track(source="https://youtu.be/Zgi9g1ksQHc", show=True, tracker="bytetrack.yaml")

+ ```

+ === "CLI"

+

+ ```bash

+ yolo track model=yolov8n.pt source="https://youtu.be/Zgi9g1ksQHc" # official detection model

+ yolo track model=yolov8n-seg.pt source=... # official segmentation model

+ yolo track model=path/to/best.pt source=... # custom model

+ yolo track model=path/to/best.pt tracker="bytetrack.yaml" # bytetrack tracker

+

+ ```

+

+As shown above, both detection and segmentation models are supported for tracking; all you need to do is load the
+corresponding (detection or segmentation) model.

+

+## Configuration

+

+### Tracking

+

+Tracking shares its configuration with predict mode, e.g. `conf`, `iou` and `show`. For more configuration options, please
+refer to the [predict page](https://docs.ultralytics.com/modes/predict/).

+!!! example ""

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ model = YOLO('yolov8n.pt')

+ results = model.track(source="https://youtu.be/Zgi9g1ksQHc", conf=0.3, iou=0.5, show=True)

+ ```

+ === "CLI"

+

+ ```bash

 yolo track model=yolov8n.pt source="https://youtu.be/Zgi9g1ksQHc" conf=0.3 iou=0.5 show=True

+

+ ```

+

+### Tracker

+

+You can also use a modified tracker configuration file: copy one of the config files from
+[ultralytics/tracker/cfg](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/tracker/cfg) to a new file,
+e.g. `custom_tracker.yaml`, and modify any settings you need, except `tracker_type`.

+!!! example ""

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ model = YOLO('yolov8n.pt')

+ results = model.track(source="https://youtu.be/Zgi9g1ksQHc", tracker='custom_tracker.yaml')

+ ```

+ === "CLI"

+

+ ```bash

+ yolo track model=yolov8n.pt source="https://youtu.be/Zgi9g1ksQHc" tracker='custom_tracker.yaml'

+ ```

+

+For more details, please refer to the [ultralytics/tracker/cfg](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/tracker/cfg)
+page.

+

diff --git a/docs/modes/train.md b/docs/modes/train.md

new file mode 100644

index 0000000..a9275a0

--- /dev/null

+++ b/docs/modes/train.md

@@ -0,0 +1,99 @@


+ +

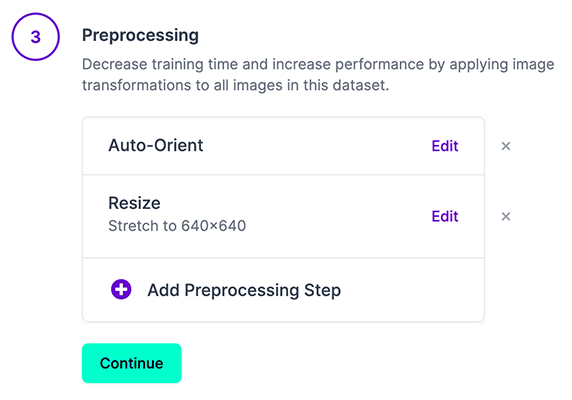

+**Train mode** is used for training a YOLOv8 model on a custom dataset. In this mode, the model is trained using the

+specified dataset and hyperparameters. The training process involves optimizing the model's parameters so that it can

+accurately predict the classes and locations of objects in an image.

+

+!!! tip "Tip"

+

 * YOLOv8 datasets like COCO, VOC, ImageNet and many others download automatically on first use, e.g. `yolo train data=coco.yaml`

+

+## Usage Examples

+

+Train YOLOv8n on the COCO128 dataset for 100 epochs at image size 640. See the Arguments section below for a full list of
+training arguments.

+

+!!! example ""

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ # Load a model

+ model = YOLO('yolov8n.yaml') # build a new model from YAML

+ model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO('yolov8n.yaml').load('yolov8n.pt') # build from YAML and transfer weights

+

+ # Train the model

+ model.train(data='coco128.yaml', epochs=100, imgsz=640)

+ ```

+ === "CLI"

+

+ ```bash

+ # Build a new model from YAML and start training from scratch

+ yolo detect train data=coco128.yaml model=yolov8n.yaml epochs=100 imgsz=640

+

+ # Start training from a pretrained *.pt model

+ yolo detect train data=coco128.yaml model=yolov8n.pt epochs=100 imgsz=640

+

+ # Build a new model from YAML, transfer pretrained weights to it and start training

+ yolo detect train data=coco128.yaml model=yolov8n.yaml pretrained=yolov8n.pt epochs=100 imgsz=640

+ ```

+

+## Arguments

+

+Training settings for YOLO models refer to the various hyperparameters and configurations used to train the model on a

+dataset. These settings can affect the model's performance, speed, and accuracy. Some common YOLO training settings

+include the batch size, learning rate, momentum, and weight decay. Other factors that may affect the training process

+include the choice of optimizer, the choice of loss function, and the size and composition of the training dataset. It

+is important to carefully tune and experiment with these settings to achieve the best possible performance for a given

+task.

+

+| Key | Value | Description |

+|-------------------|----------|-----------------------------------------------------------------------------|

+| `model` | `None` | path to model file, e.g. yolov8n.pt, yolov8n.yaml |
+| `data` | `None` | path to data file, e.g. coco128.yaml |

+| `epochs` | `100` | number of epochs to train for |

+| `patience` | `50` | epochs to wait for no observable improvement for early stopping of training |

+| `batch` | `16` | number of images per batch (-1 for AutoBatch) |

+| `imgsz` | `640` | size of input images as integer or w,h |

+| `save` | `True` | save train checkpoints and predict results |

+| `save_period` | `-1` | Save checkpoint every x epochs (disabled if < 1) |

+| `cache` | `False` | True/ram, disk or False. Use cache for data loading |

+| `device` | `None` | device to run on, e.g. cuda device=0 or device=0,1,2,3 or device=cpu |

+| `workers` | `8` | number of worker threads for data loading (per RANK if DDP) |

+| `project` | `None` | project name |

+| `name` | `None` | experiment name |

+| `exist_ok` | `False` | whether to overwrite existing experiment |

+| `pretrained` | `False` | whether to use a pretrained model |

+| `optimizer` | `'SGD'` | optimizer to use, choices=['SGD', 'Adam', 'AdamW', 'RMSProp'] |

+| `verbose` | `False` | whether to print verbose output |

+| `seed` | `0` | random seed for reproducibility |

+| `deterministic` | `True` | whether to enable deterministic mode |

+| `single_cls` | `False` | train multi-class data as single-class |

+| `image_weights` | `False` | use weighted image selection for training |

+| `rect` | `False` | rectangular training with each batch collated for minimum padding |

+| `cos_lr` | `False` | use cosine learning rate scheduler |

+| `close_mosaic` | `10` | disable mosaic augmentation for final 10 epochs |

+| `resume` | `False` | resume training from last checkpoint |

+| `amp` | `True` | Automatic Mixed Precision (AMP) training, choices=[True, False] |

+| `lr0` | `0.01` | initial learning rate (e.g. SGD=1E-2, Adam=1E-3) |

+| `lrf` | `0.01` | final learning rate (lr0 * lrf) |

+| `momentum` | `0.937` | SGD momentum/Adam beta1 |

+| `weight_decay` | `0.0005` | optimizer weight decay 5e-4 |

+| `warmup_epochs` | `3.0` | warmup epochs (fractions ok) |

+| `warmup_momentum` | `0.8` | warmup initial momentum |

+| `warmup_bias_lr` | `0.1` | warmup initial bias lr |

+| `box` | `7.5` | box loss gain |

+| `cls` | `0.5` | cls loss gain (scale with pixels) |

+| `dfl` | `1.5` | dfl loss gain |

+| `pose` | `12.0` | pose loss gain (pose-only) |

+| `kobj` | `2.0` | keypoint obj loss gain (pose-only) |

+| `fl_gamma` | `0.0` | focal loss gamma (efficientDet default gamma=1.5) |

+| `label_smoothing` | `0.0` | label smoothing (fraction) |

+| `nbs` | `64` | nominal batch size |

+| `overlap_mask` | `True` | masks should overlap during training (segment train only) |

+| `mask_ratio` | `4` | mask downsample ratio (segment train only) |

+| `dropout` | `0.0` | use dropout regularization (classify train only) |

+| `val` | `True` | validate/test during training |
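
Together, `lr0`, `lrf` and `cos_lr` describe how the learning rate decays over training: it starts at `lr0` and ends near `lr0 * lrf`. The sketch below assumes the linear and cosine ("one-cycle") decay factors used by YOLOv5-style trainers. It ignores the warmup phase (`warmup_epochs`, `warmup_bias_lr`), so treat it as an approximation rather than the exact ultralytics scheduler. `lr_factor()` is a hypothetical helper.

```python
import math


def lr_factor(epoch: int, epochs: int, lrf: float, cos_lr: bool) -> float:
    """Multiplier applied to lr0 at a given epoch (assumed schedule, warmup not modeled)."""
    if cos_lr:
        # cosine decay from 1 down to lrf
        return ((1 - math.cos(epoch * math.pi / epochs)) / 2) * (lrf - 1) + 1
    # linear decay from 1 down to lrf
    return (1 - epoch / epochs) * (1.0 - lrf) + lrf


lr0, lrf, epochs = 0.01, 0.01, 100  # defaults from the table above
for epoch in (0, 25, 50, 75, 100):
    linear = lr0 * lr_factor(epoch, epochs, lrf, cos_lr=False)
    cosine = lr0 * lr_factor(epoch, epochs, lrf, cos_lr=True)
    print(f"epoch {epoch:3d}: linear={linear:.6f} cosine={cosine:.6f}")
```

Both schedules start and end at the same values. The cosine variant stays closer to `lr0` early in training and decays faster near the end.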

diff --git a/docs/modes/val.md b/docs/modes/val.md

new file mode 100644

index 0000000..b0a866d

--- /dev/null

+++ b/docs/modes/val.md

@@ -0,0 +1,86 @@

+

+

+**Train mode** is used for training a YOLOv8 model on a custom dataset. In this mode, the model is trained using the

+specified dataset and hyperparameters. The training process involves optimizing the model's parameters so that it can

+accurately predict the classes and locations of objects in an image.

+

+!!! tip "Tip"

+

+ * YOLOv8 datasets like COCO, VOC, ImageNet and many others automatically download on first use, i.e. `yolo train data=coco.yaml`

+

+## Usage Examples

+

+Train YOLOv8n on the COCO128 dataset for 100 epochs at image size 640. See Arguments section below for a full list of

+training arguments.

+

+!!! example ""

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ # Load a model

+ model = YOLO('yolov8n.yaml') # build a new model from YAML

+ model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO('yolov8n.yaml').load('yolov8n.pt') # build from YAML and transfer weights

+

+ # Train the model

+ model.train(data='coco128.yaml', epochs=100, imgsz=640)

+ ```

+ === "CLI"

+

+ ```bash

+ # Build a new model from YAML and start training from scratch

+ yolo detect train data=coco128.yaml model=yolov8n.yaml epochs=100 imgsz=640

+

+ # Start training from a pretrained *.pt model

+ yolo detect train data=coco128.yaml model=yolov8n.pt epochs=100 imgsz=640

+

+ # Build a new model from YAML, transfer pretrained weights to it and start training

+ yolo detect train data=coco128.yaml model=yolov8n.yaml pretrained=yolov8n.pt epochs=100 imgsz=640

+ ```

+

+## Arguments

+

+Training settings for YOLO models refer to the various hyperparameters and configurations used to train the model on a

+dataset. These settings can affect the model's performance, speed, and accuracy. Some common YOLO training settings

+include the batch size, learning rate, momentum, and weight decay. Other factors that may affect the training process

+include the choice of optimizer, the choice of loss function, and the size and composition of the training dataset. It

+is important to carefully tune and experiment with these settings to achieve the best possible performance for a given

+task.

+

+| Key | Value | Description |

+|-------------------|----------|-----------------------------------------------------------------------------|

+| `model` | `None` | path to model file, i.e. yolov8n.pt, yolov8n.yaml |

+| `data` | `None` | path to data file, i.e. coco128.yaml |

+| `epochs` | `100` | number of epochs to train for |

+| `patience` | `50` | epochs to wait for no observable improvement for early stopping of training |

+| `batch` | `16` | number of images per batch (-1 for AutoBatch) |

+| `imgsz` | `640` | size of input images as integer or w,h |

+| `save` | `True` | save train checkpoints and predict results |

+| `save_period` | `-1` | Save checkpoint every x epochs (disabled if < 1) |

+| `cache` | `False` | True/ram, disk or False. Use cache for data loading |

+| `device` | `None` | device to run on, i.e. cuda device=0 or device=0,1,2,3 or device=cpu |

+| `workers` | `8` | number of worker threads for data loading (per RANK if DDP) |

+| `project` | `None` | project name |

+| `name` | `None` | experiment name |

+| `exist_ok` | `False` | whether to overwrite existing experiment |

+| `pretrained` | `False` | whether to use a pretrained model |

+| `optimizer` | `'SGD'` | optimizer to use, choices=['SGD', 'Adam', 'AdamW', 'RMSProp'] |

+| `verbose` | `False` | whether to print verbose output |

+| `seed` | `0` | random seed for reproducibility |

+| `deterministic` | `True` | whether to enable deterministic mode |

+| `single_cls` | `False` | train multi-class data as single-class |

+| `image_weights` | `False` | use weighted image selection for training |

+| `rect` | `False` | rectangular training with each batch collated for minimum padding |

+| `cos_lr` | `False` | use cosine learning rate scheduler |

+| `close_mosaic` | `10` | disable mosaic augmentation for the final N epochs |

+| `resume` | `False` | resume training from last checkpoint |

+| `amp` | `True` | Automatic Mixed Precision (AMP) training, choices=[True, False] |

+| `lr0` | `0.01` | initial learning rate (e.g. SGD=1E-2, Adam=1E-3) |

+| `lrf` | `0.01` | final learning rate (lr0 * lrf) |

+| `momentum` | `0.937` | SGD momentum/Adam beta1 |

+| `weight_decay` | `0.0005` | optimizer weight decay 5e-4 |

+| `warmup_epochs` | `3.0` | warmup epochs (fractions ok) |

+| `warmup_momentum` | `0.8` | warmup initial momentum |

+| `warmup_bias_lr` | `0.1` | warmup initial bias lr |

+| `box` | `7.5` | box loss gain |

+| `cls` | `0.5` | cls loss gain (scale with pixels) |

+| `dfl` | `1.5` | dfl loss gain |

+| `pose` | `12.0` | pose loss gain (pose-only) |

+| `kobj` | `2.0` | keypoint obj loss gain (pose-only) |

+| `fl_gamma` | `0.0` | focal loss gamma (efficientDet default gamma=1.5) |

+| `label_smoothing` | `0.0` | label smoothing (fraction) |

+| `nbs` | `64` | nominal batch size |

+| `overlap_mask` | `True` | masks should overlap during training (segment train only) |

+| `mask_ratio` | `4` | mask downsample ratio (segment train only) |

+| `dropout` | `0.0` | use dropout regularization (classify train only) |

+| `val` | `True` | validate/test during training |
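
Several rows above (`lr0`, `lrf`, `cos_lr`, `nbs`, `weight_decay`) describe quantities that combine arithmetically. The sketch below assumes a linear decay of the learning rate from `lr0` to `lr0 * lrf` (or a half-cosine decay when `cos_lr=True`) and gradient accumulation up to the nominal batch size `nbs`; the function names are hypothetical and this is an illustration, not the Ultralytics trainer itself.

```python
import math


def linear_lf(epoch: int, epochs: int, lrf: float) -> float:
    """Linear LR multiplier: 1.0 at epoch 0, decaying to lrf at the final epoch."""
    return (1 - epoch / epochs) * (1.0 - lrf) + lrf


def cosine_lf(epoch: int, epochs: int, lrf: float) -> float:
    """Half-cosine LR multiplier from 1.0 down to lrf (assumed behaviour of cos_lr=True)."""
    return ((1 - math.cos(epoch * math.pi / epochs)) / 2) * (lrf - 1.0) + 1.0


def accumulate_steps(batch: int, nbs: int = 64) -> int:
    """Number of batches whose gradients are accumulated before one optimizer step."""
    return max(round(nbs / batch), 1)


def scaled_weight_decay(weight_decay: float, batch: int, nbs: int = 64) -> float:
    """Weight decay rescaled by the effective batch size (batch * accumulate) relative to nbs."""
    return weight_decay * batch * accumulate_steps(batch, nbs) / nbs


if __name__ == "__main__":
    lr0, lrf, epochs = 0.01, 0.01, 100  # defaults from the table above
    for e in (0, 50, 100):
        # learning rate at epoch e under each schedule
        print(e, lr0 * linear_lf(e, epochs, lrf), lr0 * cosine_lf(e, epochs, lrf))
    print(accumulate_steps(16), scaled_weight_decay(0.0005, 16))
```

With the defaults `lr0=0.01` and `lrf=0.01`, both schedules end at a learning rate of 1e-4, and `batch=16` accumulates 4 batches to reach `nbs=64`, so the effective weight decay stays at 5e-4.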

diff --git a/docs/modes/val.md b/docs/modes/val.md

new file mode 100644

index 0000000..b0a866d

--- /dev/null

+++ b/docs/modes/val.md

@@ -0,0 +1,86 @@


+**Val mode** is used for validating a YOLOv8 model after it has been trained. In this mode, the model is evaluated on a
+validation set to measure its accuracy and generalization performance. The resulting metrics can then guide tuning of
+the model's hyperparameters to improve its performance.

+

+!!! tip "Tip"

+

+ * YOLOv8 models automatically remember their training settings, so you can validate a model at the same image size and on the original dataset with just `yolo val model=yolov8n.pt` or `YOLO('yolov8n.pt').val()`.

+

+## Usage Examples

+

+Validate trained YOLOv8n model accuracy on the COCO128 dataset. No arguments need to be passed, as the `model` retains its
+training `data` and arguments as model attributes. See the Arguments section below for a full list of validation arguments.

+

+!!! example ""

+

+ === "Python"

+

+ ```python

+ from ultralytics import YOLO

+

+ # Load a model

+ model = YOLO('yolov8n.pt') # load an official model

+ model = YOLO('path/to/best.pt') # load a custom model

+

+ # Validate the model

+ metrics = model.val() # no arguments needed, dataset and settings remembered

+ metrics.box.map # map50-95

+ metrics.box.map50 # map50

+ metrics.box.map75 # map75

+ metrics.box.maps  # a list containing map50-95 for each category

+ ```

+ === "CLI"

+

+ ```bash

+ yolo detect val model=yolov8n.pt # val official model

+ yolo detect val model=path/to/best.pt # val custom model

+ ```
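
The attributes read from `metrics.box` above are different averages of one per-class, per-threshold AP table. A minimal pure-Python sketch, assuming the COCO-style layout of 10 IoU thresholds from 0.50 to 0.95 in steps of 0.05; `summarize_ap` is a hypothetical helper, not part of the Ultralytics API, and the AP numbers are dummy values rather than real results.

```python
from statistics import mean
from typing import Dict, List

# mAP50-95 averages AP over 10 IoU thresholds: 0.50, 0.55, ..., 0.95
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def summarize_ap(ap: List[List[float]]) -> Dict[str, object]:
    """Summarize per-class AP, where ap[c][t] is the AP of class c at IOU_THRESHOLDS[t]."""
    i75 = IOU_THRESHOLDS.index(0.75)  # column for IoU=0.75 (index 5)
    return {
        "map50": mean(row[0] for row in ap),    # IoU=0.50 column, averaged over classes
        "map75": mean(row[i75] for row in ap),  # IoU=0.75 column, averaged over classes
        "map": mean(mean(row) for row in ap),   # all classes and all 10 thresholds
        "maps": [mean(row) for row in ap],      # per-class mAP50-95
    }


# Illustrative dummy AP values for 2 classes (not real results)
ap = [
    [0.80, 0.75, 0.70, 0.65, 0.60, 0.55, 0.50, 0.45, 0.40, 0.35],
    [0.60, 0.55, 0.50, 0.45, 0.40, 0.35, 0.30, 0.25, 0.20, 0.15],
]
print(summarize_ap(ap))
```

Because every class has the same 10 thresholds, the mean of the per-class values in `maps` equals the overall `map`.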

+

+## Arguments

+

+Validation settings for YOLO models are the hyperparameters and configurations used to evaluate the model's
+performance on a validation dataset. These settings do not change the model weights, but they do affect the reported
+metrics and the speed of validation. Common YOLO validation settings include the batch size, the confidence and IoU
+thresholds used for non-maximum suppression, and the dataset split to evaluate on. Results also depend on the size and
+composition of the validation dataset and on the specific task the model is being used for. Tune and experiment with
+these settings carefully to make sure the model performs well on the validation dataset and to detect overfitting.

+

+| Key | Value | Description |

+|---------------|---------|--------------------------------------------------------------------|

+| `data` | `None` | path to data file, e.g. coco128.yaml |
+| `imgsz` | `640` | image size as scalar or (h, w) list, e.g. (640, 480) |

+| `batch` | `16` | number of images per batch (-1 for AutoBatch) |

+| `save_json` | `False` | save results to JSON file |

+| `save_hybrid` | `False` | save hybrid version of labels (labels + additional predictions) |

+| `conf` | `0.001` | object confidence threshold for detection |

+| `iou` | `0.6` | intersection over union (IoU) threshold for NMS |

+| `max_det` | `300` | maximum number of detections per image |

+| `half` | `True` | use half precision (FP16) |

+| `device` | `None` | device to run on, e.g. cuda device=0/1/2/3 or device=cpu |

+| `dnn` | `False` | use OpenCV DNN for ONNX inference |

+| `plots` | `False` | save plots during validation |

+| `rect` | `False` | rectangular val with each batch collated for minimum padding |

+| `split` | `val` | dataset split to use for validation, e.g. 'val', 'test' or 'train' |
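
The `conf`, `iou` and `max_det` arguments act as a score filter, a suppression threshold and a per-image cap. The following simplified, class-agnostic greedy non-maximum suppression in pure Python is written only to illustrate how those three arguments interact; it is a sketch under that assumption, not Ultralytics' implementation (which works on batched tensors), and `box_iou` and `nms` are hypothetical names.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corner coordinates


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union (IoU) of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float],
        conf: float = 0.001, iou: float = 0.6, max_det: int = 300) -> List[int]:
    """Greedy class-agnostic NMS; returns indices of kept boxes, highest score first."""
    # 1. Discard candidates whose score does not exceed `conf`, then sort by score.
    order = sorted((i for i in range(len(boxes)) if scores[i] > conf),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # 2. Suppress a box that overlaps any already-kept box with IoU above `iou`.
        if all(box_iou(boxes[i], boxes[k]) <= iou for k in keep):
            keep.append(i)
            # 3. Stop once `max_det` detections have been kept.
            if len(keep) >= max_det:
                break
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)]
print(nms(boxes, [0.9, 0.8, 0.5]))  # → [0, 2]
```

The second box overlaps the first with IoU 0.81, which exceeds the default `iou=0.6`, so it is suppressed. The very low default `conf=0.001` keeps almost every candidate, which suits computing full precision-recall curves during validation.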

+

+## Export Formats

+

+Available YOLOv8 export formats are in the table below. You can export to any format using the `format` argument,

+e.g. `format='onnx'` or `format='engine'`.

+

+| Format | `format` Argument | Model | Metadata |

+|--------------------------------------------------------------------|-------------------|---------------------------|----------|

+| [PyTorch](https://pytorch.org/) | - | `yolov8n.pt` | ✅ |

+| [TorchScript](https://pytorch.org/docs/stable/jit.html) | `torchscript` | `yolov8n.torchscript` | ✅ |

+| [ONNX](https://onnx.ai/) | `onnx` | `yolov8n.onnx` | ✅ |

+| [OpenVINO](https://docs.openvino.ai/latest/index.html) | `openvino` | `yolov8n_openvino_model/` | ✅ |

+| [TensorRT](https://developer.nvidia.com/tensorrt) | `engine` | `yolov8n.engine` | ✅ |

+| [CoreML](https://github.com/apple/coremltools) | `coreml` | `yolov8n.mlmodel` | ✅ |

+| [TF SavedModel](https://www.tensorflow.org/guide/saved_model) | `saved_model` | `yolov8n_saved_model/` | ✅ |

+| [TF GraphDef](https://www.tensorflow.org/api_docs/python/tf/Graph) | `pb` | `yolov8n.pb` | ❌ |

+| [TF Lite](https://www.tensorflow.org/lite) | `tflite` | `yolov8n.tflite` | ✅ |

+| [TF Edge TPU](https://coral.ai/docs/edgetpu/models-intro/) | `edgetpu` | `yolov8n_edgetpu.tflite` | ✅ |

+| [TF.js](https://www.tensorflow.org/js) | `tfjs` | `yolov8n_web_model/` | ✅ |

+| [PaddlePaddle](https://github.com/PaddlePaddle) | `paddle` | `yolov8n_paddle_model/` | ✅ |
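
The naming pattern in this table can be captured as a lookup from the `format` argument to the exported artifact. A small sketch; `EXPORT_NAMES` and `exported_name` are hypothetical, the `'pytorch'` key stands in for the table's `-` entry, and the assumption that other model stems (e.g. `yolov8s`) follow the same pattern as `yolov8n` is not stated in the table.

```python
# Artifact name templates from the table above, keyed by the `format` argument.
# 'pytorch' is a stand-in key for the PyTorch row, whose format argument is '-'.
EXPORT_NAMES = {
    "pytorch": "{stem}.pt",
    "torchscript": "{stem}.torchscript",
    "onnx": "{stem}.onnx",
    "openvino": "{stem}_openvino_model/",
    "engine": "{stem}.engine",
    "coreml": "{stem}.mlmodel",
    "saved_model": "{stem}_saved_model/",
    "pb": "{stem}.pb",
    "tflite": "{stem}.tflite",
    "edgetpu": "{stem}_edgetpu.tflite",
    "tfjs": "{stem}_web_model/",
    "paddle": "{stem}_paddle_model/",
}


def exported_name(stem: str, fmt: str) -> str:
    """Return the expected export file or directory name for a model stem such as 'yolov8n'."""
    try:
        return EXPORT_NAMES[fmt.lower()].format(stem=stem)
    except KeyError:
        raise ValueError(f"unknown format {fmt!r}; choose from {sorted(EXPORT_NAMES)}") from None


print(exported_name("yolov8n", "openvino"))  # → yolov8n_openvino_model/
```

Names ending in `/` are directories rather than single files.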

diff --git a/docs/quickstart.md b/docs/quickstart.md

new file mode 100644

index 0000000..8725b77

--- /dev/null

+++ b/docs/quickstart.md

@@ -0,0 +1,133 @@

+## Install

+

+Install YOLOv8 via the `ultralytics` pip package for the latest stable release or by cloning

+the [https://github.com/ultralytics/ultralytics](https://github.com/ultralytics/ultralytics) repository for the most

+up-to-date version.

+

+!!! example "Install"

+

+ === "pip install (recommended)"

+ ```bash

+ pip install ultralytics

+ ```

+

+ === "git clone (for development)"

+ ```bash

+ git clone https://github.com/ultralytics/ultralytics

+ cd ultralytics

+ pip install -e .

+ ```

+

+See the `ultralytics` [requirements.txt](https://github.com/ultralytics/ultralytics/blob/main/requirements.txt) file for a list of dependencies. Note that `pip` automatically installs all required dependencies.
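
To confirm which `ultralytics` version pip installed (including an editable `pip install -e .` install), the standard-library `importlib.metadata` module can be queried. A small sketch requiring Python 3.8+; `installed_version` is a hypothetical helper, not part of the `ultralytics` package.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str = "ultralytics") -> Optional[str]:
    """Return the installed version string of `package`, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    v = installed_version("ultralytics")
    print(f"ultralytics {v}" if v else "ultralytics is not installed; run `pip install ultralytics`")
```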

+

+!!! tip "Tip"

+

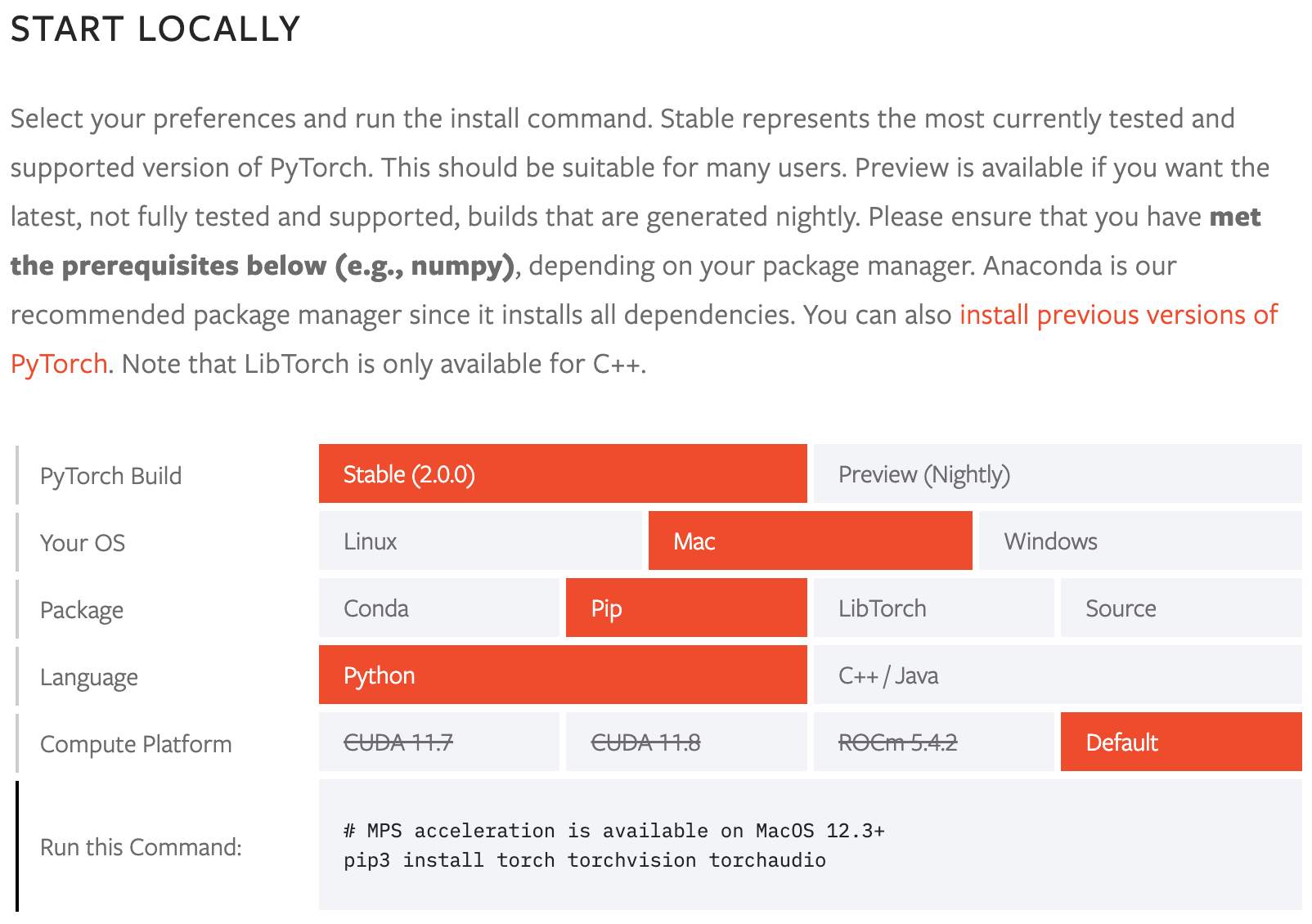

+ PyTorch requirements vary by operating system and CUDA requirements, so it's recommended to install PyTorch first following instructions at [https://pytorch.org/get-started/locally](https://pytorch.org/get-started/locally).

+
