mirror of

https://github.com/libp2p/go-libp2p.git

synced 2025-09-26 20:21:26 +08:00

net2: separate protocols/services out.

using a placeholder net2 package so tests continue to pass. Will be swapped atomically into main code.


17

net2/README.md

Normal file


@@ -0,0 +1,17 @@

|

||||

# Network

|

||||

|

||||

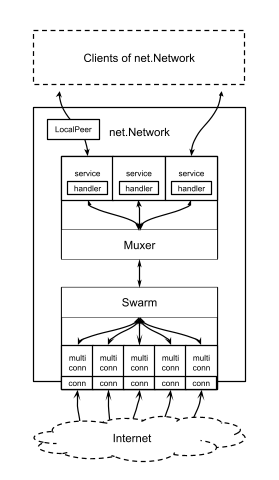

The IPFS Network package handles all of the peer-to-peer networking. It connects to other hosts, it encrypts communications, it muxes messages between the network's client services and target hosts. It has multiple subcomponents:

|

||||

|

||||

- `Conn` - a connection to a single Peer

|

||||

- `MultiConn` - a set of connections to a single Peer

|

||||

- `SecureConn` - an encrypted (tls-like) connection

|

||||

- `Swarm` - holds connections to Peers, multiplexes from/to each `MultiConn`

|

||||

- `Muxer` - multiplexes between `Services` and `Swarm`. Handles `Requet/Reply`.

|

||||

- `Service` - connects between an outside client service and Network.

|

||||

- `Handler` - the client service part that handles requests

|

||||

|

||||

It looks a bit like this:

|

||||

|

||||

<center>

|

||||

|

||||

</center>

|

||||

157

net2/conn/conn.go

Normal file


@@ -0,0 +1,157 @@

|

||||

package conn

|

||||

|

||||

import (

|

||||

"fmt"

|

||||

"net"

|

||||

"time"

|

||||

|

||||

context "github.com/jbenet/go-ipfs/Godeps/_workspace/src/code.google.com/p/go.net/context"

|

||||

msgio "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-msgio"

|

||||

mpool "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-msgio/mpool"

|

||||

ma "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-multiaddr"

|

||||

manet "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-multiaddr-net"

|

||||

|

||||

ic "github.com/jbenet/go-ipfs/p2p/crypto"

|

||||

peer "github.com/jbenet/go-ipfs/p2p/peer"

|

||||

u "github.com/jbenet/go-ipfs/util"

|

||||

eventlog "github.com/jbenet/go-ipfs/util/eventlog"

|

||||

)

|

||||

|

||||

var log = eventlog.Logger("conn")

|

||||

|

||||

// ReleaseBuffer puts the given byte array back into the buffer pool,

|

||||

// first verifying that it is the correct size

|

||||

func ReleaseBuffer(b []byte) {

|

||||

log.Debugf("Releasing buffer! (cap,size = %d, %d)", cap(b), len(b))

|

||||

mpool.ByteSlicePool.Put(uint32(cap(b)), b)

|

||||

}

|

||||

|

||||

// singleConn represents a single connection to another Peer (IPFS Node).

|

||||

type singleConn struct {

|

||||

local peer.ID

|

||||

remote peer.ID

|

||||

maconn manet.Conn

|

||||

msgrw msgio.ReadWriteCloser

|

||||

}

|

||||

|

||||

// newSingleConn constructs a new connection

|

||||

func newSingleConn(ctx context.Context, local, remote peer.ID, maconn manet.Conn) (Conn, error) {

|

||||

|

||||

conn := &singleConn{

|

||||

local: local,

|

||||

remote: remote,

|

||||

maconn: maconn,

|

||||

msgrw: msgio.NewReadWriter(maconn),

|

||||

}

|

||||

|

||||

log.Debugf("newSingleConn %p: %v to %v", conn, local, remote)

|

||||

return conn, nil

|

||||

}

|

||||

|

||||

// Close closes the connection by closing the underlying message reader/writer.

|

||||

func (c *singleConn) Close() error {

|

||||

log.Debugf("%s closing Conn with %s", c.local, c.remote)

|

||||

// close underlying connection

|

||||

return c.msgrw.Close()

|

||||

}

|

||||

|

||||

// ID is an identifier unique to this connection.

|

||||

func (c *singleConn) ID() string {

|

||||

return ID(c)

|

||||

}

|

||||

|

||||

func (c *singleConn) String() string {

|

||||

return String(c, "singleConn")

|

||||

}

|

||||

|

||||

func (c *singleConn) LocalAddr() net.Addr {

|

||||

return c.maconn.LocalAddr()

|

||||

}

|

||||

|

||||

func (c *singleConn) RemoteAddr() net.Addr {

|

||||

return c.maconn.RemoteAddr()

|

||||

}

|

||||

|

||||

func (c *singleConn) LocalPrivateKey() ic.PrivKey {

|

||||

return nil

|

||||

}

|

||||

|

||||

func (c *singleConn) RemotePublicKey() ic.PubKey {

|

||||

return nil

|

||||

}

|

||||

|

||||

func (c *singleConn) SetDeadline(t time.Time) error {

|

||||

return c.maconn.SetDeadline(t)

|

||||

}

|

||||

func (c *singleConn) SetReadDeadline(t time.Time) error {

|

||||

return c.maconn.SetReadDeadline(t)

|

||||

}

|

||||

|

||||

func (c *singleConn) SetWriteDeadline(t time.Time) error {

|

||||

return c.maconn.SetWriteDeadline(t)

|

||||

}

|

||||

|

||||

// LocalMultiaddr is the Multiaddr on this side

|

||||

func (c *singleConn) LocalMultiaddr() ma.Multiaddr {

|

||||

return c.maconn.LocalMultiaddr()

|

||||

}

|

||||

|

||||

// RemoteMultiaddr is the Multiaddr on the remote side

|

||||

func (c *singleConn) RemoteMultiaddr() ma.Multiaddr {

|

||||

return c.maconn.RemoteMultiaddr()

|

||||

}

|

||||

|

||||

// LocalPeer is the Peer on this side

|

||||

func (c *singleConn) LocalPeer() peer.ID {

|

||||

return c.local

|

||||

}

|

||||

|

||||

// RemotePeer is the Peer on the remote side

|

||||

func (c *singleConn) RemotePeer() peer.ID {

|

||||

return c.remote

|

||||

}

|

||||

|

||||

// Read reads data, net.Conn style

|

||||

func (c *singleConn) Read(buf []byte) (int, error) {

|

||||

return c.msgrw.Read(buf)

|

||||

}

|

||||

|

||||

// Write writes data, net.Conn style

|

||||

func (c *singleConn) Write(buf []byte) (int, error) {

|

||||

return c.msgrw.Write(buf)

|

||||

}

|

||||

|

||||

func (c *singleConn) NextMsgLen() (int, error) {

|

||||

return c.msgrw.NextMsgLen()

|

||||

}

|

||||

|

||||

// ReadMsg reads the next whole message, msgio style

|

||||

func (c *singleConn) ReadMsg() ([]byte, error) {

|

||||

return c.msgrw.ReadMsg()

|

||||

}

|

||||

|

||||

// WriteMsg writes one whole message, msgio style

|

||||

func (c *singleConn) WriteMsg(buf []byte) error {

|

||||

return c.msgrw.WriteMsg(buf)

|

||||

}

|

||||

|

||||

// ReleaseMsg releases a buffer

|

||||

func (c *singleConn) ReleaseMsg(m []byte) {

|

||||

c.msgrw.ReleaseMsg(m)

|

||||

}

|

||||

|

||||

// ID returns the ID of a given Conn.

|

||||

func ID(c Conn) string {

|

||||

l := fmt.Sprintf("%s/%s", c.LocalMultiaddr(), c.LocalPeer().Pretty())

|

||||

r := fmt.Sprintf("%s/%s", c.RemoteMultiaddr(), c.RemotePeer().Pretty())

|

||||

lh := u.Hash([]byte(l))

|

||||

rh := u.Hash([]byte(r))

|

||||

ch := u.XOR(lh, rh)

|

||||

return u.Key(ch).Pretty()

|

||||

}

|

||||

|

||||

// String returns the user-friendly String representation of a conn

|

||||

func String(c Conn, typ string) string {

|

||||

return fmt.Sprintf("%s (%s) <-- %s %p --> (%s) %s",

|

||||

c.LocalPeer(), c.LocalMultiaddr(), typ, c, c.RemoteMultiaddr(), c.RemotePeer())

|

||||

}
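The `ID` helper above hashes each endpoint's `multiaddr/peer` string and XORs the two digests, so both sides of a connection should derive the same identifier no matter which one is "local". The following is a minimal standalone sketch of that idea, not the package's code: it assumes plain SHA-256 and hex output in place of `u.Hash`, `u.XOR` and `u.Key(...).Pretty()`, whose encodings are not shown in this diff, and the names `endpointHash` and `connID` as well as the addresses are made up for illustration.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// endpointHash hashes one side of a connection, described as "addr/peer".
// (Hypothetical helper; the real code uses u.Hash.)
func endpointHash(addr, peerID string) [sha256.Size]byte {
	return sha256.Sum256([]byte(addr + "/" + peerID))
}

// connID XORs the two endpoint hashes. XOR is commutative, so swapping
// local and remote yields the same ID.
func connID(localAddr, localPeer, remoteAddr, remotePeer string) string {
	l := endpointHash(localAddr, localPeer)
	r := endpointHash(remoteAddr, remotePeer)
	out := make([]byte, sha256.Size)
	for i := range out {
		out[i] = l[i] ^ r[i]
	}
	return hex.EncodeToString(out)
}

func main() {
	a := connID("/ip4/127.0.0.1/tcp/4001", "peerA", "/ip4/127.0.0.1/tcp/4002", "peerB")
	b := connID("/ip4/127.0.0.1/tcp/4002", "peerB", "/ip4/127.0.0.1/tcp/4001", "peerA")
	fmt.Println(a == b) // prints true
}
```

One consequence of the XOR construction is that the ID identifies the pair of endpoints, not a direction, which is presumably why both peers can log and compare the same value.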

|

||||

122

net2/conn/conn_test.go

Normal file


@@ -0,0 +1,122 @@

|

||||

package conn

|

||||

|

||||

import (

|

||||

"bytes"

|

||||

"fmt"

|

||||

"os"

|

||||

"runtime"

|

||||

"sync"

|

||||

"testing"

|

||||

"time"

|

||||

|

||||

context "github.com/jbenet/go-ipfs/Godeps/_workspace/src/code.google.com/p/go.net/context"

|

||||

)

|

||||

|

||||

func testOneSendRecv(t *testing.T, c1, c2 Conn) {

|

||||

log.Debugf("testOneSendRecv from %s to %s", c1.LocalPeer(), c2.LocalPeer())

|

||||

m1 := []byte("hello")

|

||||

if err := c1.WriteMsg(m1); err != nil {

|

||||

t.Fatal(err)

|

||||

}

|

||||

m2, err := c2.ReadMsg()

|

||||

if err != nil {

|

||||

t.Fatal(err)

|

||||

}

|

||||

if !bytes.Equal(m1, m2) {

|

||||

t.Fatalf("failed to send: %s %s", m1, m2)

|

||||

}

|

||||

}

|

||||

|

||||

func testNotOneSendRecv(t *testing.T, c1, c2 Conn) {

|

||||

m1 := []byte("hello")

|

||||

if err := c1.WriteMsg(m1); err == nil {

|

||||

t.Fatal("write should have failed", err)

|

||||

}

|

||||

_, err := c2.ReadMsg()

|

||||

if err == nil {

|

||||

t.Fatal("read should have failed", err)

|

||||

}

|

||||

}

|

||||

|

||||

func TestClose(t *testing.T) {

|

||||

// t.Skip("Skipping in favor of another test")

|

||||

|

||||

ctx, cancel := context.WithCancel(context.Background())

|

||||

defer cancel()

|

||||

c1, c2, _, _ := setupSingleConn(t, ctx)

|

||||

|

||||

testOneSendRecv(t, c1, c2)

|

||||

testOneSendRecv(t, c2, c1)

|

||||

|

||||

c1.Close()

|

||||

testNotOneSendRecv(t, c1, c2)

|

||||

|

||||

c2.Close()

|

||||

testNotOneSendRecv(t, c2, c1)

|

||||

testNotOneSendRecv(t, c1, c2)

|

||||

}

|

||||

|

||||

func TestCloseLeak(t *testing.T) {

|

||||

// t.Skip("Skipping in favor of another test")

|

||||

if testing.Short() {

|

||||

t.SkipNow()

|

||||

}

|

||||

|

||||

if os.Getenv("TRAVIS") == "true" {

|

||||

t.Skip("this doesn't work well on travis")

|

||||

}

|

||||

|

||||

var wg sync.WaitGroup

|

||||

|

||||

runPair := func(num int) {

|

||||

ctx, cancel := context.WithCancel(context.Background())

|

||||

c1, c2, _, _ := setupSingleConn(t, ctx)

|

||||

|

||||

for i := 0; i < num; i++ {

|

||||

b1 := []byte(fmt.Sprintf("beep%d", i))

|

||||

c1.WriteMsg(b1)

|

||||

b2, err := c2.ReadMsg()

|

||||

if err != nil {

|

||||

panic(err)

|

||||

}

|

||||

if !bytes.Equal(b1, b2) {

|

||||

panic(fmt.Errorf("bytes not equal: %s != %s", b1, b2))

|

||||

}

|

||||

|

||||

b2 = []byte(fmt.Sprintf("boop%d", i))

|

||||

c2.WriteMsg(b2)

|

||||

b1, err = c1.ReadMsg()

|

||||

if err != nil {

|

||||

panic(err)

|

||||

}

|

||||

if !bytes.Equal(b1, b2) {

|

||||

panic(fmt.Errorf("bytes not equal: %s != %s", b1, b2))

|

||||

}

|

||||

|

||||

<-time.After(time.Microsecond * 5)

|

||||

}

|

||||

|

||||

c1.Close()

|

||||

c2.Close()

|

||||

cancel() // close the listener

|

||||

wg.Done()

|

||||

}

|

||||

|

||||

var cons = 5

|

||||

var msgs = 50

|

||||

log.Debugf("Running %d connections * %d msgs.\n", cons, msgs)

|

||||

for i := 0; i < cons; i++ {

|

||||

wg.Add(1)

|

||||

go runPair(msgs)

|

||||

}

|

||||

|

||||

log.Debugf("Waiting...\n")

|

||||

wg.Wait()

|

||||

// done!

|

||||

|

||||

<-time.After(time.Millisecond * 150)

|

||||

if runtime.NumGoroutine() > 20 {

|

||||

// panic("uncomment me to debug")

|

||||

t.Fatal("leaking goroutines:", runtime.NumGoroutine())

|

||||

}

|

||||

}

|

||||

131

net2/conn/dial.go

Normal file


@@ -0,0 +1,131 @@

|

||||

package conn

|

||||

|

||||

import (

|

||||

"fmt"

|

||||

"strings"

|

||||

|

||||

context "github.com/jbenet/go-ipfs/Godeps/_workspace/src/code.google.com/p/go.net/context"

|

||||

ma "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-multiaddr"

|

||||

manet "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-multiaddr-net"

|

||||

|

||||

peer "github.com/jbenet/go-ipfs/p2p/peer"

|

||||

debugerror "github.com/jbenet/go-ipfs/util/debugerror"

|

||||

)

|

||||

|

||||

// String returns the string rep of d.

|

||||

func (d *Dialer) String() string {

|

||||

return fmt.Sprintf("<Dialer %s %s ...>", d.LocalPeer, d.LocalAddrs[0])

|

||||

}

|

||||

|

||||

// Dial connects to a peer over a particular address

|

||||

// Ensures raddr is part of peer.Addresses()

|

||||

// Example: d.Dial(ctx, peer.Addresses()[0], peer)

|

||||

func (d *Dialer) Dial(ctx context.Context, raddr ma.Multiaddr, remote peer.ID) (Conn, error) {

|

||||

|

||||

network, _, err := manet.DialArgs(raddr)

|

||||

if err != nil {

|

||||

return nil, err

|

||||

}

|

||||

|

||||

if strings.HasPrefix(raddr.String(), "/ip4/0.0.0.0") {

|

||||

return nil, debugerror.Errorf("Attempted to connect to zero address: %s", raddr)

|

||||

}

|

||||

|

||||

var laddr ma.Multiaddr

|

||||

if len(d.LocalAddrs) > 0 {

|

||||

// laddr := MultiaddrNetMatch(raddr, d.LocalAddrs)

|

||||

laddr = NetAddress(network, d.LocalAddrs)

|

||||

if laddr == nil {

|

||||

return nil, debugerror.Errorf("No local address for network %s", network)

|

||||

}

|

||||

}

|

||||

|

||||

// TODO: try to get reusing addr/ports to work.

|

||||

// madialer := manet.Dialer{LocalAddr: laddr}

|

||||

madialer := manet.Dialer{}

|

||||

|

||||

log.Debugf("%s dialing %s %s", d.LocalPeer, remote, raddr)

|

||||

maconn, err := madialer.Dial(raddr)

|

||||

if err != nil {

|

||||

return nil, err

|

||||

}

|

||||

|

||||

var connOut Conn

|

||||

var errOut error

|

||||

done := make(chan struct{}, 1) // buffered: the goroutine below can finish even if we already returned on ctx.Done()

|

||||

|

||||

// do it async to ensure we respect the context being done

|

||||

go func() {

|

||||

defer func() { done <- struct{}{} }()

|

||||

|

||||

c, err := newSingleConn(ctx, d.LocalPeer, remote, maconn)

|

||||

if err != nil {

|

||||

errOut = err

|

||||

return

|

||||

}

|

||||

|

||||

if d.PrivateKey == nil {

|

||||

log.Warningf("dialer %s dialing INSECURELY %s at %s!", d, remote, raddr)

|

||||

connOut = c

|

||||

return

|

||||

}

|

||||

c2, err := newSecureConn(ctx, d.PrivateKey, c)

|

||||

if err != nil {

|

||||

errOut = err

|

||||

c.Close()

|

||||

return

|

||||

}

|

||||

|

||||

connOut = c2

|

||||

}()

|

||||

|

||||

select {

|

||||

case <-ctx.Done():

|

||||

maconn.Close()

|

||||

return nil, ctx.Err()

|

||||

case <-done:

|

||||

// whew, finished.

|

||||

}

|

||||

|

||||

return connOut, errOut

|

||||

}

|

||||

|

||||

// MultiaddrProtocolsMatch returns whether two multiaddrs match in protocol stacks.

|

||||

func MultiaddrProtocolsMatch(a, b ma.Multiaddr) bool {

|

||||

ap := a.Protocols()

|

||||

bp := b.Protocols()

|

||||

|

||||

if len(ap) != len(bp) {

|

||||

return false

|

||||

}

|

||||

|

||||

for i, api := range ap {

|

||||

if api != bp[i] {

|

||||

return false

|

||||

}

|

||||

}

|

||||

|

||||

return true

|

||||

}

|

||||

|

||||

// MultiaddrNetMatch returns the first Multiaddr found to match network.

|

||||

func MultiaddrNetMatch(tgt ma.Multiaddr, srcs []ma.Multiaddr) ma.Multiaddr {

|

||||

for _, a := range srcs {

|

||||

if MultiaddrProtocolsMatch(tgt, a) {

|

||||

return a

|

||||

}

|

||||

}

|

||||

return nil

|

||||

}

|

||||

|

||||

// NetAddress returns the first Multiaddr found for a given network.

|

||||

func NetAddress(n string, addrs []ma.Multiaddr) ma.Multiaddr {

|

||||

for _, a := range addrs {

|

||||

for _, p := range a.Protocols() {

|

||||

if p.Name == n {

|

||||

return a

|

||||

}

|

||||

}

|

||||

}

|

||||

return nil

|

||||

}
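`MultiaddrProtocolsMatch` and `MultiaddrNetMatch` compare addresses by their protocol stacks only, ignoring the concrete values (IP, port). A rough standalone sketch of the same idea on textual addresses follows. It does not use the go-multiaddr library; the function names are hypothetical, and the parser makes the simplifying assumption that every protocol carries exactly one value, which does not hold for all real multiaddr protocols.

```go
package main

import (
	"fmt"
	"strings"
)

// protocols extracts protocol names from a textual multiaddr such as
// "/ip4/127.0.0.1/tcp/4001". Simplification: each protocol is assumed to be
// followed by exactly one value.
func protocols(addr string) []string {
	parts := strings.Split(strings.Trim(addr, "/"), "/")
	var names []string
	for i := 0; i < len(parts); i += 2 {
		names = append(names, parts[i])
	}
	return names
}

// protocolsMatch reports whether two addresses have identical protocol stacks.
func protocolsMatch(a, b string) bool {
	ap, bp := protocols(a), protocols(b)
	if len(ap) != len(bp) {
		return false
	}
	for i := range ap {
		if ap[i] != bp[i] {
			return false
		}
	}
	return true
}

// firstMatch returns the first candidate whose protocol stack matches tgt,
// or "" if none does.
func firstMatch(tgt string, candidates []string) string {
	for _, c := range candidates {
		if protocolsMatch(tgt, c) {
			return c
		}
	}
	return ""
}

func main() {
	local := []string{"/ip6/::1/tcp/4001", "/ip4/127.0.0.1/tcp/4001"}
	fmt.Println(firstMatch("/ip4/10.0.0.5/tcp/9000", local)) // prints /ip4/127.0.0.1/tcp/4001
}
```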

|

||||

165

net2/conn/dial_test.go

Normal file


@@ -0,0 +1,165 @@

|

||||

package conn

|

||||

|

||||

import (

|

||||

"io"

|

||||

"net"

|

||||

"testing"

|

||||

"time"

|

||||

|

||||

tu "github.com/jbenet/go-ipfs/util/testutil"

|

||||

|

||||

context "github.com/jbenet/go-ipfs/Godeps/_workspace/src/code.google.com/p/go.net/context"

|

||||

)

|

||||

|

||||

func echoListen(ctx context.Context, listener Listener) {

|

||||

for {

|

||||

c, err := listener.Accept()

|

||||

if err != nil {

|

||||

|

||||

select {

|

||||

case <-ctx.Done():

|

||||

return

|

||||

default:

|

||||

}

|

||||

|

||||

if ne, ok := err.(net.Error); ok && ne.Temporary() {

|

||||

<-time.After(time.Microsecond * 10)

|

||||

continue

|

||||

}

|

||||

|

||||

log.Debugf("echoListen: listener appears to be closing")

|

||||

return

|

||||

}

|

||||

|

||||

go echo(c.(Conn))

|

||||

}

|

||||

}

|

||||

|

||||

func echo(c Conn) {

|

||||

io.Copy(c, c)

|

||||

}

|

||||

|

||||

func setupSecureConn(t *testing.T, ctx context.Context) (a, b Conn, p1, p2 tu.PeerNetParams) {

|

||||

return setupConn(t, ctx, true)

|

||||

}

|

||||

|

||||

func setupSingleConn(t *testing.T, ctx context.Context) (a, b Conn, p1, p2 tu.PeerNetParams) {

|

||||

return setupConn(t, ctx, false)

|

||||

}

|

||||

|

||||

func setupConn(t *testing.T, ctx context.Context, secure bool) (a, b Conn, p1, p2 tu.PeerNetParams) {

|

||||

|

||||

p1 = tu.RandPeerNetParamsOrFatal(t)

|

||||

p2 = tu.RandPeerNetParamsOrFatal(t)

|

||||

laddr := p1.Addr

|

||||

|

||||

key1 := p1.PrivKey

|

||||

key2 := p2.PrivKey

|

||||

if !secure {

|

||||

key1 = nil

|

||||

key2 = nil

|

||||

}

|

||||

l1, err := Listen(ctx, laddr, p1.ID, key1)

|

||||

if err != nil {

|

||||

t.Fatal(err)

|

||||

}

|

||||

|

||||

d2 := &Dialer{

|

||||

LocalPeer: p2.ID,

|

||||

PrivateKey: key2,

|

||||

}

|

||||

|

||||

var c2 Conn

|

||||

|

||||

done := make(chan error)

|

||||

go func() {

|

||||

var err error

|

||||

c2, err = d2.Dial(ctx, p1.Addr, p1.ID)

|

||||

if err != nil {

|

||||

done <- err

|

||||

}

|

||||

close(done)

|

||||

}()

|

||||

|

||||

c1, err := l1.Accept()

|

||||

if err != nil {

|

||||

t.Fatal("failed to accept", err)

|

||||

}

|

||||

if err := <-done; err != nil {

|

||||

t.Fatal(err)

|

||||

}

|

||||

|

||||

return c1.(Conn), c2, p1, p2

|

||||

}

|

||||

|

||||

func testDialer(t *testing.T, secure bool) {

|

||||

// t.Skip("Skipping in favor of another test")

|

||||

|

||||

p1 := tu.RandPeerNetParamsOrFatal(t)

|

||||

p2 := tu.RandPeerNetParamsOrFatal(t)

|

||||

|

||||

key1 := p1.PrivKey

|

||||

key2 := p2.PrivKey

|

||||

if !secure {

|

||||

key1 = nil

|

||||

key2 = nil

|

||||

}

|

||||

|

||||

ctx, cancel := context.WithCancel(context.Background())

|

||||

l1, err := Listen(ctx, p1.Addr, p1.ID, key1)

|

||||

if err != nil {

|

||||

t.Fatal(err)

|

||||

}

|

||||

|

||||

d2 := &Dialer{

|

||||

LocalPeer: p2.ID,

|

||||

PrivateKey: key2,

|

||||

}

|

||||

|

||||

go echoListen(ctx, l1)

|

||||

|

||||

c, err := d2.Dial(ctx, p1.Addr, p1.ID)

|

||||

if err != nil {

|

||||

t.Fatal("error dialing peer", err)

|

||||

}

|

||||

|

||||

// fmt.Println("sending")

|

||||

c.WriteMsg([]byte("beep"))

|

||||

c.WriteMsg([]byte("boop"))

|

||||

|

||||

out, err := c.ReadMsg()

|

||||

if err != nil {

|

||||

t.Fatal(err)

|

||||

}

|

||||

|

||||

// fmt.Println("recving", string(out))

|

||||

data := string(out)

|

||||

if data != "beep" {

|

||||

t.Error("unexpected conn output", data)

|

||||

}

|

||||

|

||||

out, err = c.ReadMsg()

|

||||

if err != nil {

|

||||

t.Fatal(err)

|

||||

}

|

||||

|

||||

data = string(out)

|

||||

if string(out) != "boop" {

|

||||

t.Error("unexpected conn output", data)

|

||||

}

|

||||

|

||||

// fmt.Println("closing")

|

||||

c.Close()

|

||||

l1.Close()

|

||||

cancel()

|

||||

}

|

||||

|

||||

func TestDialerInsecure(t *testing.T) {

|

||||

// t.Skip("Skipping in favor of another test")

|

||||

testDialer(t, false)

|

||||

}

|

||||

|

||||

func TestDialerSecure(t *testing.T) {

|

||||

// t.Skip("Skipping in favor of another test")

|

||||

testDialer(t, true)

|

||||

}
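The dialer tests rely on an echo peer that simply copies everything it reads back to the sender with `io.Copy(c, c)`. The sketch below reproduces that round trip on an in-memory `net.Pipe` instead of a real listener, so it needs no network access; `roundTrip` is a hypothetical helper and the raw byte stream here has none of the message framing that `WriteMsg`/`ReadMsg` add.

```go
package main

import (
	"fmt"
	"io"
	"net"
)

// echo copies everything read from c back to c until the peer closes.
func echo(c net.Conn) {
	defer c.Close()
	io.Copy(c, c)
}

// roundTrip sends msg to an in-memory echo peer and returns what comes back.
func roundTrip(msg string) (string, error) {
	client, server := net.Pipe()
	defer client.Close()
	go echo(server)

	if _, err := client.Write([]byte(msg)); err != nil {
		return "", err
	}
	buf := make([]byte, len(msg))
	if _, err := io.ReadFull(client, buf); err != nil {
		return "", err
	}
	return string(buf), nil
}

func main() {
	out, err := roundTrip("beep")
	fmt.Println(out, err) // prints beep <nil>
}
```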

|

||||

84

net2/conn/interface.go

Normal file


@@ -0,0 +1,84 @@

|

||||

package conn

|

||||

|

||||

import (

|

||||

"io"

|

||||

"net"

|

||||

"time"

|

||||

|

||||

ic "github.com/jbenet/go-ipfs/p2p/crypto"

|

||||

peer "github.com/jbenet/go-ipfs/p2p/peer"

|

||||

u "github.com/jbenet/go-ipfs/util"

|

||||

|

||||

msgio "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-msgio"

|

||||

ma "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-multiaddr"

|

||||

)

|

||||

|

||||

// Map maps Keys (Peer.IDs) to Connections.

|

||||

type Map map[u.Key]Conn

|

||||

|

||||

type PeerConn interface {

|

||||

// LocalPeer (this side) ID, PrivateKey, and Address

|

||||

LocalPeer() peer.ID

|

||||

LocalPrivateKey() ic.PrivKey

|

||||

LocalMultiaddr() ma.Multiaddr

|

||||

|

||||

// RemotePeer ID, PublicKey, and Address

|

||||

RemotePeer() peer.ID

|

||||

RemotePublicKey() ic.PubKey

|

||||

RemoteMultiaddr() ma.Multiaddr

|

||||

}

|

||||

|

||||

// Conn is a generic message-based Peer-to-Peer connection.

|

||||

type Conn interface {

|

||||

PeerConn

|

||||

|

||||

// ID is an identifier unique to this connection.

|

||||

ID() string

|

||||

|

||||

// can't just say "net.Conn" cause we have duplicate methods.

|

||||

LocalAddr() net.Addr

|

||||

RemoteAddr() net.Addr

|

||||

SetDeadline(t time.Time) error

|

||||

SetReadDeadline(t time.Time) error

|

||||

SetWriteDeadline(t time.Time) error

|

||||

|

||||

msgio.Reader

|

||||

msgio.Writer

|

||||

io.Closer

|

||||

}

|

||||

|

||||

// Dialer is an object that can open connections. We could have a "convenience"

|

||||

// Dial function as before, but it would have many arguments, as dialing is

|

||||

// no longer simple (need a peerstore, a local peer, a context, a network, etc)

|

||||

type Dialer struct {

|

||||

|

||||

// LocalPeer is the identity of the local Peer.

|

||||

LocalPeer peer.ID

|

||||

|

||||

// LocalAddrs is a set of local addresses to use.

|

||||

LocalAddrs []ma.Multiaddr

|

||||

|

||||

// PrivateKey used to initialize a secure connection.

|

||||

// Warning: if PrivateKey is nil, connection will not be secured.

|

||||

PrivateKey ic.PrivKey

|

||||

}

|

||||

|

||||

// Listener is an object that can accept connections. It matches net.Listener

|

||||

type Listener interface {

|

||||

|

||||

// Accept waits for and returns the next connection to the listener.

|

||||

Accept() (net.Conn, error)

|

||||

|

||||

// Addr is the local address

|

||||

Addr() net.Addr

|

||||

|

||||

// Multiaddr is the local multiaddr address

|

||||

Multiaddr() ma.Multiaddr

|

||||

|

||||

// LocalPeer is the identity of the local Peer.

|

||||

LocalPeer() peer.ID

|

||||

|

||||

// Close closes the listener.

|

||||

// Any blocked Accept operations will be unblocked and return errors.

|

||||

Close() error

|

||||

}

|

||||

115

net2/conn/listen.go

Normal file


@@ -0,0 +1,115 @@

|

||||

package conn

|

||||

|

||||

import (

|

||||

"fmt"

|

||||

"net"

|

||||

|

||||

context "github.com/jbenet/go-ipfs/Godeps/_workspace/src/code.google.com/p/go.net/context"

|

||||

ctxgroup "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-ctxgroup"

|

||||

ma "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-multiaddr"

|

||||

manet "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-multiaddr-net"

|

||||

|

||||

ic "github.com/jbenet/go-ipfs/p2p/crypto"

|

||||

peer "github.com/jbenet/go-ipfs/p2p/peer"

|

||||

)

|

||||

|

||||

// listener is an object that can accept connections. It implements Listener

|

||||

type listener struct {

|

||||

manet.Listener

|

||||

|

||||

maddr ma.Multiaddr // Local multiaddr to listen on

|

||||

local peer.ID // LocalPeer is the identity of the local Peer

|

||||

privk ic.PrivKey // private key to use to initialize secure conns

|

||||

|

||||

cg ctxgroup.ContextGroup

|

||||

}

|

||||

|

||||

func (l *listener) teardown() error {

|

||||

defer log.Debugf("listener closed: %s %s", l.local, l.maddr)

|

||||

return l.Listener.Close()

|

||||

}

|

||||

|

||||

func (l *listener) Close() error {

|

||||

log.Debugf("listener closing: %s %s", l.local, l.maddr)

|

||||

return l.cg.Close()

|

||||

}

|

||||

|

||||

func (l *listener) String() string {

|

||||

return fmt.Sprintf("<Listener %s %s>", l.local, l.maddr)

|

||||

}

|

||||

|

||||

// Accept waits for and returns the next connection to the listener.

|

||||

// Note that unfortunately this

|

||||

func (l *listener) Accept() (net.Conn, error) {

|

||||

|

||||

// listeners don't have contexts. given changes don't make sense here anymore

|

||||

// note that the parent of listener will Close, which will interrupt all io.

|

||||

// Contexts and io don't mix.

|

||||

ctx := context.Background()

|

||||

|

||||

maconn, err := l.Listener.Accept()

|

||||

if err != nil {

|

||||

return nil, err

|

||||

}

|

||||

|

||||

c, err := newSingleConn(ctx, l.local, "", maconn)

|

||||

if err != nil {

|

||||

return nil, fmt.Errorf("Error accepting connection: %v", err)

|

||||

}

|

||||

|

||||

if l.privk == nil {

|

||||

log.Warningf("listener %s listening INSECURELY!", l)

|

||||

return c, nil

|

||||

}

|

||||

sc, err := newSecureConn(ctx, l.privk, c)

|

||||

if err != nil {

|

||||

return nil, fmt.Errorf("Error securing connection: %v", err)

|

||||

}

|

||||

return sc, nil

|

||||

}

|

||||

|

||||

func (l *listener) Addr() net.Addr {

|

||||

return l.Listener.Addr()

|

||||

}

|

||||

|

||||

// Multiaddr is the local multiaddr this listener listens on.

|

||||

func (l *listener) Multiaddr() ma.Multiaddr {

|

||||

return l.maddr

|

||||

}

|

||||

|

||||

// LocalPeer is the identity of the local Peer.

|

||||

func (l *listener) LocalPeer() peer.ID {

|

||||

return l.local

|

||||

}

|

||||

|

||||

func (l *listener) Loggable() map[string]interface{} {

|

||||

return map[string]interface{}{

|

||||

"listener": map[string]interface{}{

|

||||

"peer": l.LocalPeer(),

|

||||

"address": l.Multiaddr(),

|

||||

"secure": (l.privk != nil),

|

||||

},

|

||||

}

|

||||

}

|

||||

|

||||

// Listen listens on the particular multiaddr, with the given local peer ID and private key.

|

||||

func Listen(ctx context.Context, addr ma.Multiaddr, local peer.ID, sk ic.PrivKey) (Listener, error) {

|

||||

|

||||

ml, err := manet.Listen(addr)

|

||||

if err != nil {

|

||||

return nil, fmt.Errorf("Failed to listen on %s: %s", addr, err)

|

||||

}

|

||||

|

||||

l := &listener{

|

||||

Listener: ml,

|

||||

maddr: addr,

|

||||

local: local,

|

||||

privk: sk,

|

||||

cg: ctxgroup.WithContext(ctx),

|

||||

}

|

||||

l.cg.SetTeardown(l.teardown)

|

||||

|

||||

log.Infof("swarm listening on %s", l.Multiaddr())

|

||||

log.Event(ctx, "swarmListen", l)

|

||||

return l, nil

|

||||

}

|

||||

154

net2/conn/secure_conn.go

Normal file


@@ -0,0 +1,154 @@

|

||||

package conn

|

||||

|

||||

import (

|

||||

"net"

|

||||

"time"

|

||||

|

||||

context "github.com/jbenet/go-ipfs/Godeps/_workspace/src/code.google.com/p/go.net/context"

|

||||

msgio "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-msgio"

|

||||

ma "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-multiaddr"

|

||||

|

||||

ic "github.com/jbenet/go-ipfs/p2p/crypto"

|

||||

secio "github.com/jbenet/go-ipfs/p2p/crypto/secio"

|

||||

peer "github.com/jbenet/go-ipfs/p2p/peer"

|

||||

errors "github.com/jbenet/go-ipfs/util/debugerror"

|

||||

)

|

||||

|

||||

// secureConn wraps another Conn object with an encrypted channel.

|

||||

type secureConn struct {

|

||||

|

||||

// the wrapped conn

|

||||

insecure Conn

|

||||

|

||||

// secure io (wrapping insecure)

|

||||

secure msgio.ReadWriteCloser

|

||||

|

||||

// secure Session

|

||||

session secio.Session

|

||||

}

|

||||

|

||||

// newSecureConn constructs a new secure connection wrapping an insecure one

|

||||

func newSecureConn(ctx context.Context, sk ic.PrivKey, insecure Conn) (Conn, error) {

|

||||

|

||||

if insecure == nil {

|

||||

return nil, errors.New("insecure is nil")

|

||||

}

|

||||

if insecure.LocalPeer() == "" {

|

||||

return nil, errors.New("insecure.LocalPeer() is nil")

|

||||

}

|

||||

if sk == nil {

|

||||

return nil, errors.New("private key is nil")

|

||||

}

|

||||

|

||||

// NewSession performs the secure handshake, which takes multiple RTT

|

||||

sessgen := secio.SessionGenerator{LocalID: insecure.LocalPeer(), PrivateKey: sk}

|

||||

session, err := sessgen.NewSession(ctx, insecure)

|

||||

if err != nil {

|

||||

return nil, err

|

||||

}

|

||||

|

||||

conn := &secureConn{

|

||||

insecure: insecure,

|

||||

session: session,

|

||||

secure: session.ReadWriter(),

|

||||

}

|

||||

log.Debugf("newSecureConn: %v to %v handshake success!", conn.LocalPeer(), conn.RemotePeer())

|

||||

return conn, nil

|

||||

}

|

||||

|

||||

func (c *secureConn) Close() error {

|

||||

if err := c.secure.Close(); err != nil {

|

||||

c.insecure.Close()

|

||||

return err

|

||||

}

|

||||

return c.insecure.Close()

|

||||

}

|

||||

|

||||

// ID is an identifier unique to this connection.

|

||||

func (c *secureConn) ID() string {

|

||||

return ID(c)

|

||||

}

|

||||

|

||||

func (c *secureConn) String() string {

|

||||

return String(c, "secureConn")

|

||||

}

|

||||

|

||||

func (c *secureConn) LocalAddr() net.Addr {

|

||||

return c.insecure.LocalAddr()

|

||||

}

|

||||

|

||||

func (c *secureConn) RemoteAddr() net.Addr {

|

||||

return c.insecure.RemoteAddr()

|

||||

}

|

||||

|

||||

func (c *secureConn) SetDeadline(t time.Time) error {

|

||||

return c.insecure.SetDeadline(t)

|

||||

}

|

||||

|

||||

func (c *secureConn) SetReadDeadline(t time.Time) error {

|

||||

return c.insecure.SetReadDeadline(t)

|

||||

}

|

||||

|

||||

func (c *secureConn) SetWriteDeadline(t time.Time) error {

|

||||

return c.insecure.SetWriteDeadline(t)

|

||||

}

|

||||

|

||||

// LocalMultiaddr is the Multiaddr on this side

|

||||

func (c *secureConn) LocalMultiaddr() ma.Multiaddr {

|

||||

return c.insecure.LocalMultiaddr()

|

||||

}

|

||||

|

||||

// RemoteMultiaddr is the Multiaddr on the remote side

|

||||

func (c *secureConn) RemoteMultiaddr() ma.Multiaddr {

|

||||

return c.insecure.RemoteMultiaddr()

|

||||

}

|

||||

|

||||

// LocalPeer is the Peer on this side

|

||||

func (c *secureConn) LocalPeer() peer.ID {

|

||||

return c.session.LocalPeer()

|

||||

}

|

||||

|

||||

// RemotePeer is the Peer on the remote side

|

||||

func (c *secureConn) RemotePeer() peer.ID {

|

||||

return c.session.RemotePeer()

|

||||

}

|

||||

|

||||

// LocalPrivateKey is the private key of the peer on this side

|

||||

func (c *secureConn) LocalPrivateKey() ic.PrivKey {

|

||||

return c.session.LocalPrivateKey()

|

||||

}

|

||||

|

||||

// RemotePublicKey is the public key of the peer on the remote side

|

||||

func (c *secureConn) RemotePublicKey() ic.PubKey {

|

||||

return c.session.RemotePublicKey()

|

||||

}

|

||||

|

||||

// Read reads data, net.Conn style

|

||||

func (c *secureConn) Read(buf []byte) (int, error) {

|

||||

return c.secure.Read(buf)

|

||||

}

|

||||

|

||||

// Write writes data, net.Conn style

|

||||

func (c *secureConn) Write(buf []byte) (int, error) {

|

||||

return c.secure.Write(buf)

|

||||

}

|

||||

|

||||

func (c *secureConn) NextMsgLen() (int, error) {

|

||||

return c.secure.NextMsgLen()

|

||||

}

|

||||

|

||||

// ReadMsg reads the next whole message, msgio style

|

||||

func (c *secureConn) ReadMsg() ([]byte, error) {

|

||||

return c.secure.ReadMsg()

|

||||

}

|

||||

|

||||

// WriteMsg writes one whole message, msgio style

|

||||

func (c *secureConn) WriteMsg(buf []byte) error {

|

||||

return c.secure.WriteMsg(buf)

|

||||

}

|

||||

|

||||

// ReleaseMsg releases a buffer

|

||||

func (c *secureConn) ReleaseMsg(m []byte) {

|

||||

c.secure.ReleaseMsg(m)

|

||||

}

|

||||

199

net2/conn/secure_conn_test.go

Normal file


@@ -0,0 +1,199 @@

|

||||

package conn

|

||||

|

||||

import (

|

||||

"bytes"

|

||||

"os"

|

||||

"runtime"

|

||||

"sync"

|

||||

"testing"

|

||||

"time"

|

||||

|

||||

ic "github.com/jbenet/go-ipfs/p2p/crypto"

|

||||

|

||||

context "github.com/jbenet/go-ipfs/Godeps/_workspace/src/code.google.com/p/go.net/context"

|

||||

)

|

||||

|

||||

func upgradeToSecureConn(t *testing.T, ctx context.Context, sk ic.PrivKey, c Conn) (Conn, error) {

|

||||

if c, ok := c.(*secureConn); ok {

|

||||

return c, nil

|

||||

}

|

||||

|

||||

// shouldn't happen, because dial + listen already return secure conns.

|

||||

s, err := newSecureConn(ctx, sk, c)

|

||||

if err != nil {

|

||||

return nil, err

|

||||

}

|

||||

return s, nil

|

||||

}

|

||||

|

||||

func secureHandshake(t *testing.T, ctx context.Context, sk ic.PrivKey, c Conn, done chan error) {

|

||||

_, err := upgradeToSecureConn(t, ctx, sk, c)

|

||||

done <- err

|

||||

}

|

||||

|

||||

func TestSecureSimple(t *testing.T) {

|

||||

// t.Skip("Skipping in favor of another test")

|

||||

|

||||

numMsgs := 100

|

||||

if testing.Short() {

|

||||

numMsgs = 10

|

||||

}

|

||||

|

||||

ctx := context.Background()

|

||||

c1, c2, p1, p2 := setupSingleConn(t, ctx)

|

||||

|

||||

done := make(chan error)

|

||||

go secureHandshake(t, ctx, p1.PrivKey, c1, done)

|

||||

go secureHandshake(t, ctx, p2.PrivKey, c2, done)

|

||||

|

||||

for i := 0; i < 2; i++ {

|

||||

if err := <-done; err != nil {

|

||||

t.Fatal(err)

|

||||

}

|

||||

}

|

||||

|

||||

for i := 0; i < numMsgs; i++ {

|

||||

testOneSendRecv(t, c1, c2)

|

||||

testOneSendRecv(t, c2, c1)

|

||||

}

|

||||

|

||||

c1.Close()

|

||||

c2.Close()

|

||||

}

|

||||

|

||||

func TestSecureClose(t *testing.T) {

|

||||

// t.Skip("Skipping in favor of another test")

|

||||

|

||||

ctx := context.Background()

|

||||

c1, c2, p1, p2 := setupSingleConn(t, ctx)

|

||||

|

||||

done := make(chan error)

|

||||

go secureHandshake(t, ctx, p1.PrivKey, c1, done)

|

||||

go secureHandshake(t, ctx, p2.PrivKey, c2, done)

|

||||

|

||||

for i := 0; i < 2; i++ {

|

||||

if err := <-done; err != nil {

|

||||

t.Fatal(err)

|

||||

}

|

||||

}

|

||||

|

||||

testOneSendRecv(t, c1, c2)

|

||||

|

||||

c1.Close()

|

||||

testNotOneSendRecv(t, c1, c2)

|

||||

|

||||

c2.Close()

|

||||

testNotOneSendRecv(t, c1, c2)

|

||||

testNotOneSendRecv(t, c2, c1)

|

||||

|

||||

}

|

||||

|

||||

func TestSecureCancelHandshake(t *testing.T) {

|

||||

// t.Skip("Skipping in favor of another test")

|

||||

|

||||

ctx, cancel := context.WithCancel(context.Background())

|

||||

c1, c2, p1, p2 := setupSingleConn(t, ctx)

|

||||

|

||||

done := make(chan error)

|

||||

go secureHandshake(t, ctx, p1.PrivKey, c1, done)

|

||||

<-time.After(time.Millisecond)

|

||||

cancel() // cancel ctx

|

||||

go secureHandshake(t, ctx, p2.PrivKey, c2, done)

|

||||

|

||||

for i := 0; i < 2; i++ {

|

||||

if err := <-done; err == nil {

|

||||

t.Error("cancel should've errored out")

|

||||

}

|

||||

}

|

||||

}
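The cancellation test above relies on a blocked handshake returning an error once its context is cancelled. A minimal sketch of that pattern follows, assuming the standard library `context` package rather than the vendored `go.net/context`; `handshake`, `runCancelled`, and `isCancelled` are hypothetical names and do not appear in the diff.

```go
package main

import (
	"context"
	"errors"
)

// handshake is a hypothetical stand-in for a blocking handshake.
// It returns nil when the peer signals readiness, or the context's
// error if the context is cancelled first.
func handshake(ctx context.Context, peerReady <-chan struct{}) error {
	select {
	case <-peerReady:
		return nil
	case <-ctx.Done():
		return ctx.Err()
	}
}

// runCancelled starts a handshake whose peer never answers (a nil
// channel blocks forever in a select), cancels the context, and
// returns whatever error the handshake reports.
func runCancelled() error {
	ctx, cancel := context.WithCancel(context.Background())
	done := make(chan error, 1)
	go func() { done <- handshake(ctx, nil) }()
	cancel()
	return <-done
}

// isCancelled reports whether err was caused by context cancellation.
func isCancelled(err error) bool {
	return errors.Is(err, context.Canceled)
}
```

Calling `isCancelled(runCancelled())` should report true, which is the same property the test asserts with `err == nil` being a failure.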

|

||||

|

||||

func TestSecureHandshakeFailsWithWrongKeys(t *testing.T) {

|

||||

// t.Skip("Skipping in favor of another test")

|

||||

|

||||

ctx, cancel := context.WithCancel(context.Background())

|

||||

defer cancel()

|

||||

c1, c2, p1, p2 := setupSingleConn(t, ctx)

|

||||

|

||||

done := make(chan error)

|

||||

go secureHandshake(t, ctx, p2.PrivKey, c1, done)

|

||||

go secureHandshake(t, ctx, p1.PrivKey, c2, done)

|

||||

|

||||

for i := 0; i < 2; i++ {

|

||||

if err := <-done; err == nil {

|

||||

t.Fatal("wrong keys should've errored out.")

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

func TestSecureCloseLeak(t *testing.T) {

|

||||

// t.Skip("Skipping in favor of another test")

|

||||

|

||||

if testing.Short() {

|

||||

t.SkipNow()

|

||||

}

|

||||

if os.Getenv("TRAVIS") == "true" {

|

||||

t.Skip("this doesn't work well on travis")

|

||||

}

|

||||

|

||||

runPair := func(c1, c2 Conn, num int) {

|

||||

log.Debugf("runPair %d", num)

|

||||

|

||||

for i := 0; i < num; i++ {

|

||||

log.Debugf("runPair iteration %d", i)

|

||||

b1 := []byte("beep")

|

||||

c1.WriteMsg(b1)

|

||||

b2, err := c2.ReadMsg()

|

||||

if err != nil {

|

||||

panic(err)

|

||||

}

|

||||

if !bytes.Equal(b1, b2) {

|

||||

panic("bytes not equal")

|

||||

}

|

||||

|

||||

b2 = []byte("beep")

|

||||

c2.WriteMsg(b2)

|

||||

b1, err = c1.ReadMsg()

|

||||

if err != nil {

|

||||

panic(err)

|

||||

}

|

||||

if !bytes.Equal(b1, b2) {

|

||||

panic("bytes not equal")

|

||||

}

|

||||

|

||||

<-time.After(time.Microsecond * 5)

|

||||

}

|

||||

}

|

||||

|

||||

var cons = 5

|

||||

var msgs = 50

|

||||

log.Debugf("Running %d connections * %d msgs.\n", cons, msgs)

|

||||

|

||||

var wg sync.WaitGroup

|

||||

for i := 0; i < cons; i++ {

|

||||

wg.Add(1)

|

||||

|

||||

ctx, cancel := context.WithCancel(context.Background())

|

||||

c1, c2, _, _ := setupSecureConn(t, ctx)

|

||||

go func(c1, c2 Conn) {

|

||||

|

||||

defer func() {

|

||||

c1.Close()

|

||||

c2.Close()

|

||||

cancel()

|

||||

wg.Done()

|

||||

}()

|

||||

|

||||

runPair(c1, c2, msgs)

|

||||

}(c1, c2)

|

||||

}

|

||||

|

||||

log.Debugf("Waiting...\n")

|

||||

wg.Wait()

|

||||

// done!

|

||||

|

||||

<-time.After(time.Millisecond * 150)

|

||||

if runtime.NumGoroutine() > 20 {

|

||||

// panic("uncomment me to debug")

|

||||

t.Fatal("leaking goroutines:", runtime.NumGoroutine())

|

||||

}

|

||||

}
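The leak test above sleeps for a fixed 150ms and then compares `runtime.NumGoroutine()` against a hard-coded threshold of 20. One possible alternative, sketched here under the assumption that a relative baseline is acceptable, is to record the count before the work starts and poll until it settles. `goroutinesSettle`, `runWorkers`, and `checkNoLeak` are hypothetical helpers, not part of the diff.

```go
package main

import (
	"runtime"
	"sync"
	"time"
)

// goroutinesSettle polls until the goroutine count drops back to the
// baseline or the timeout elapses. It returns true if the count settled.
func goroutinesSettle(baseline int, timeout time.Duration) bool {
	deadline := time.Now().Add(timeout)
	for {
		if runtime.NumGoroutine() <= baseline {
			return true
		}
		if time.Now().After(deadline) {
			return false
		}
		time.Sleep(time.Millisecond)
	}
}

// runWorkers starts n short-lived goroutines and waits for all of them
// to signal completion.
func runWorkers(n int) {
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() { defer wg.Done() }()
	}
	wg.Wait()
}

// checkNoLeak records a baseline, runs the workers, and reports whether
// the goroutine count returned to the baseline within two seconds.
func checkNoLeak(n int) bool {
	baseline := runtime.NumGoroutine()
	runWorkers(n)
	return goroutinesSettle(baseline, 2*time.Second)
}
```

Polling avoids depending on a single sleep duration, at the cost of a slower failure when goroutines really do leak.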

|

||||

133

net2/interface.go

Normal file

@@ -0,0 +1,133 @@

|

||||

package net

|

||||

|

||||

import (

|

||||

"io"

|

||||

|

||||

conn "github.com/jbenet/go-ipfs/p2p/net2/conn"

|

||||

peer "github.com/jbenet/go-ipfs/p2p/peer"

|

||||

|

||||

context "github.com/jbenet/go-ipfs/Godeps/_workspace/src/code.google.com/p/go.net/context"

|

||||

ctxgroup "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-ctxgroup"

|

||||

ma "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-multiaddr"

|

||||

)

|

||||

|

||||

// MessageSizeMax is a soft (recommended) maximum for network messages.

|

||||

// One can write more, as the interface is a stream. But it is useful

|

||||

// to bunch it up into multiple read/writes when the whole message is

|

||||

// a single, large serialized object.

|

||||

const MessageSizeMax = 2 << 22 // 8 MiB (2 << 22 == 8388608 bytes; 4 MiB would be 1 << 22)
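The comment above suggests splitting one large serialized object into several writes. A minimal sketch of that idea follows; `writeChunked` and `countingWriter` are hypothetical names introduced for illustration, and the chunk limit is passed in as a parameter rather than taken from the package constant.

```go
package main

import (
	"errors"
	"io"
)

// writeChunked writes data to w in pieces of at most limit bytes and
// returns the number of Write calls it made.
func writeChunked(w io.Writer, data []byte, limit int) (int, error) {
	if limit <= 0 {
		return 0, errors.New("limit must be positive")
	}
	calls := 0
	for len(data) > 0 {
		n := len(data)
		if n > limit {
			n = limit
		}
		if _, err := w.Write(data[:n]); err != nil {
			return calls, err
		}
		data = data[n:]
		calls++
	}
	return calls, nil
}

// countingWriter is a test double that only records how many bytes
// it has been asked to write.
type countingWriter struct{ total int }

func (c *countingWriter) Write(p []byte) (int, error) {
	c.total += len(p)
	return len(p), nil
}
```

With a limit of 4, a 10-byte payload goes out as three writes of 4, 4, and 2 bytes.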

|

||||

|

||||

// Stream represents a bidirectional channel between two agents in

|

||||

// the IPFS network. "agent" is as granular as desired, potentially

|

||||

// being a "request -> reply" pair, or whole protocols.

|

||||

// Streams are backed by SPDY streams underneath the hood.

|

||||

type Stream interface {

|

||||

io.Reader

|

||||

io.Writer

|

||||

io.Closer

|

||||

|

||||

// Conn returns the connection this stream is part of.

|

||||

Conn() Conn

|

||||

}

|

||||

|

||||

// StreamHandler is the type of function used to listen for

|

||||

// streams opened by the remote side.

|

||||

type StreamHandler func(Stream)

|

||||

|

||||

// Conn is a connection to a remote peer. It multiplexes streams.

|

||||

// Usually there is no need to use a Conn directly, but it may

|

||||

// be useful to get information about the peer on the other side:

|

||||

// stream.Conn().RemotePeer()

|

||||

type Conn interface {

|

||||

conn.PeerConn

|

||||

|

||||

// NewStream constructs a new Stream over this conn.

|

||||

NewStream() (Stream, error)

|

||||

}

|

||||

|

||||

// ConnHandler is the type of function used to listen for

|

||||

// connections opened by the remote side.

|

||||

type ConnHandler func(Conn)

|

||||

|

||||

// Network is the interface used to connect to the outside world.

|

||||

// It dials and listens for connections. It uses a Swarm to pool

|

||||

// connections (see swarm pkg, and peerstream.Swarm). Connections

|

||||

// are encrypted with a TLS-like protocol.

|

||||

type Network interface {

|

||||

Dialer

|

||||

io.Closer

|

||||

|

||||

// SetStreamHandler sets the handler for new streams opened by the

|

||||

// remote side. This operation is threadsafe.

|

||||

SetStreamHandler(StreamHandler)

|

||||

|

||||

// SetConnHandler sets the handler for new connections opened by the

|

||||

// remote side. This operation is threadsafe.

|

||||

SetConnHandler(ConnHandler)

|

||||

|

||||

// NewStream returns a new stream to given peer p.

|

||||

// If there is no connection to p, attempts to create one.

|

||||

NewStream(peer.ID) (Stream, error)

|

||||

|

||||

// ListenAddresses returns a list of addresses at which this network listens.

|

||||

ListenAddresses() []ma.Multiaddr

|

||||

|

||||

// InterfaceListenAddresses returns a list of addresses at which this network

|

||||

// listens. It expands "any interface" addresses (/ip4/0.0.0.0, /ip6/::) to

|

||||

// use the known local interfaces.

|

||||

InterfaceListenAddresses() ([]ma.Multiaddr, error)

|

||||

|

||||

// CtxGroup returns the network's contextGroup

|

||||

CtxGroup() ctxgroup.ContextGroup

|

||||

}

|

||||

|

||||

// Dialer represents a service that can dial out to peers

|

||||

// (this is usually just a Network, but other services may not need the whole

|

||||

// stack, and thus it becomes easier to mock)

|

||||

type Dialer interface {

|

||||

|

||||

// Peerstore returns the internal peerstore

|

||||

// This is useful to tell the dialer about a new address for a peer.

|

||||

// Or use one of the public keys found out over the network.

|

||||

Peerstore() peer.Peerstore

|

||||

|

||||

// LocalPeer returns the local peer associated with this network

|

||||

LocalPeer() peer.ID

|

||||

|

||||

// DialPeer establishes a connection to a given peer

|

||||

DialPeer(context.Context, peer.ID) (Conn, error)

|

||||

|

||||

// ClosePeer closes the connection to a given peer

|

||||

ClosePeer(peer.ID) error

|

||||

|

||||

// Connectedness returns a state signaling connection capabilities

|

||||

Connectedness(peer.ID) Connectedness

|

||||

|

||||

// Peers returns the peers connected

|

||||

Peers() []peer.ID

|

||||

|

||||

// Conns returns the connections in this Network

|

||||

Conns() []Conn

|

||||

|

||||

// ConnsToPeer returns the connections in this Network for given peer.

|

||||

ConnsToPeer(p peer.ID) []Conn

|

||||

}

|

||||

|

||||

// Connectedness signals the capacity for a connection with a given node.

|

||||

// It is used to signal to services and other peers whether a node is reachable.

|

||||

type Connectedness int

|

||||

|

||||

const (

|

||||

// NotConnected means no connection to peer, and no extra information (default)

|

||||

NotConnected Connectedness = iota

|

||||

|

||||

// Connected means has an open, live connection to peer

|

||||

Connected

|

||||

|

||||

// CanConnect means recently connected to peer, terminated gracefully

|

||||

CanConnect

|

||||

|

||||

// CannotConnect means recently attempted connecting but failed to connect.

|

||||

// (should signal "made effort, failed")

|

||||

CannotConnect

|

||||

)
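Because `Connectedness` is a plain `int` enum, log output shows bare numbers unless a `String` method is added. The sketch below redeclares the type so it compiles on its own; the `String` method and the `shouldDial` policy are hypothetical additions, not part of the diff.

```go
package main

// Connectedness mirrors the enum above so this sketch is self-contained.
type Connectedness int

const (
	NotConnected Connectedness = iota
	Connected
	CanConnect
	CannotConnect
)

// String returns a readable name for the state (hypothetical helper).
func (c Connectedness) String() string {
	switch c {
	case NotConnected:
		return "NotConnected"
	case Connected:
		return "Connected"
	case CanConnect:
		return "CanConnect"
	case CannotConnect:
		return "CannotConnect"
	default:
		return "Unknown"
	}
}

// shouldDial is one possible policy, assumed for illustration: dial
// only when there is no live connection and no recent failure.
func shouldDial(c Connectedness) bool {
	return c == NotConnected || c == CanConnect
}
```

Since `NotConnected` is the zero value, an uninitialized `Connectedness` reads as "no connection, no extra information", matching the "(default)" note in the comment.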

|

||||

98

net2/mock/interface.go

Normal file

@@ -0,0 +1,98 @@

|

||||

// Package mocknet provides a mock net.Network to test with.

|

||||

//

|

||||

// - a Mocknet has many inet.Networks

|

||||

// - a Mocknet has many Links

|

||||

// - a Link joins two inet.Networks

|

||||

// - inet.Conns and inet.Streams are created by inet.Networks

|

||||

package mocknet

|

||||

|

||||

import (

|

||||

"io"

|

||||

"time"

|

||||

|

||||

ic "github.com/jbenet/go-ipfs/p2p/crypto"

|

||||

inet "github.com/jbenet/go-ipfs/p2p/net2"

|

||||

peer "github.com/jbenet/go-ipfs/p2p/peer"

|

||||

|

||||

ma "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-multiaddr"

|

||||

)

|

||||

|

||||

type Mocknet interface {

|

||||

|

||||

// GenPeer generates a peer and its inet.Network in the Mocknet

|

||||

GenPeer() (inet.Network, error)

|

||||

|

||||

// AddPeer adds an existing peer. we need both a privkey and addr.

|

||||

// ID is derived from PrivKey

|

||||

AddPeer(ic.PrivKey, ma.Multiaddr) (inet.Network, error)

|

||||

|

||||

// retrieve things (with randomized iteration order)

|

||||

Peers() []peer.ID

|

||||

Net(peer.ID) inet.Network

|

||||

Nets() []inet.Network

|

||||

Links() LinkMap

|

||||

LinksBetweenPeers(a, b peer.ID) []Link

|

||||

LinksBetweenNets(a, b inet.Network) []Link

|

||||

|

||||

// Links are the **ability to connect**.

|

||||

// think of Links as the physical medium.

|

||||

// For p1 and p2 to connect, a link must exist between them.

|

||||

// (this makes it possible to test dial failures, and

|

||||

// things like relaying traffic)

|

||||

LinkPeers(peer.ID, peer.ID) (Link, error)

|

||||

LinkNets(inet.Network, inet.Network) (Link, error)

|

||||

Unlink(Link) error

|

||||

UnlinkPeers(peer.ID, peer.ID) error

|

||||

UnlinkNets(inet.Network, inet.Network) error

|

||||

|

||||

// LinkDefaults are the default options that govern links

|

||||

// if they do not have their own option set.

|

||||

SetLinkDefaults(LinkOptions)

|

||||

LinkDefaults() LinkOptions

|

||||

|

||||

// Connections are the usual. Connecting means Dialing.

|

||||

// **to succeed, peers must be linked beforehand**

|

||||

ConnectPeers(peer.ID, peer.ID) (inet.Conn, error)

|

||||

ConnectNets(inet.Network, inet.Network) (inet.Conn, error)

|

||||

DisconnectPeers(peer.ID, peer.ID) error

|

||||

DisconnectNets(inet.Network, inet.Network) error

|

||||

}
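The interface above separates linking (the ability to connect) from connecting (dialing). The toy model below is only a sketch of that rule and does not use the mocknet package; `toyNet`, `pair`, and `errNoLink` are hypothetical names.

```go
package main

import "errors"

// pair is an unordered pair of peer names, stored in sorted order.
type pair struct{ a, b string }

func key(a, b string) pair {
	if a > b {
		a, b = b, a
	}
	return pair{a, b}
}

// toyNet tracks which peers are linked and which are connected.
type toyNet struct {
	links map[pair]bool
	conns map[pair]bool
}

func newToyNet() *toyNet {
	return &toyNet{links: map[pair]bool{}, conns: map[pair]bool{}}
}

var errNoLink = errors.New("no link between peers")

// link records that a and b are able to connect.
func (n *toyNet) link(a, b string) { n.links[key(a, b)] = true }

// connect succeeds only if the two peers were linked beforehand.
func (n *toyNet) connect(a, b string) error {
	if !n.links[key(a, b)] {
		return errNoLink
	}
	n.conns[key(a, b)] = true
	return nil
}
```

Leaving a pair unlinked is what makes a dial failure reproducible in a test, which is the use case the comments in the interface mention.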

|

||||

|

||||

// LinkOptions are used to change aspects of the links.

|

||||

// Sorry, but they don't work yet :(

|

||||

type LinkOptions struct {

|

||||

Latency time.Duration

|

||||

Bandwidth int // in bytes-per-second

|

||||

// we can make these values distributions down the road.

|

||||

}

|

||||

|

||||

// Link represents the **possibility** of a connection between

|

||||

// two peers. Think of it like physical network links. Without

|

||||

// them, the peers can try and try but they won't be able to

|

||||

// connect. This allows constructing topologies where specific

|

||||

// nodes cannot talk to each other directly. :)

|

||||

type Link interface {

|

||||

Networks() []inet.Network

|

||||

Peers() []peer.ID

|

||||

|

||||

SetOptions(LinkOptions)

|

||||

Options() LinkOptions

|

||||

|

||||

// Metrics() Metrics

|

||||

}

|

||||

|

||||

// LinkMap is a 3D map to give us an easy way to track links.

|

||||

// (wow, much map. so data structure. how compose. ahhh pointer)

|

||||

type LinkMap map[string]map[string]map[Link]struct{}
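A triple-nested map needs its inner maps created lazily on insert, while reads can index through nil maps safely. The sketch below shows both, assuming the two string keys identify peers; `linkID` stands in for the `Link` interface, and `add` and `between` are hypothetical helpers.

```go
package main

// linkID is a placeholder for the Link interface in this sketch.
type linkID string

// linkMap has the same shape as LinkMap: peer -> peer -> set of links.
type linkMap map[string]map[string]map[linkID]struct{}

// add inserts link l between a and b, creating inner maps on demand.
func (m linkMap) add(a, b string, l linkID) {
	if m[a] == nil {
		m[a] = map[string]map[linkID]struct{}{}
	}
	if m[a][b] == nil {
		m[a][b] = map[linkID]struct{}{}
	}
	m[a][b][l] = struct{}{}
}

// between counts the links recorded from a to b. Indexing a nil inner
// map yields a nil map, and len of a nil map is 0, so no checks are needed.
func (m linkMap) between(a, b string) int {
	return len(m[a][b])
}
```

Note that this sketch stores one direction only; a symmetric table would call `add` twice with the keys swapped.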

|

||||

|

||||

// Printer lets you inspect things :)

|

||||

type Printer interface {

|

||||

// MocknetLinks shows the entire Mocknet's link table :)

|

||||

MocknetLinks(mn Mocknet)

|

||||

NetworkConns(ni inet.Network)

|

||||

}

|

||||

|

||||

// PrinterTo returns a Printer ready to write to w.

|

||||

func PrinterTo(w io.Writer) Printer {

|

||||

return &printer{w}

|

||||

}

|

||||

63

net2/mock/mock.go

Normal file

@@ -0,0 +1,63 @@

|

||||

package mocknet

|

||||

|

||||

import (

|

||||

eventlog "github.com/jbenet/go-ipfs/util/eventlog"

|

||||

|

||||

context "github.com/jbenet/go-ipfs/Godeps/_workspace/src/code.google.com/p/go.net/context"

|

||||

)

|

||||

|

||||

var log = eventlog.Logger("mocknet")

|

||||

|

||||

// WithNPeers constructs a Mocknet with N peers.

|

||||

func WithNPeers(ctx context.Context, n int) (Mocknet, error) {

|

||||

m := New(ctx)

|

||||

for i := 0; i < n; i++ {

|

||||

if _, err := m.GenPeer(); err != nil {

|

||||

return nil, err

|

||||

}

|

||||

}

|

||||

return m, nil

|

||||

}

|

||||

|

||||

// FullMeshLinked constructs a Mocknet with full mesh of Links.

|

||||

// This means that all the peers **can** connect to each other

|

||||

// (not that they already are connected. you can use m.ConnectAll())

|

||||

func FullMeshLinked(ctx context.Context, n int) (Mocknet, error) {

|

||||

m, err := WithNPeers(ctx, n)

|

||||

if err != nil {

|

||||

return nil, err

|

||||

}

|

||||

|

||||

nets := m.Nets()

|

||||

for _, n1 := range nets {

|

||||

for _, n2 := range nets {

|

||||

// yes, even self.

|

||||

if _, err := m.LinkNets(n1, n2); err != nil {

|

||||

return nil, err

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

return m, nil

|

||||

}

|

||||

|

||||

// FullMeshConnected constructs a Mocknet with full mesh of Connections.

|

||||

// This means that all the peers have dialed and are ready to talk to

|

||||

// each other.

|

||||

func FullMeshConnected(ctx context.Context, n int) (Mocknet, error) {

|

||||

m, err := FullMeshLinked(ctx, n)

|

||||

if err != nil {

|

||||

return nil, err

|

||||

}

|

||||

|

||||

nets := m.Nets()

|

||||

for _, n1 := range nets {

|

||||

for _, n2 := range nets {

|

||||

if _, err := m.ConnectNets(n1, n2); err != nil {

|

||||

return nil, err

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

return m, nil

|

||||

}

|

||||

120

net2/mock/mock_conn.go

Normal file

@@ -0,0 +1,120 @@

|

||||

package mocknet

|

||||

|

||||

import (

|

||||

"container/list"

|

||||

"sync"

|

||||

|

||||

ic "github.com/jbenet/go-ipfs/p2p/crypto"

|

||||

inet "github.com/jbenet/go-ipfs/p2p/net2"

|

||||

peer "github.com/jbenet/go-ipfs/p2p/peer"

|

||||

|

||||

ma "github.com/jbenet/go-ipfs/Godeps/_workspace/src/github.com/jbenet/go-multiaddr"

|

||||

)

|

||||

|

||||

// conn represents one side's perspective of a

|

||||

// live connection between two peers.

|

||||

// it goes over a particular link.

|

||||

type conn struct {

|

||||

local peer.ID

|

||||

remote peer.ID

|

||||

|

||||

localAddr ma.Multiaddr

|

||||

remoteAddr ma.Multiaddr

|

||||

|

||||

localPrivKey ic.PrivKey

|

||||

remotePubKey ic.PubKey

|

||||

|

||||

net *peernet

|

||||

link *link

|

||||

rconn *conn // counterpart

|

||||

streams list.List

|

||||

|

||||

sync.RWMutex

|

||||

}

|

||||

|

||||

func (c *conn) Close() error {

|

||||

for _, s := range c.allStreams() {

|

||||

s.Close()

|

||||

}

|

||||

c.net.removeConn(c)

|

||||

return nil

|

||||

}

|

||||

|

||||

func (c *conn) addStream(s *stream) {

|

||||

c.Lock()

|

||||

s.conn = c

|

||||

c.streams.PushBack(s)

|

||||

c.Unlock()

|

||||

}

|

||||

|

||||

func (c *conn) removeStream(s *stream) {

|

||||

c.Lock()

|

||||

defer c.Unlock()

|

||||

for e := c.streams.Front(); e != nil; e = e.Next() {

|

||||

if s == e.Value {

|

||||

c.streams.Remove(e)

|

||||

return

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

func (c *conn) allStreams() []inet.Stream {

|

||||

c.RLock()

|

||||

defer c.RUnlock()

|

||||

|

||||

strs := make([]inet.Stream, 0, c.streams.Len())

|

||||

for e := c.streams.Front(); e != nil; e = e.Next() {

|

||||

s := e.Value.(*stream)

|

||||

strs = append(strs, s)

|

||||

}

|

||||

return strs

|

||||

}
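`Close` iterates over the slice returned by `allStreams` rather than over the list itself. Presumably this is so that closing a stream can call back into `removeStream`, which takes the write lock, without deadlocking or mutating the list mid-iteration. The sketch below shows that snapshot-then-act pattern with plain strings; `registry` and its methods are hypothetical.

```go
package main

import (
	"container/list"
	"sync"
)

// registry holds items in a list guarded by an embedded RWMutex.
type registry struct {
	sync.RWMutex
	items list.List // the zero value is an empty, usable list
}

func (r *registry) add(v string) {
	r.Lock()
	r.items.PushBack(v)
	r.Unlock()
}

// remove deletes the first element equal to v, if any.
func (r *registry) remove(v string) {
	r.Lock()
	defer r.Unlock()
	for e := r.items.Front(); e != nil; e = e.Next() {
		if e.Value == v {
			r.items.Remove(e)
			return
		}
	}
}

// snapshot copies the items into a slice under the read lock.
func (r *registry) snapshot() []string {
	r.RLock()
	defer r.RUnlock()
	out := make([]string, 0, r.items.Len())
	for e := r.items.Front(); e != nil; e = e.Next() {
		out = append(out, e.Value.(string))
	}
	return out
}

// closeAll works on a snapshot, so remove can take the write lock
// for each item without holding any lock across the callback.
func (r *registry) closeAll(closeFn func(string)) {
	for _, v := range r.snapshot() {
		closeFn(v)
		r.remove(v)
	}
}
```

The trade-off is that an item added after the snapshot is taken will not be visited by that `closeAll` call.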

|

||||

|

||||

func (c *conn) remoteOpenedStream(s *stream) {

|

||||

c.addStream(s)

|

||||

c.net.handleNewStream(s)

|

||||

}

|

||||

|

||||

func (c *conn) openStream() *stream {

|

||||

sl, sr := c.link.newStreamPair()

|

||||

c.addStream(sl)

|

||||

c.rconn.remoteOpenedStream(sr)

|

||||

return sl

|

||||

}

|

||||

|

||||

func (c *conn) NewStream() (inet.Stream, error) {

|

||||

log.Debugf("Conn.NewStream: %s --> %s", c.local, c.remote)

|

||||

|

||||

s := c.openStream()

|

||||

return s, nil

|

||||

}

|

||||

|

||||

// LocalMultiaddr is the Multiaddr on this side

|

||||

func (c *conn) LocalMultiaddr() ma.Multiaddr {

|

||||

return c.localAddr

|

||||

}

|

||||

|

||||

// LocalPeer is the Peer on our side of the connection

|

||||

func (c *conn) LocalPeer() peer.ID {

|

||||

return c.local

|

||||

}

|

||||

|

||||

// LocalPrivateKey is the private key of the peer on our side.

|

||||

func (c *conn) LocalPrivateKey() ic.PrivKey {

|

||||

return c.localPrivKey

|

||||

}

|

||||

|

||||

// RemoteMultiaddr is the Multiaddr on the remote side

|

||||

func (c *conn) RemoteMultiaddr() ma.Multiaddr {

|

||||

return c.remoteAddr

|

||||

}

|

||||

|

||||

// RemotePeer is the Peer on the remote side

|

||||

func (c *conn) RemotePeer() peer.ID {

|

||||

return c.remote

|

||||

}

|

||||

|

||||

// RemotePublicKey is the public key of the peer on the remote side.

|

||||

func (c *conn) RemotePublicKey() ic.PubKey {

|

||||

return c.remotePubKey

|

||||

}

|

||||

93

net2/mock/mock_link.go

Normal file

@@ -0,0 +1,93 @@

|

||||

package mocknet

|

||||

|

||||

import (

|

||||

"io"

|

||||

"sync"

|

||||

|

||||

inet "github.com/jbenet/go-ipfs/p2p/net2"

|

||||

peer "github.com/jbenet/go-ipfs/p2p/peer"

|

||||

)

|

||||

|

||||

// link implements mocknet.Link

|

||||

// and, for simplicity, inet.Conn

|

||||

type link struct {

|

||||

mock *mocknet

|

||||

nets []*peernet

|

||||

opts LinkOptions

|

||||

|

||||