English | 简体中文

The computation process of RNN operator

1. Understanding of RNN

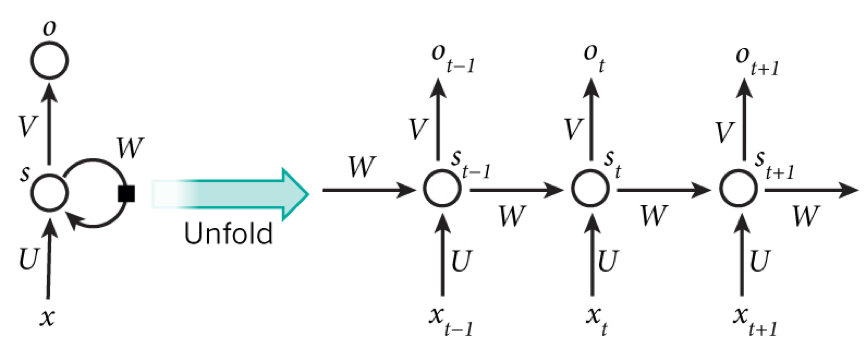

An RNN (recurrent neural network) consists of an input layer, a hidden layer and an output layer, and is specialized for processing sequential data.

Paddle official documentation: https://www.paddlepaddle.org.cn/documentation/docs/zh/api/paddle/nn/RNN_cn.html#rnn

Paddle source code implementation: https://github.com/PaddlePaddle/Paddle/blob/develop/paddle/fluid/operators/rnn_op.h#L812

2. How to compute RNN

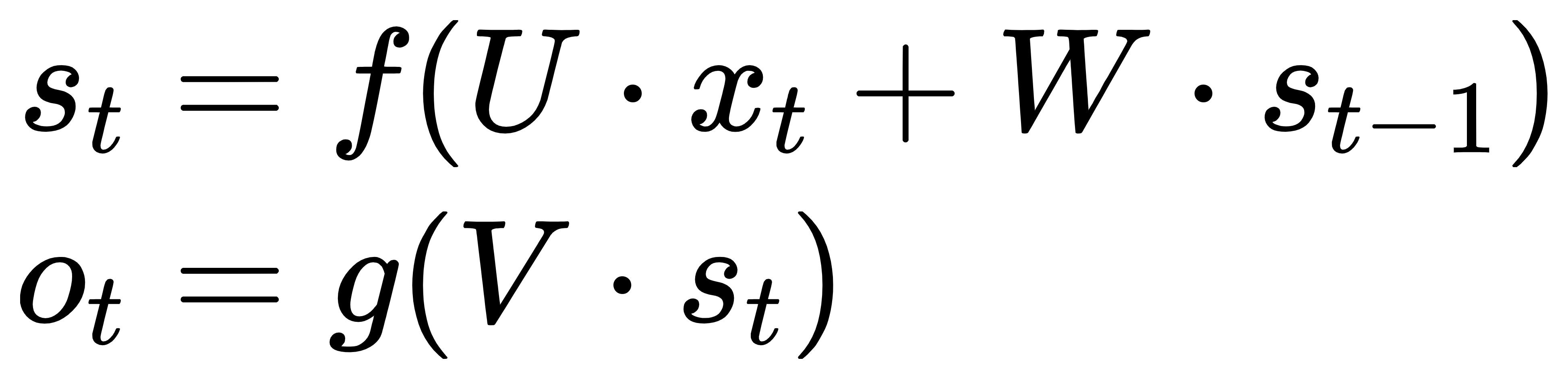

At moment $t$, the input layer is $X_t$, the hidden layer is $S_t$, and the output layer is $O_t$. As the picture above shows, $S_t$ is not determined by $X_t$ alone; it also depends on $S_{t-1}$, the hidden state of the previous moment.
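For reference, this recurrence is commonly written in the textbook form below, with $X_t$ the input, $S_t$ the hidden state and $O_t$ the output at moment $t$. The weight matrices $U$, $W$, $V$ and the activation functions $f$, $g$ are names assumed here for illustration; they are not taken from the figure.

$$
S_t = f(U X_t + W S_{t-1}), \qquad O_t = g(V S_t)
$$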

3. RNN operator implementation in pdjs

Because RNNs suffer from the vanishing gradient problem and therefore cannot capture long-range contextual information, CRNN uses LSTM (Long Short-Term Memory), a special kind of RNN that can preserve long-term dependencies.

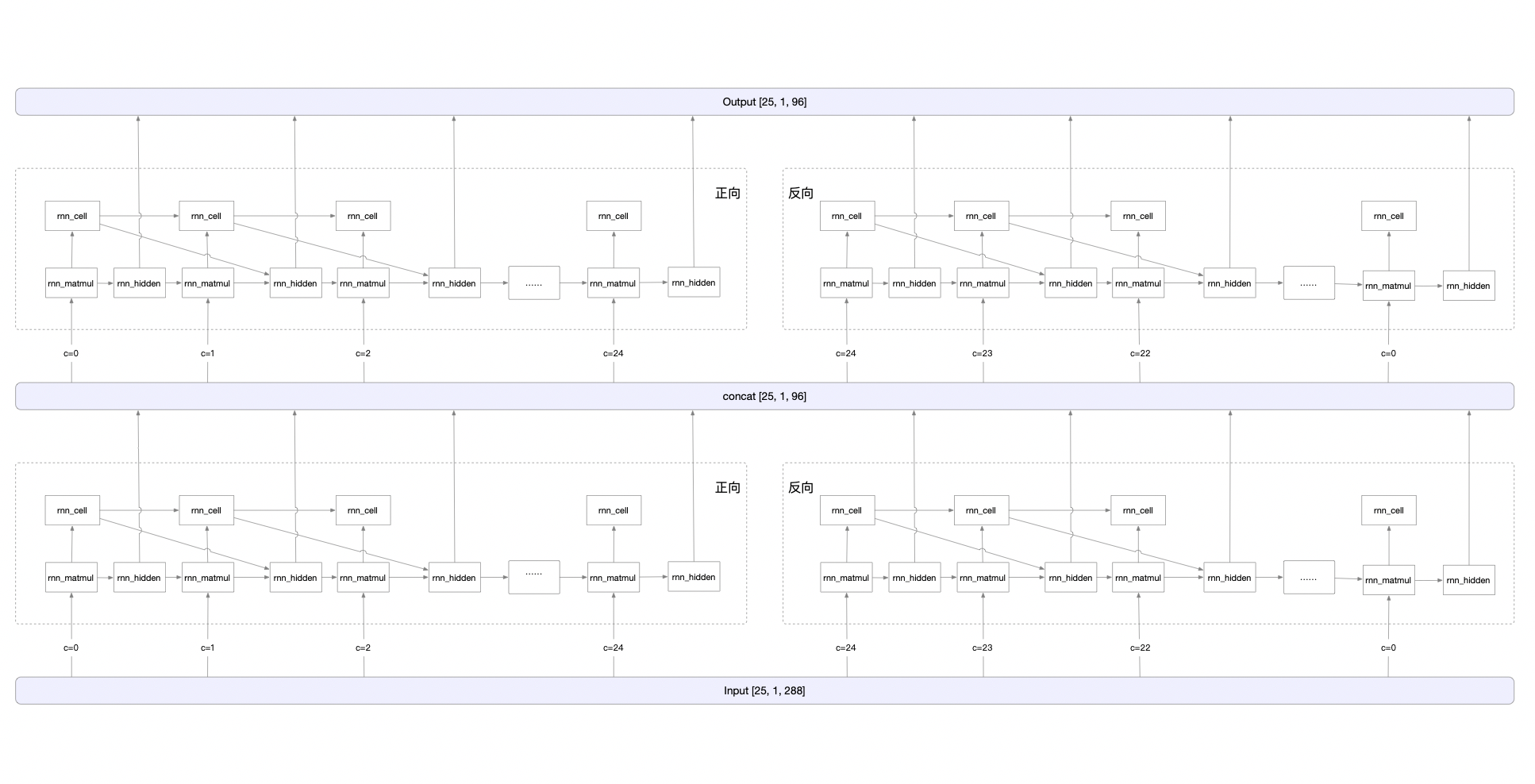

In an image-based sequence, context from both directions is useful and complementary. Since an LSTM is unidirectional, two LSTMs, one forward and one backward, are combined into a bidirectional LSTM. In addition, multiple bidirectional LSTM layers can be stacked. The ch_PP-OCRv2_rec_infer recognition model uses a two-layer bidirectional LSTM structure. The calculation process is shown as follows.
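The bidirectional idea can be sketched in a few lines. This is an illustration only, not the pdjs implementation: `toyStep` is a hypothetical scalar stand-in for an LSTM cell, and the sketch assumes the forward and backward outputs are concatenated per time step, which is consistent with the output width of 96 = 2 × 48 in the example operator further down.

```javascript
// Toy recurrent step (hypothetical stand-in for an LSTM cell, scalar state).
function toyStep(x, hPrev) {
  return Math.tanh(0.5 * x + 0.5 * hPrev);
}

// Run the step over the sequence in one direction. The result is indexed
// by the original time position, whichever direction was traversed.
function runDirection(seq, step, reverse) {
  const out = new Array(seq.length);
  let h = 0; // initial hidden state
  for (let k = 0; k < seq.length; k++) {
    const t = reverse ? seq.length - 1 - k : k;
    h = step(seq[t], h);
    out[t] = h;
  }
  return out;
}

// Bidirectional layer: pair forward and backward outputs per time step.
function bidirectional(seq, step) {
  const fwd = runDirection(seq, step, false);
  const bwd = runDirection(seq, step, true);
  return seq.map((_, t) => [fwd[t], bwd[t]]);
}

const out = bidirectional([1, 2, 3], toyStep);
console.log(out.length, out[0].length); // 3 2
```

Each time step now carries two values instead of one, so a second stacked layer sees an input twice as wide as the hidden size.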

Take the rnn operator of the ch_ppocr_mobile_v2.0_rec_infer model as an example:

    {
      Attr: {
        mode: 'LSTM'
        // Whether bidirectional, if true, it is necessary to traverse both forward and reverse.
        is_bidirec: true
        // Number of hidden layers, representing the number of loops.
        num_layers: 2
      }
      Input: [
        transpose_1.tmp_0[25, 1, 288]
      ]
      PreState: [
        fill_constant_batch_size_like_0.tmp_0[4, 1, 48],
        fill_constant_batch_size_like_1.tmp_0[4, 1, 48]
      ]
      WeightList: [
        lstm_cell_0.w_0[192, 288], lstm_cell_0.w_1[192, 48],
        lstm_cell_1.w_0[192, 288], lstm_cell_1.w_1[192, 48],
        lstm_cell_2.w_0[192, 96], lstm_cell_2.w_1[192, 48],
        lstm_cell_3.w_0[192, 96], lstm_cell_3.w_1[192, 48],
        lstm_cell_0.b_0[192], lstm_cell_0.b_1[192],
        lstm_cell_1.b_0[192], lstm_cell_1.b_1[192],
        lstm_cell_2.b_0[192], lstm_cell_2.b_1[192],
        lstm_cell_3.b_0[192], lstm_cell_3.b_1[192]
      ]
      Output: [
        lstm_0.tmp_0[25, 1, 96]
      ]
    }
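The shapes in this operator follow from a few numbers, and the sketch below re-derives them as a sanity check. It assumes four LSTM gates per cell (hence 192 = 4 × 48) and that cells 0 and 1 belong to the first layer and cells 2 and 3 to the second; the function name `expectedWeightShapes` and that cell-to-layer mapping are illustrative assumptions, not something stated by the operator itself.

```javascript
// Values read from the operator description above.
const hiddenSize = 48;   // last dimension of PreState
const inputSize = 288;   // last dimension of Input
const numLayers = 2;     // Attr.num_layers
const numDirections = 2; // is_bidirec: true

// Expected shapes of the input-to-hidden (w_0) and hidden-to-hidden (w_1)
// weights for every (layer, direction) cell, assuming 4 gates per cell.
function expectedWeightShapes() {
  const shapes = [];
  for (let layer = 0; layer < numLayers; layer++) {
    // The first layer reads the raw input; later layers read the
    // combined forward and backward output of the previous layer.
    const inSize = layer === 0 ? inputSize : hiddenSize * numDirections;
    for (let dir = 0; dir < numDirections; dir++) {
      shapes.push({
        cell: layer * numDirections + dir,
        w_0: [4 * hiddenSize, inSize],
        w_1: [4 * hiddenSize, hiddenSize],
      });
    }
  }
  return shapes;
}

const shapes = expectedWeightShapes();
const stateRows = numLayers * numDirections;    // first dim of PreState: 4
const outputWidth = hiddenSize * numDirections; // last dim of Output: 96
console.log(JSON.stringify(shapes[2].w_0)); // [192,96]
```

Under these assumptions the derived values match the listing: `w_0` is `[192, 288]` for cells 0 and 1 and `[192, 96]` for cells 2 and 3, PreState has 4 rows, and the output width is 96.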

Overall computation process

Ops added in the rnn calculation

- rnn_origin

  Formula: blas.MatMul(Input, WeightList_ih, blas_ih) + blas.MatMul(PreState, WeightList_hh, blas_hh)

- rnn_matmul

  Formula: rnn_matmul = rnn_origin + Matmul($S_{t-1}$, WeightList_hh)

- rnn_cell

  Method: Split the rnn_matmul op output into 4 parts, apply a different activation function to each part, and finally output lstm_x_y.tmp_c[1, 1, 48], where x∈[0, 3] and y∈[0, 24]. For details, please refer to rnn_cell.

- rnn_hidden

  Method: Split the rnn_matmul op output into 4 parts, apply a different activation function to each part, and finally output lstm_x_y.tmp_h[1, 1, 48], where x∈[0, 3] and y∈[0, 24]. For details, please refer to rnn_hidden.
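The rnn_cell and rnn_hidden steps can be pictured with the standard LSTM update, sketched below on plain arrays. This is not the pdjs shader code: the function name `lstmStep` is hypothetical, and the order of the four chunks (input gate, forget gate, candidate, output gate) is an assumption that the actual implementation may not share.

```javascript
const sigmoid = (v) => 1 / (1 + Math.exp(-v));

// gates: flat array of length 4 * hiddenSize holding the pre-activations
//        for one time step (the role played by the rnn_matmul output).
// cPrev: previous cell state, length hiddenSize.
// Assumed chunk order: input gate, forget gate, candidate, output gate.
function lstmStep(gates, cPrev) {
  const n = cPrev.length;
  if (gates.length !== 4 * n) {
    throw new Error('gates must have length 4 * hiddenSize');
  }
  const c = new Array(n);
  const h = new Array(n);
  for (let j = 0; j < n; j++) {
    const i = sigmoid(gates[j]);           // input gate
    const f = sigmoid(gates[n + j]);       // forget gate
    const g = Math.tanh(gates[2 * n + j]); // candidate cell value
    const o = sigmoid(gates[3 * n + j]);   // output gate
    c[j] = f * cPrev[j] + i * g;           // new cell state (tmp_c)
    h[j] = o * Math.tanh(c[j]);            // new hidden state (tmp_h)
  }
  return { c, h };
}

// With all pre-activations at 0, i = f = o = 0.5 and the candidate is 0,
// so the new cell state is half of the previous one.
const { c, h } = lstmStep(new Array(8).fill(0), [1, -2]);
console.log(c); // [ 0.5, -1 ]
```

In the example operator the hidden size is 48, so each step would take 192 pre-activations and produce 48 cell values and 48 hidden values.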