mirror of

https://github.com/PaddlePaddle/FastDeploy.git

synced 2025-10-13 20:34:02 +08:00

Merge branch 'develop' of https://github.com/PaddlePaddle/FastDeploy into huawei

This commit is contained in:

@@ -1,3 +1,4 @@

|

||||

[English](../../en/build_and_install/sophgo.md) | 简体中文

|

||||

# SOPHGO 部署库编译

|

||||

|

||||

## SOPHGO 环境准备

|

||||

|

||||

@@ -1,4 +1,4 @@

|

||||

|

||||

English | [中文](../../cn/build_and_install/sophgo.md)

|

||||

# How to Build SOPHGO Deployment Environment

|

||||

|

||||

## SOPHGO Environment Preparation

|

||||

|

||||

@@ -5,7 +5,7 @@ Please check out the FastDeploy C++ deployment library is already in your enviro

|

||||

|

||||

This document shows an inference sample on the CPU using the PaddleClas classification model MobileNetV2 as an example.

|

||||

|

||||

## 1. Obtaining the Module

|

||||

## 1. Obtaining the Model

|

||||

|

||||

```bash

|

||||

wget https://bj.bcebos.com/fastdeploy/models/mobilenetv2.tgz

|

||||

|

||||

@@ -5,7 +5,7 @@ Please check out the FastDeploy is already installed in your environment. You ca

|

||||

|

||||

This document shows an inference sample on the CPU using the PaddleClas classification model MobileNetV2 as an example.

|

||||

|

||||

## 1. Obtaining the Module

|

||||

## 1. Obtaining the model

|

||||

|

||||

``` python

|

||||

import fastdeploy as fd

|

||||

@@ -42,7 +42,7 @@ results = runtime.infer({

|

||||

|

||||

print(results[0].shape)

|

||||

```

|

||||

When loading is complete, you can get the following output information indicating the initialized backend and the hardware devices.

|

||||

When loading is complete, you will get the following output information indicating the initialized backend and the hardware devices.

|

||||

```

|

||||

[INFO] fastdeploy/fastdeploy_runtime.cc(283)::Init Runtime initialized with Backend::OrtBackend in device Device::CPU.

|

||||

```

|

||||

|

||||

@@ -1,3 +1,4 @@

|

||||

English | [简体中文](README_CN.md)

|

||||

# PaddleJsConverter

|

||||

|

||||

## Installation

|

||||

@@ -26,4 +27,4 @@ pip3 install paddlejsconverter

|

||||

```shell

|

||||

paddlejsconverter --modelPath=user_model_path --paramPath=user_model_params_path --outputDir=model_saved_path --useGPUOpt=True

|

||||

```

|

||||

注意:useGPUOpt 选项默认不开启,如果模型用在 gpu backend(webgl/webgpu),则开启 useGPUOpt,如果模型运行在(wasm/plain js)则不要开启。

|

||||

Note: The option useGPUOpt is not turned on by default. Turn on useGPUOpt if the model is used on gpu backend (webgl/webgpu), don't turn on if is running on (wasm/plain js).

|

||||

|

||||

30

examples/application/js/converter/README_CN.md

Normal file

30

examples/application/js/converter/README_CN.md

Normal file

@@ -0,0 +1,30 @@

|

||||

简体中文 | [English](README.md)

|

||||

# PaddleJsConverter

|

||||

|

||||

## Installation

|

||||

|

||||

System Requirements:

|

||||

|

||||

* paddlepaddle >= 2.0.0

|

||||

* paddlejslite >= 0.0.2

|

||||

* Python3: 3.5.1+ / 3.6 / 3.7

|

||||

* Python2: 2.7.15+

|

||||

|

||||

#### Install PaddleJsConverter

|

||||

|

||||

<img src="https://img.shields.io/pypi/v/paddlejsconverter" alt="version">

|

||||

|

||||

```shell

|

||||

pip install paddlejsconverter

|

||||

|

||||

# or

|

||||

pip3 install paddlejsconverter

|

||||

```

|

||||

|

||||

|

||||

## Usage

|

||||

|

||||

```shell

|

||||

paddlejsconverter --modelPath=user_model_path --paramPath=user_model_params_path --outputDir=model_saved_path --useGPUOpt=True

|

||||

```

|

||||

注意:useGPUOpt 选项默认不开启,如果模型用在 gpu backend(webgl/webgpu),则开启 useGPUOpt,如果模型运行在(wasm/plain js)则不要开启。

|

||||

@@ -1,3 +1,4 @@

|

||||

简体中文 | [English](RNN_EN.md)

|

||||

# RNN算子计算过程

|

||||

|

||||

## 一、RNN理解

|

||||

@@ -73,7 +74,7 @@ paddle源码实现:https://github.com/PaddlePaddle/Paddle/blob/develop/paddle/

|

||||

|

||||

计算方式:将rnn_matmul op输出结果分割成4份,每份执行不同激活函数计算,最后输出lstm_x_y.tmp_c[1, 1, 48]。x∈[0, 3],y∈[0, 24]。

|

||||

详见算子实现:[rnn_cell](../paddlejs-backend-webgl/src/ops/shader/rnn/rnn_cell.ts)

|

||||

)

|

||||

|

||||

|

||||

4)rnn_hidden

|

||||

计算方式:将rnn_matmul op输出结果分割成4份,每份执行不同激活函数计算,最后输出lstm_x_y.tmp_h[1, 1, 48]。x∈[0, 3],y∈[0, 24]。

|

||||

|

||||

80

examples/application/js/converter/RNN_EN.md

Normal file

80

examples/application/js/converter/RNN_EN.md

Normal file

@@ -0,0 +1,80 @@

|

||||

English | [简体中文](RNN.md)

|

||||

# The computation process of RNN operator

|

||||

|

||||

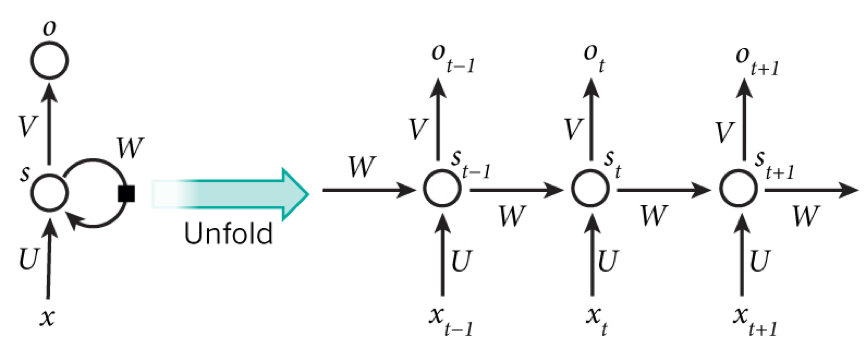

## 1. Understanding of RNN

|

||||

|

||||

**RNN** is a recurrent neural network, including an input layer, a hidden layer and an output layer, which is specialized in processing sequential data.

|

||||

|

||||

|

||||

paddle official document: https://www.paddlepaddle.org.cn/documentation/docs/zh/api/paddle/nn/RNN_cn.html#rnn

|

||||

|

||||

paddle source code implementation: https://github.com/PaddlePaddle/Paddle/blob/develop/paddle/fluid/operators/rnn_op.h#L812

|

||||

|

||||

## 2. How to compute RNN

|

||||

|

||||

At moment t, the input layer is , hidden layer is , output layer is . As the picture above, isn't just decided by ,it is also related to . The formula is as follows.:

|

||||

|

||||

|

||||

|

||||

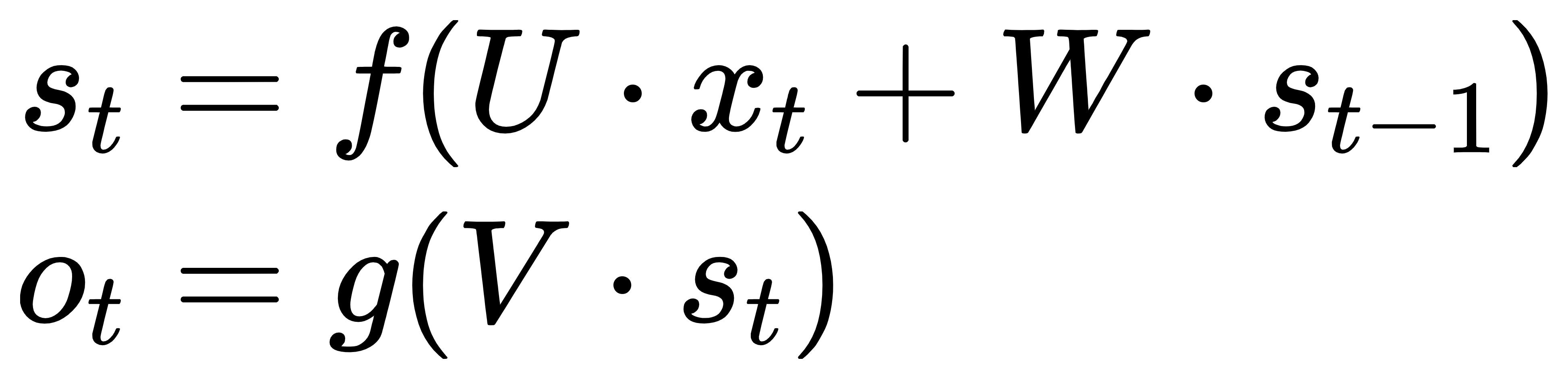

## 3. RNN operator implementation in pdjs

|

||||

|

||||

Because the gradient disappearance problem exists in RNN, and more contextual information cannot be obtained, **LSTM (Long Short Term Memory)** is used in CRNN, which is a special kind of RNN that can preserve long-term dependencies.

|

||||

|

||||

Based on the image sequence, the two directions of context are mutually useful and complementary. Since the LSTM is unidirectional, two LSTMs, one forward and one backward, are combined into a **bidirectional LSTM**. In addition, multiple layers of bidirectional LSTMs can be stacked. ch_PP-OCRv2_rec_infer recognition model is using a two-layer bidirectional LSTM structure. The calculation process is shown as follows.

|

||||

|

||||

#### Take ch_ppocr_mobile_v2.0_rec_infer model, rnn operator as an example

|

||||

```javascript

|

||||

{

|

||||

Attr: {

|

||||

mode: 'LSTM'

|

||||

// Whether bidirectional, if true, it is necessary to traverse both forward and reverse.

|

||||

is_bidirec: true

|

||||

// Number of hidden layers, representing the number of loops.

|

||||

num_layers: 2

|

||||

}

|

||||

|

||||

Input: [

|

||||

transpose_1.tmp_0[25, 1, 288]

|

||||

]

|

||||

|

||||

PreState: [

|

||||

fill_constant_batch_size_like_0.tmp_0[4, 1, 48],

|

||||

fill_constant_batch_size_like_1.tmp_0[4, 1, 48]

|

||||

]

|

||||

|

||||

WeightList: [

|

||||

lstm_cell_0.w_0[192, 288], lstm_cell_0.w_1[192, 48],

|

||||

lstm_cell_1.w_0[192, 288], lstm_cell_1.w_1[192, 48],

|

||||

lstm_cell_2.w_0[192, 96], lstm_cell_2.w_1[192, 48],

|

||||

lstm_cell_3.w_0[192, 96], lstm_cell_3.w_1[192, 48],

|

||||

lstm_cell_0.b_0[192], lstm_cell_0.b_1[192],

|

||||

lstm_cell_1.b_0[192], lstm_cell_1.b_1[192],

|

||||

lstm_cell_2.b_0[192], lstm_cell_2.b_1[192],

|

||||

lstm_cell_3.b_0[192], lstm_cell_3.b_1[192]

|

||||

]

|

||||

|

||||

Output: [

|

||||

lstm_0.tmp_0[25, 1, 96]

|

||||

]

|

||||

}

|

||||

```

|

||||

|

||||

#### Overall computation process

|

||||

|

||||

#### Add op in rnn calculation

|

||||

1) rnn_origin

|

||||

Formula: blas.MatMul(Input, WeightList_ih, blas_ih) + blas.MatMul(PreState, WeightList_hh, blas_hh)

|

||||

|

||||

2) rnn_matmul

|

||||

Formula: rnn_matmul = rnn_origin + Matmul( $ S_{t-1} $, WeightList_hh)

|

||||

|

||||

3) rnn_cell

|

||||

Method: Split the rnn_matmul op output into 4 copies, each copy performs a different activation function calculation, and finally outputs lstm_x_y.tmp_c[1, 1, 48]. x∈[0, 3], y∈[0, 24].

|

||||

For details, please refer to [rnn_cell](../paddlejs-backend-webgl/src/ops/shader/rnn/rnn_cell.ts).

|

||||

|

||||

|

||||

4) rnn_hidden

|

||||

Split the rnn_matmul op output into 4 copies, each copy performs a different activation function calculation, and finally outputs lstm_x_y.tmp_h[1, 1, 48]. x∈[0, 3], y∈[0, 24].

|

||||

For details, please refer to [rnn_hidden](../paddlejs-backend-webgl/src/ops/shader/rnn/rnn_hidden.ts).

|

||||

|

||||

|

||||

@@ -47,7 +47,7 @@ humanseg.drawMask(data, canvas3, back_canvas);

|

||||

|

||||

```js

|

||||

|

||||

// 引入 humanseg sdk

|

||||

// import humanseg sdk

|

||||

import * as humanseg from '@paddle-js-models/humanseg/lib/index_gpu';

|

||||

|

||||

// load humanseg model, use 398x224 shape model, and preheat

|

||||

|

||||

@@ -1,9 +1,10 @@

|

||||

# PaddleSpeech 流式语音合成

|

||||

English | [简体中文](README_CN.md)

|

||||

# PaddleSpeech Streaming Text-to-Speech

|

||||

|

||||

|

||||

- 本文示例的实现来自[PaddleSpeech 流式语音合成](https://github.com/PaddlePaddle/PaddleSpeech/tree/r1.2).

|

||||

- The examples in this document are from [PaddleSpeech Streaming Text-to-Speech](https://github.com/PaddlePaddle/PaddleSpeech/tree/r1.2).

|

||||

|

||||

## 详细部署文档

|

||||

## Detailed deployment document

|

||||

|

||||

- [Python部署](python)

|

||||

- [Serving部署](serving)

|

||||

- [Python deployment](python)

|

||||

- [Serving deployment](serving)

|

||||

|

||||

10

examples/audio/pp-tts/README_CN.md

Normal file

10

examples/audio/pp-tts/README_CN.md

Normal file

@@ -0,0 +1,10 @@

|

||||

简体中文 | [English](README.md)

|

||||

# PaddleSpeech 流式语音合成

|

||||

|

||||

|

||||

- 本文示例的实现来自[PaddleSpeech 流式语音合成](https://github.com/PaddlePaddle/PaddleSpeech/tree/r1.2).

|

||||

|

||||

## 详细部署文档

|

||||

|

||||

- [Python部署](python)

|

||||

- [Serving部署](serving)

|

||||

@@ -1,63 +1,64 @@

|

||||

# FastDeploy Diffusion模型高性能部署

|

||||

English | [简体中文](README_CN.md)

|

||||

# FastDeploy Diffusion Model High-Performance Deployment

|

||||

|

||||

本部署示例使用⚡️`FastDeploy`在Huggingface团队[Diffusers](https://github.com/huggingface/diffusers)项目设计的`DiffusionPipeline`基础上,完成Diffusion模型的高性能部署。

|

||||

This document completes the high-performance deployment of the Diffusion model with ⚡️`FastDeploy`, based on `DiffusionPipeline` in project [Diffusers](https://github.com/huggingface/diffusers) designed by Huggingface.

|

||||

|

||||

### 部署模型准备

|

||||

### Preperation for Deployment

|

||||

|

||||

本示例需要使用训练模型导出后的部署模型。有两种部署模型的获取方式:

|

||||

This example needs the deployment model after exporting the training model. Here are two ways to obtain the deployment model:

|

||||

|

||||

- 模型导出方式,可参考[模型导出文档](./export.md)导出部署模型。

|

||||

- 下载部署模型。为了方便开发者快速测试本示例,我们已经将部分`Diffusion`模型预先导出,开发者只要下载模型就可以快速测试:

|

||||

- Methods for model export. Please refer to [Model Export](./export_EN.md) to export deployment model.

|

||||

- Download the deployment model. To facilitate developers to test the example, we have pre-exported some of the `Diffusion` models, so you can just download models and test them quickly:

|

||||

|

||||

| 模型 | Scheduler |

|

||||

| Model | Scheduler |

|

||||

|----------|--------------|

|

||||

| [CompVis/stable-diffusion-v1-4](https://bj.bcebos.com/fastdeploy/models/stable-diffusion/CompVis/stable-diffusion-v1-4.tgz) | PNDM |

|

||||

| [runwayml/stable-diffusion-v1-5](https://bj.bcebos.com/fastdeploy/models/stable-diffusion/runwayml/stable-diffusion-v1-5.tgz) | EulerAncestral |

|

||||

|

||||

## 环境依赖

|

||||

## Environment Dependency

|

||||

|

||||

在示例中使用了PaddleNLP的CLIP模型的分词器,所以需要执行以下命令安装依赖。

|

||||

In the example, the word splitter in CLIP model of PaddleNLP is required, so you need to run the following line to install the dependency.

|

||||

|

||||

```shell

|

||||

pip install paddlenlp paddlepaddle-gpu

|

||||

```

|

||||

|

||||

### 快速体验

|

||||

### Quick Experience

|

||||

|

||||

我们经过部署模型准备,可以开始进行测试。下面将指定模型目录以及推理引擎后端,运行`infer.py`脚本,完成推理。

|

||||

We are ready to start testing after model deployment. Here we will specify the model directory as well as the inference engine backend, and run the `infer.py` script to complete the inference.

|

||||

|

||||

```

|

||||

python infer.py --model_dir stable-diffusion-v1-4/ --scheduler "pndm" --backend paddle

|

||||

```

|

||||

|

||||

得到的图像文件为fd_astronaut_rides_horse.png。生成的图片示例如下(每次生成的图片都不相同,示例仅作参考):

|

||||

The image file is fd_astronaut_rides_horse.png. An example of the generated image is as follows (the generated image is different each time, the example is for reference only):

|

||||

|

||||

|

||||

|

||||

如果使用stable-diffusion-v1-5模型,则可执行以下命令完成推理:

|

||||

If the stable-diffusion-v1-5 model is used, you can run these to complete the inference.

|

||||

|

||||

```

|

||||

# GPU上推理

|

||||

# Inference on GPU

|

||||

python infer.py --model_dir stable-diffusion-v1-5/ --scheduler "euler_ancestral" --backend paddle

|

||||

|

||||

# 在昆仑芯XPU上推理

|

||||

# Inference on KunlunXin XPU

|

||||

python infer.py --model_dir stable-diffusion-v1-5/ --scheduler "euler_ancestral" --backend paddle-kunlunxin

|

||||

```

|

||||

|

||||

#### 参数说明

|

||||

#### Parameters

|

||||

|

||||

`infer.py` 除了以上示例的命令行参数,还支持更多命令行参数的设置。以下为各命令行参数的说明。

|

||||

`infer.py` supports more command line parameters than the above example. The following is a description of each command line parameter.

|

||||

|

||||

| 参数 |参数说明 |

|

||||

| Parameter |Description |

|

||||

|----------|--------------|

|

||||

| --model_dir | 导出后模型的目录。 |

|

||||

| --model_format | 模型格式。默认为`'paddle'`,可选列表:`['paddle', 'onnx']`。 |

|

||||

| --backend | 推理引擎后端。默认为`paddle`,可选列表:`['onnx_runtime', 'paddle', 'paddle-kunlunxin']`,当模型格式为`onnx`时,可选列表为`['onnx_runtime']`。 |

|

||||

| --scheduler | StableDiffusion 模型的scheduler。默认为`'pndm'`。可选列表:`['pndm', 'euler_ancestral']`,StableDiffusio模型对应的scheduler可参考[ppdiffuser模型列表](https://github.com/PaddlePaddle/PaddleNLP/tree/develop/ppdiffusers/examples/textual_inversion)。|

|

||||

| --unet_model_prefix | UNet模型前缀。默认为`unet`。 |

|

||||

| --vae_model_prefix | VAE模型前缀。默认为`vae_decoder`。 |

|

||||

| --text_encoder_model_prefix | TextEncoder模型前缀。默认为`text_encoder`。 |

|

||||

| --inference_steps | UNet模型运行的次数,默认为100。 |

|

||||

| --image_path | 生成图片的路径。默认为`fd_astronaut_rides_horse.png`。 |

|

||||

| --device_id | gpu设备的id。若`device_id`为-1,视为使用cpu推理。 |

|

||||

| --use_fp16 | 是否使用fp16精度。默认为`False`。使用tensorrt或者paddle-tensorrt后端时可以设为`True`开启。 |

|

||||

| --model_dir | Directory of the exported model. |

|

||||

| --model_format | Model format. Default is `'paddle'`, optional list: `['paddle', 'onnx']`. |

|

||||

| --backend | Inference engine backend. Default is`paddle`, optional list: `['onnx_runtime', 'paddle', 'paddle-kunlunxin']`, when the model format is `onnx`, optional list is`['onnx_runtime']`. |

|

||||

| --scheduler | Scheduler in StableDiffusion model. Default is`'pndm'`, optional list `['pndm', 'euler_ancestral']`. The scheduler corresponding to the StableDiffusio model can be found in [ppdiffuser model list](https://github.com/PaddlePaddle/PaddleNLP/tree/develop/ppdiffusers/examples/textual_inversion).|

|

||||

| --unet_model_prefix | UNet model prefix, default is `unet`. |

|

||||

| --vae_model_prefix | VAE model prefix, defalut is `vae_decoder`. |

|

||||

| --text_encoder_model_prefix | TextEncoder model prefix, default is `text_encoder`. |

|

||||

| --inference_steps | Running times of UNet model, default is 100. |

|

||||

| --image_path | Path to the generated images, defalut is `fd_astronaut_rides_horse.png`. |

|

||||

| --device_id | gpu id. If `device_id` is -1, cpu is used for inference. |

|

||||

| --use_fp16 | Indicates if fp16 is used, default is `False`. Can be set to `True` when using tensorrt or paddle-tensorrt backend. |

|

||||

|

||||

64

examples/multimodal/stable_diffusion/README_CN.md

Normal file

64

examples/multimodal/stable_diffusion/README_CN.md

Normal file

@@ -0,0 +1,64 @@

|

||||

简体中文 | [English](README.md)

|

||||

# FastDeploy Diffusion模型高性能部署

|

||||

|

||||

本部署示例使用⚡️`FastDeploy`在Huggingface团队[Diffusers](https://github.com/huggingface/diffusers)项目设计的`DiffusionPipeline`基础上,完成Diffusion模型的高性能部署。

|

||||

|

||||

### 部署模型准备

|

||||

|

||||

本示例需要使用训练模型导出后的部署模型。有两种部署模型的获取方式:

|

||||

|

||||

- 模型导出方式,可参考[模型导出文档](./export.md)导出部署模型。

|

||||

- 下载部署模型。为了方便开发者快速测试本示例,我们已经将部分`Diffusion`模型预先导出,开发者只要下载模型就可以快速测试:

|

||||

|

||||

| 模型 | Scheduler |

|

||||

|----------|--------------|

|

||||

| [CompVis/stable-diffusion-v1-4](https://bj.bcebos.com/fastdeploy/models/stable-diffusion/CompVis/stable-diffusion-v1-4.tgz) | PNDM |

|

||||

| [runwayml/stable-diffusion-v1-5](https://bj.bcebos.com/fastdeploy/models/stable-diffusion/runwayml/stable-diffusion-v1-5.tgz) | EulerAncestral |

|

||||

|

||||

## 环境依赖

|

||||

|

||||

在示例中使用了PaddleNLP的CLIP模型的分词器,所以需要执行以下命令安装依赖。

|

||||

|

||||

```shell

|

||||

pip install paddlenlp paddlepaddle-gpu

|

||||

```

|

||||

|

||||

### 快速体验

|

||||

|

||||

我们经过部署模型准备,可以开始进行测试。下面将指定模型目录以及推理引擎后端,运行`infer.py`脚本,完成推理。

|

||||

|

||||

```

|

||||

python infer.py --model_dir stable-diffusion-v1-4/ --scheduler "pndm" --backend paddle

|

||||

```

|

||||

|

||||

得到的图像文件为fd_astronaut_rides_horse.png。生成的图片示例如下(每次生成的图片都不相同,示例仅作参考):

|

||||

|

||||

|

||||

|

||||

如果使用stable-diffusion-v1-5模型,则可执行以下命令完成推理:

|

||||

|

||||

```

|

||||

# GPU上推理

|

||||

python infer.py --model_dir stable-diffusion-v1-5/ --scheduler "euler_ancestral" --backend paddle

|

||||

|

||||

# 在昆仑芯XPU上推理

|

||||

python infer.py --model_dir stable-diffusion-v1-5/ --scheduler "euler_ancestral" --backend paddle-kunlunxin

|

||||

```

|

||||

|

||||

#### 参数说明

|

||||

|

||||

`infer.py` 除了以上示例的命令行参数,还支持更多命令行参数的设置。以下为各命令行参数的说明。

|

||||

|

||||

| 参数 |参数说明 |

|

||||

|----------|--------------|

|

||||

| --model_dir | 导出后模型的目录。 |

|

||||

| --model_format | 模型格式。默认为`'paddle'`,可选列表:`['paddle', 'onnx']`。 |

|

||||

| --backend | 推理引擎后端。默认为`paddle`,可选列表:`['onnx_runtime', 'paddle', 'paddle-kunlunxin']`,当模型格式为`onnx`时,可选列表为`['onnx_runtime']`。 |

|

||||

| --scheduler | StableDiffusion 模型的scheduler。默认为`'pndm'`。可选列表:`['pndm', 'euler_ancestral']`,StableDiffusio模型对应的scheduler可参考[ppdiffuser模型列表](https://github.com/PaddlePaddle/PaddleNLP/tree/develop/ppdiffusers/examples/textual_inversion)。|

|

||||

| --unet_model_prefix | UNet模型前缀。默认为`unet`。 |

|

||||

| --vae_model_prefix | VAE模型前缀。默认为`vae_decoder`。 |

|

||||

| --text_encoder_model_prefix | TextEncoder模型前缀。默认为`text_encoder`。 |

|

||||

| --inference_steps | UNet模型运行的次数,默认为100。 |

|

||||

| --image_path | 生成图片的路径。默认为`fd_astronaut_rides_horse.png`。 |

|

||||

| --device_id | gpu设备的id。若`device_id`为-1,视为使用cpu推理。 |

|

||||

| --use_fp16 | 是否使用fp16精度。默认为`False`。使用tensorrt或者paddle-tensorrt后端时可以设为`True`开启。 |

|

||||

@@ -1,12 +1,13 @@

|

||||

# StableDiffusion C++部署示例

|

||||

English | [简体中文](README_CN.md)

|

||||

# StableDiffusion C++ Deployment

|

||||

|

||||

在部署前,需确认以下两个步骤

|

||||

Before deployment, the following two steps need to be confirmed:

|

||||

|

||||

- 1. 软硬件环境满足要求,参考[FastDeploy环境要求](../../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

- 2. 根据开发环境,下载预编译部署库和samples代码,参考[FastDeploy预编译库](../../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

- 1. Hardware and software environment meets the requirements. Please refer to [Environment requirements for FastDeploy](../../../../docs/en/build_and_install/download_prebuilt_libraries.md)

|

||||

- 2. Download pre-compiled libraries and samples according to the development environment. Please refer to [FastDeploy pre-compiled libraries](../../../../docs/en/build_and_install/download_prebuilt_libraries.md)

|

||||

|

||||

本目录下提供`*_infer.cc`快速完成StableDiffusion各任务的C++部署示例。

|

||||

This directory provides `*_infer.cc` to quickly complete C++ deployment examples for each task of StableDiffusion.

|

||||

|

||||

## Inpaint任务

|

||||

## Inpaint Task

|

||||

|

||||

StableDiffusion Inpaint任务是一个根据提示文本补全图片的任务,具体而言就是用户给定提示文本,原始图片以及原始图片的mask图片,该任务输出补全后的图片。

|

||||

The StableDiffusion Inpaint task is a task that completes the image based on the prompt text. User provides the prompt text, the original image and the mask image of the original image, and the task outputs the completed image.

|

||||

|

||||

13

examples/multimodal/stable_diffusion/cpp/README_CN.md

Normal file

13

examples/multimodal/stable_diffusion/cpp/README_CN.md

Normal file

@@ -0,0 +1,13 @@

|

||||

简体中文 | [English](README.md)

|

||||

# StableDiffusion C++部署示例

|

||||

|

||||

在部署前,需确认以下两个步骤

|

||||

|

||||

- 1. 软硬件环境满足要求,参考[FastDeploy环境要求](../../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

- 2. 根据开发环境,下载预编译部署库和samples代码,参考[FastDeploy预编译库](../../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

|

||||

本目录下提供`*_infer.cc`快速完成StableDiffusion各任务的C++部署示例。

|

||||

|

||||

## Inpaint任务

|

||||

|

||||

StableDiffusion Inpaint任务是一个根据提示文本补全图片的任务,具体而言就是用户给定提示文本,原始图片以及原始图片的mask图片,该任务输出补全后的图片。

|

||||

@@ -1,3 +1,4 @@

|

||||

简体中文 | [English](export_EN.md)

|

||||

# Diffusion模型导出教程

|

||||

|

||||

本项目支持两种模型导出方式:[PPDiffusers](https://github.com/PaddlePaddle/PaddleNLP/tree/develop/ppdiffusers)模型导出以及[Diffusers](https://github.com/huggingface/diffusers)模型导出。下面分别介绍这两种模型导出方式。

|

||||

|

||||

106

examples/multimodal/stable_diffusion/export_EN.md

Normal file

106

examples/multimodal/stable_diffusion/export_EN.md

Normal file

@@ -0,0 +1,106 @@

|

||||

English | [简体中文](export.md)

|

||||

# Diffusion Model Export

|

||||

|

||||

The project supports two methods of model export, [PPDiffusers](https://github.com/PaddlePaddle/PaddleNLP/tree/develop/ppdiffusers) model export and [Diffusers](https://github.com/huggingface/diffusers) model export. Here we introduce each of these two methods.

|

||||

|

||||

## PPDiffusers Model Export

|

||||

|

||||

[PPDiffusers](https://github.com/PaddlePaddle/PaddleNLP/tree/develop/ppdiffusers) is a Diffusion Model toolkit that supports cross-modal (e.g., image and speech) training and inference. It builds on the design of [Diffusers](https://github.com/huggingface/diffusers) by the 🤗 Huggingface team, and relies on [PaddlePaddle](https://github.com/PaddlePaddle/Paddle) framework and the [PaddleNLP](https://github.com/PaddlePaddle/PaddleNLP) natural language processing library. The following describes how to use FastDeploy to deploy the Diffusion model provided by PPDiffusers for high performance.

|

||||

|

||||

### Dependency Installation

|

||||

|

||||

The model export depends on `paddlepaddle`, `paddlenlp` and `ppdiffusers`, which can be installed quickly by running the following command using `pip`.

|

||||

|

||||

```shell

|

||||

pip install -r requirements_paddle.txt

|

||||

```

|

||||

|

||||

### Model Export

|

||||

|

||||

___Note: The StableDiffusion model needs to be downloaded during the model export process. In order to use the model and weights, you must accept the License required. Please visit HuggingFace's [model card](https://huggingface.co/runwayml/stable-diffusion-v1-5), to read the License carefully, and then sign the agreement.___

|

||||

|

||||

___Tips: Stable Diffusion is based on these Licenses: The CreativeML OpenRAIL M license is an Open RAIL M license, adapted from the work that BigScience and the RAIL Initiative are jointly carrying in the area of responsible AI licensing. See also the article about the BLOOM Open RAIL license on which this license is based.___

|

||||

|

||||

You can run the following lines to export model.

|

||||

|

||||

```shell

|

||||

python export_model.py --pretrained_model_name_or_path CompVis/stable-diffusion-v1-4 --output_path stable-diffusion-v1-4

|

||||

```

|

||||

|

||||

The output model directory is as follows:

|

||||

```shell

|

||||

stable-diffusion-v1-4/

|

||||

├── text_encoder

|

||||

│ ├── inference.pdiparams

|

||||

│ ├── inference.pdiparams.info

|

||||

│ └── inference.pdmodel

|

||||

├── unet

|

||||

│ ├── inference.pdiparams

|

||||

│ ├── inference.pdiparams.info

|

||||

│ └── inference.pdmodel

|

||||

└── vae_decoder

|

||||

├── inference.pdiparams

|

||||

├── inference.pdiparams.info

|

||||

└── inference.pdmodel

|

||||

```

|

||||

|

||||

#### Parameters

|

||||

|

||||

Here is description of each command line parameter in `export_model.py`.

|

||||

|

||||

| Parameter |Description |

|

||||

|----------|--------------|

|

||||

|<div style="width: 230pt">--pretrained_model_name_or_path </div> | The diffusion pretrained model provided by ppdiffuers. Default is "CompVis/stable-diffusion-v1-4". For more diffusion pretrained models, please refer to [ppdiffuser model list](https://github.com/PaddlePaddle/PaddleNLP/tree/develop/ppdiffusers/examples/textual_inversion).|

|

||||

|--output_path | Exported directory |

|

||||

|

||||

|

||||

## Diffusers Model Export

|

||||

|

||||

[Diffusers](https://github.com/huggingface/diffusers) is a Diffusion Model toolkit built by HuggingFace to support cross-modal (e.g. image and speech) training and inference. The underlying model code is available in both a PyTorch implementation and a Flax implementation. This example shows how to use FastDeploy to deploy a PyTorch implementation of Diffusion Model for high performance.

|

||||

|

||||

### Dependency Installation

|

||||

|

||||

The model export depends on `onnx`, `torch`, `diffusers` and `transformers`, which can be installed quickly by running the following command using `pip`.

|

||||

|

||||

```shell

|

||||

pip install -r requirements_torch.txt

|

||||

```

|

||||

|

||||

### Model Export

|

||||

|

||||

___Note: The StableDiffusion model needs to be downloaded during the model export process. In order to use the model and weights, you must accept the License required, and get the Token granted by HF Hub. Please visit HuggingFace's [model card](https://huggingface.co/runwayml/stable-diffusion-v1-5), to read the License carefully, and then sign the agreement.___

|

||||

|

||||

___Tips: Stable Diffusion is based on these Licenses: The CreativeML OpenRAIL M license is an Open RAIL M license, adapted from the work that BigScience and the RAIL Initiative are jointly carrying in the area of responsible AI licensing. See also the article about the BLOOM Open RAIL license on which this license is based.___

|

||||

|

||||

If you are exporting a model for the first time, you need to log in to the HuggingFace client first. Run the following command to log in:

|

||||

|

||||

```shell

|

||||

huggingface-cli login

|

||||

```

|

||||

|

||||

After finishing the login, you can run the following lines to export model.

|

||||

|

||||

```shell

|

||||

python export_torch_to_onnx_model.py --pretrained_model_name_or_path CompVis/stable-diffusion-v1-4 --output_path torch_diffusion_model

|

||||

```

|

||||

|

||||

The output model directory is as follows:

|

||||

|

||||

```shell

|

||||

torch_diffusion_model/

|

||||

├── text_encoder

|

||||

│ └── inference.onnx

|

||||

├── unet

|

||||

│ └── inference.onnx

|

||||

└── vae_decoder

|

||||

└── inference.onnx

|

||||

```

|

||||

|

||||

#### Parameters

|

||||

|

||||

Here is description of each command line parameter in `export_torch_to_onnx_model.py`.

|

||||

|

||||

| Parameter |Description |

|

||||

|----------|--------------|

|

||||

|<div style="width: 230pt">--pretrained_model_name_or_path </div> |The diffusion pretrained model provided by ppdiffuers, default is "CompVis/stable-diffusion-v1-4". For more diffusion pretrained models, please refer to [HuggingFace model list](https://huggingface.co/CompVis/stable-diffusion-v1-4).|

|

||||

|--output_path |Exported directory |

|

||||

@@ -1,8 +1,9 @@

|

||||

English | [简体中文](README_CN.md)

|

||||

# FastDeploy Runtime examples

|

||||

|

||||

FastDeploy Runtime 推理示例如下

|

||||

FastDeploy Runtime examples are as follows:

|

||||

|

||||

## Python 示例

|

||||

## Python Example

|

||||

|

||||

| Example Code | Program Language | Description |

|

||||

| :------- | :------- | :---- |

|

||||

@@ -15,7 +16,7 @@ FastDeploy Runtime 推理示例如下

|

||||

| python/infer_onnx_onnxruntime.py | Python | Deploy ONNX model with ONNX Runtime(CPU/GPU) |

|

||||

| python/infer_torchscript_poros.py | Python | Deploy TorchScript model with Poros Runtime(CPU/GPU) |

|

||||

|

||||

## C++ 示例

|

||||

## C++ Example

|

||||

|

||||

| Example Code | Program Language | Description |

|

||||

| :------- | :------- | :---- |

|

||||

@@ -28,7 +29,7 @@ FastDeploy Runtime 推理示例如下

|

||||

| cpp/infer_onnx_onnxruntime.cc | C++ | Deploy ONNX model with ONNX Runtime(CPU/GPU) |

|

||||

| cpp/infer_torchscript_poros.cc | C++ | Deploy TorchScript model with Poros Runtime(CPU/GPU) |

|

||||

|

||||

## 详细部署文档

|

||||

## Detailed deployment documents

|

||||

|

||||

- [Python部署](python)

|

||||

- [C++部署](cpp)

|

||||

- [Python deployment](python)

|

||||

- [C++ deployment](cpp)

|

||||

|

||||

35

examples/runtime/README_CN.md

Normal file

35

examples/runtime/README_CN.md

Normal file

@@ -0,0 +1,35 @@

|

||||

简体中文 | [English](README.md)

|

||||

# FastDeploy Runtime examples

|

||||

|

||||

FastDeploy Runtime 推理示例如下

|

||||

|

||||

## Python 示例

|

||||

|

||||

| Example Code | Program Language | Description |

|

||||

| :------- | :------- | :---- |

|

||||

| python/infer_paddle_paddle_inference.py | Python | Deploy Paddle model with Paddle Inference(CPU/GPU) |

|

||||

| python/infer_paddle_tensorrt.py | Python | Deploy Paddle model with TensorRT(GPU) |

|

||||

| python/infer_paddle_openvino.py | Python | Deploy Paddle model with OpenVINO(CPU) |

|

||||

| python/infer_paddle_onnxruntime.py | Python | Deploy Paddle model with ONNX Runtime(CPU/GPU) |

|

||||

| python/infer_onnx_openvino.py | Python | Deploy ONNX model with OpenVINO(CPU) |

|

||||

| python/infer_onnx_tensorrt.py | Python | Deploy ONNX model with TensorRT(GPU) |

|

||||

| python/infer_onnx_onnxruntime.py | Python | Deploy ONNX model with ONNX Runtime(CPU/GPU) |

|

||||

| python/infer_torchscript_poros.py | Python | Deploy TorchScript model with Poros Runtime(CPU/GPU) |

|

||||

|

||||

## C++ 示例

|

||||

|

||||

| Example Code | Program Language | Description |

|

||||

| :------- | :------- | :---- |

|

||||

| cpp/infer_paddle_paddle_inference.cc | C++ | Deploy Paddle model with Paddle Inference(CPU/GPU) |

|

||||

| cpp/infer_paddle_tensorrt.cc | C++ | Deploy Paddle model with TensorRT(GPU) |

|

||||

| cpp/infer_paddle_openvino.cc | C++ | Deploy Paddle model with OpenVINO(CPU |

|

||||

| cpp/infer_paddle_onnxruntime.cc | C++ | Deploy Paddle model with ONNX Runtime(CPU/GPU) |

|

||||

| cpp/infer_onnx_openvino.cc | C++ | Deploy ONNX model with OpenVINO(CPU) |

|

||||

| cpp/infer_onnx_tensorrt.cc | C++ | Deploy ONNX model with TensorRT(GPU) |

|

||||

| cpp/infer_onnx_onnxruntime.cc | C++ | Deploy ONNX model with ONNX Runtime(CPU/GPU) |

|

||||

| cpp/infer_torchscript_poros.cc | C++ | Deploy TorchScript model with Poros Runtime(CPU/GPU) |

|

||||

|

||||

## 详细部署文档

|

||||

|

||||

- [Python部署](python)

|

||||

- [C++部署](cpp)

|

||||

@@ -1,22 +1,23 @@

|

||||

English | [简体中文](README_CN.md)

|

||||

# C++推理

|

||||

|

||||

在运行demo前,需确认以下两个步骤

|

||||

Before running demo, the following two steps need to be confirmed:

|

||||

|

||||

- 1. 软硬件环境满足要求,参考[FastDeploy环境要求](../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

- 2. 根据开发环境,下载预编译部署库和samples代码,参考[FastDeploy预编译库](../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

- 1. Hardware and software environment meets the requirements. Please refer to [Environment requirements for FastDeploy](../../../docs/en/build_and_install/download_prebuilt_libraries.md).

|

||||

- 2. Download pre-compiled libraries and samples according to the development environment. Please refer to [FastDeploy pre-compiled libraries](../../../docs/cn/build_and_install/download_prebuilt_libraries.md).

|

||||

|

||||

本文档以 PaddleClas 分类模型 MobileNetV2 为例展示CPU上的推理示例

|

||||

This document shows an inference example on the CPU using the PaddleClas classification model MobileNetV2 as an example.

|

||||

|

||||

## 1. 获取模型

|

||||

## 1. Obtaining the Model

|

||||

|

||||

```bash

|

||||

wget https://bj.bcebos.com/fastdeploy/models/mobilenetv2.tgz

|

||||

tar xvf mobilenetv2.tgz

|

||||

```

|

||||

|

||||

## 2. 配置后端

|

||||

## 2. Backend Configuration

|

||||

|

||||

如下C++代码保存为`infer_paddle_onnxruntime.cc`

|

||||

The following C++ code is saved as `infer_paddle_onnxruntime.cc`.

|

||||

|

||||

``` c++

|

||||

#include "fastdeploy/runtime.h"

|

||||

@@ -66,35 +67,35 @@ int main(int argc, char* argv[]) {

|

||||

return 0;

|

||||

}

|

||||

```

|

||||

加载完成,会输出提示如下,说明初始化的后端,以及运行的硬件设备

|

||||

When loading is complete, the following prompt will be output, indicating the initialized backend, and the running hardware devices.

|

||||

```

|

||||

[INFO] fastdeploy/fastdeploy_runtime.cc(283)::Init Runtime initialized with Backend::OrtBackend in device Device::CPU.

|

||||

```

|

||||

|

||||

## 3. 准备CMakeLists.txt

|

||||

## 3. Prepare for CMakeLists.txt

|

||||

|

||||

FastDeploy中包含多个依赖库,直接采用`g++`或编译器编译较为繁杂,推荐使用cmake进行编译配置。示例配置如下,

|

||||

FastDeploy contains several dependencies, it is complicated to compile directly with `g++` or compiler, so we recommend using cmake for compiling configuration. The sample configuration is as follows:

|

||||

|

||||

```cmake

|

||||

PROJECT(runtime_demo C CXX)

|

||||

CMAKE_MINIMUM_REQUIRED (VERSION 3.12)

|

||||

|

||||

# 指定下载解压后的fastdeploy库路径

|

||||

# Specify the path to the fastdeploy library after downloading and unpacking.

|

||||

option(FASTDEPLOY_INSTALL_DIR "Path of downloaded fastdeploy sdk.")

|

||||

|

||||

include(${FASTDEPLOY_INSTALL_DIR}/FastDeploy.cmake)

|

||||

|

||||

# 添加FastDeploy依赖头文件

|

||||

# Add FastDeploy dependency headers.

|

||||

include_directories(${FASTDEPLOY_INCS})

|

||||

|

||||

add_executable(runtime_demo ${PROJECT_SOURCE_DIR}/infer_onnx_openvino.cc)

|

||||

# 添加FastDeploy库依赖

|

||||

# Adding FastDeploy library dependencies.

|

||||

target_link_libraries(runtime_demo ${FASTDEPLOY_LIBS})

|

||||

```

|

||||

|

||||

## 4. 编译可执行程序

|

||||

## 4. Compile executable program

|

||||

|

||||

打开命令行终端,进入`infer_paddle_onnxruntime.cc`和`CMakeLists.txt`所在的目录,执行如下命令

|

||||

Open the terminal, go to the directory where `infer_paddle_onnxruntime.cc` and `CMakeLists.txt` are located, and run the following command:

|

||||

|

||||

```bash

|

||||

mkdir build & cd build

|

||||

@@ -102,20 +103,20 @@ cmake .. -DFASTDEPLOY_INSTALL_DIR=$fastdeploy_cpp_sdk

|

||||

make -j

|

||||

```

|

||||

|

||||

```fastdeploy_cpp_sdk``` 为FastDeploy C++部署库路径

|

||||

```fastdeploy_cpp_sdk``` is path to FastDeploy C++ deployment libraries.

|

||||

|

||||

编译完成后,使用如下命令执行可得到预测结果

|

||||

After compiling, run the following command and get the results.

|

||||

```bash

|

||||

./runtime_demo

|

||||

```

|

||||

执行时如提示`error while loading shared libraries: libxxx.so: cannot open shared object file: No such file...`,说明程序执行时没有找到FastDeploy的库路径,可通过执行如下命令,将FastDeploy的库路径添加到环境变量之后,重新执行二进制程序。

|

||||

If you are prompted with `error while loading shared libraries: libxxx.so: cannot open shared object file: No such file... `, it means that the path to FastDeploy libraries is not found, you can run the program again after adding the path to the environment variable by executing the following command.

|

||||

```bash

|

||||

source /Path/to/fastdeploy_cpp_sdk/fastdeploy_init.sh

|

||||

```

|

||||

|

||||

本示例代码在各平台(Windows/Linux/Mac)上通用,但编译过程仅支持(Linux/Mac),Windows上使用msbuild进行编译,具体使用方式参考[Windows平台使用FastDeploy C++ SDK](../../../docs/cn/faq/use_sdk_on_windows.md)

|

||||

This sample code is common on all platforms (Windows/Linux/Mac), but the compilation process is only supported on (Linux/Mac),while using msbuild to compile on Windows. Please refer to [FastDeploy C++ SDK on Windows](../../../docs/en/faq/use_sdk_on_windows.md).

|

||||

|

||||

## 其它文档

|

||||

## Other Documents

|

||||

|

||||

- [Runtime Python 示例](../python)

|

||||

- [切换模型推理的硬件和后端](../../../docs/cn/faq/how_to_change_backend.md)

|

||||

- [A Python example for Runtime](../python)

|

||||

- [Switching hardware and backend for model inference](../../../docs/en/faq/how_to_change_backend.md)

|

||||

|

||||

122

examples/runtime/cpp/README_CN.md

Normal file

122

examples/runtime/cpp/README_CN.md

Normal file

@@ -0,0 +1,122 @@

|

||||

简体中文 | [English](README.md)

|

||||

# C++推理

|

||||

|

||||

在运行demo前,需确认以下两个步骤

|

||||

|

||||

- 1. 软硬件环境满足要求,参考[FastDeploy环境要求](../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

- 2. 根据开发环境,下载预编译部署库和samples代码,参考[FastDeploy预编译库](../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

|

||||

本文档以 PaddleClas 分类模型 MobileNetV2 为例展示CPU上的推理示例

|

||||

|

||||

## 1. 获取模型

|

||||

|

||||

```bash

|

||||

wget https://bj.bcebos.com/fastdeploy/models/mobilenetv2.tgz

|

||||

tar xvf mobilenetv2.tgz

|

||||

```

|

||||

|

||||

## 2. 配置后端

|

||||

|

||||

如下C++代码保存为`infer_paddle_onnxruntime.cc`

|

||||

|

||||

``` c++

|

||||

#include "fastdeploy/runtime.h"

|

||||

|

||||

namespace fd = fastdeploy;

|

||||

|

||||

int main(int argc, char* argv[]) {

|

||||

std::string model_file = "mobilenetv2/inference.pdmodel";

|

||||

std::string params_file = "mobilenetv2/inference.pdiparams";

|

||||

|

||||

// setup option

|

||||

fd::RuntimeOption runtime_option;

|

||||

runtime_option.SetModelPath(model_file, params_file, fd::ModelFormat::PADDLE);

|

||||

runtime_option.UseOrtBackend();

|

||||

runtime_option.SetCpuThreadNum(12);

|

||||

// init runtime

|

||||

std::unique_ptr<fd::Runtime> runtime =

|

||||

std::unique_ptr<fd::Runtime>(new fd::Runtime());

|

||||

if (!runtime->Init(runtime_option)) {

|

||||

std::cerr << "--- Init FastDeploy Runitme Failed! "

|

||||

<< "\n--- Model: " << model_file << std::endl;

|

||||

return -1;

|

||||

} else {

|

||||

std::cout << "--- Init FastDeploy Runitme Done! "

|

||||

<< "\n--- Model: " << model_file << std::endl;

|

||||

}

|

||||

// init input tensor shape

|

||||

fd::TensorInfo info = runtime->GetInputInfo(0);

|

||||

info.shape = {1, 3, 224, 224};

|

||||

|

||||

std::vector<fd::FDTensor> input_tensors(1);

|

||||

std::vector<fd::FDTensor> output_tensors(1);

|

||||

|

||||

std::vector<float> inputs_data;

|

||||

inputs_data.resize(1 * 3 * 224 * 224);

|

||||

for (size_t i = 0; i < inputs_data.size(); ++i) {

|

||||

inputs_data[i] = std::rand() % 1000 / 1000.0f;

|

||||

}

|

||||

input_tensors[0].SetExternalData({1, 3, 224, 224}, fd::FDDataType::FP32, inputs_data.data());

|

||||

|

||||

//get input name

|

||||

input_tensors[0].name = info.name;

|

||||

|

||||

runtime->Infer(input_tensors, &output_tensors);

|

||||

|

||||

output_tensors[0].PrintInfo();

|

||||

return 0;

|

||||

}

|

||||

```

|

||||

加载完成,会输出提示如下,说明初始化的后端,以及运行的硬件设备

|

||||

```

|

||||

[INFO] fastdeploy/fastdeploy_runtime.cc(283)::Init Runtime initialized with Backend::OrtBackend in device Device::CPU.

|

||||

```

|

||||

|

||||

## 3. 准备CMakeLists.txt

|

||||

|

||||

FastDeploy中包含多个依赖库,直接采用`g++`或编译器编译较为繁杂,推荐使用cmake进行编译配置。示例配置如下,

|

||||

|

||||

```cmake

|

||||

PROJECT(runtime_demo C CXX)

|

||||

CMAKE_MINIMUM_REQUIRED (VERSION 3.12)

|

||||

|

||||

# 指定下载解压后的fastdeploy库路径

|

||||

option(FASTDEPLOY_INSTALL_DIR "Path of downloaded fastdeploy sdk.")

|

||||

|

||||

include(${FASTDEPLOY_INSTALL_DIR}/FastDeploy.cmake)

|

||||

|

||||

# 添加FastDeploy依赖头文件

|

||||

include_directories(${FASTDEPLOY_INCS})

|

||||

|

||||

add_executable(runtime_demo ${PROJECT_SOURCE_DIR}/infer_onnx_openvino.cc)

|

||||

# 添加FastDeploy库依赖

|

||||

target_link_libraries(runtime_demo ${FASTDEPLOY_LIBS})

|

||||

```

|

||||

|

||||

## 4. 编译可执行程序

|

||||

|

||||

打开命令行终端,进入`infer_paddle_onnxruntime.cc`和`CMakeLists.txt`所在的目录,执行如下命令

|

||||

|

||||

```bash

|

||||

mkdir build & cd build

|

||||

cmake .. -DFASTDEPLOY_INSTALL_DIR=$fastdeploy_cpp_sdk

|

||||

make -j

|

||||

```

|

||||

|

||||

```fastdeploy_cpp_sdk``` 为FastDeploy C++部署库路径

|

||||

|

||||

编译完成后,使用如下命令执行可得到预测结果

|

||||

```bash

|

||||

./runtime_demo

|

||||

```

|

||||

执行时如提示`error while loading shared libraries: libxxx.so: cannot open shared object file: No such file...`,说明程序执行时没有找到FastDeploy的库路径,可通过执行如下命令,将FastDeploy的库路径添加到环境变量之后,重新执行二进制程序。

|

||||

```bash

|

||||

source /Path/to/fastdeploy_cpp_sdk/fastdeploy_init.sh

|

||||

```

|

||||

|

||||

本示例代码在各平台(Windows/Linux/Mac)上通用,但编译过程仅支持(Linux/Mac),Windows上使用msbuild进行编译,具体使用方式参考[Windows平台使用FastDeploy C++ SDK](../../../docs/cn/faq/use_sdk_on_windows.md)

|

||||

|

||||

## 其它文档

|

||||

|

||||

- [Runtime Python 示例](../python)

|

||||

- [切换模型推理的硬件和后端](../../../docs/cn/faq/how_to_change_backend.md)

|

||||

@@ -1,13 +1,14 @@

|

||||

English | [简体中文](README_CN.md)

|

||||

# Python推理

|

||||

|

||||

在运行demo前,需确认以下两个步骤

|

||||

Before running demo, the following two steps need to be confirmed:

|

||||

|

||||

- 1. 软硬件环境满足要求,参考[FastDeploy环境要求](../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

- 2. FastDeploy Python whl包安装,参考[FastDeploy Python安装](../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

- 1. Hardware and software environment meets the requirements. Please refer to [Environment requirements for FastDeploy](../../../docs/en/build_and_install/download_prebuilt_libraries.md).

|

||||

- 2. Install FastDeploy Python whl package, please refer to [FastDeploy Python Installation](../../../docs/cn/build_and_install/download_prebuilt_libraries.md).

|

||||

|

||||

本文档以 PaddleClas 分类模型 MobileNetV2 为例展示 CPU 上的推理示例

|

||||

This document shows an inference example on the CPU using the PaddleClas classification model MobileNetV2 as an example.

|

||||

|

||||

## 1. 获取模型

|

||||

## 1. Obtaining the model

|

||||

|

||||

``` python

|

||||

import fastdeploy as fd

|

||||

@@ -16,7 +17,7 @@ model_url = "https://bj.bcebos.com/fastdeploy/models/mobilenetv2.tgz"

|

||||

fd.download_and_decompress(model_url, path=".")

|

||||

```

|

||||

|

||||

## 2. 配置后端

|

||||

## 2. Backend Configuration

|

||||

|

||||

``` python

|

||||

option = fd.RuntimeOption()

|

||||

@@ -24,30 +25,30 @@ option = fd.RuntimeOption()

|

||||

option.set_model_path("mobilenetv2/inference.pdmodel",

|

||||

"mobilenetv2/inference.pdiparams")

|

||||

|

||||

# **** CPU 配置 ****

|

||||

# **** CPU Configuration ****

|

||||

option.use_cpu()

|

||||

option.use_ort_backend()

|

||||

option.set_cpu_thread_num(12)

|

||||

|

||||

# 初始化构造runtime

|

||||

# Initialise runtime

|

||||

runtime = fd.Runtime(option)

|

||||

|

||||

# 获取模型输入名

|

||||

# Get model input name

|

||||

input_name = runtime.get_input_info(0).name

|

||||

|

||||

# 构造随机数据进行推理

|

||||

# Constructing random data for inference

|

||||

results = runtime.infer({

|

||||

input_name: np.random.rand(1, 3, 224, 224).astype("float32")

|

||||

})

|

||||

|

||||

print(results[0].shape)

|

||||

```

|

||||

加载完成,会输出提示如下,说明初始化的后端,以及运行的硬件设备

|

||||

When loading is complete, you will get the following output information indicating the initialized backend and the hardware devices.

|

||||

```

|

||||

[INFO] fastdeploy/fastdeploy_runtime.cc(283)::Init Runtime initialized with Backend::OrtBackend in device Device::CPU.

|

||||

```

|

||||

|

||||

## 其它文档

|

||||

## Other Documents

|

||||

|

||||

- [Runtime C++ 示例](../cpp)

|

||||

- [切换模型推理的硬件和后端](../../../docs/cn/faq/how_to_change_backend.md)

|

||||

- [A C++ example for Runtime C++](../cpp)

|

||||

- [Switching hardware and backend for model inference](../../../docs/en/faq/how_to_change_backend.md)

|

||||

|

||||

54

examples/runtime/python/README_CN.md

Normal file

54

examples/runtime/python/README_CN.md

Normal file

@@ -0,0 +1,54 @@

|

||||

简体中文 | [English](README.md)

|

||||

# Python推理

|

||||

|

||||

在运行demo前,需确认以下两个步骤

|

||||

|

||||

- 1. 软硬件环境满足要求,参考[FastDeploy环境要求](../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

- 2. FastDeploy Python whl包安装,参考[FastDeploy Python安装](../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

|

||||

本文档以 PaddleClas 分类模型 MobileNetV2 为例展示 CPU 上的推理示例

|

||||

|

||||

## 1. 获取模型

|

||||

|

||||

``` python

|

||||

import fastdeploy as fd

|

||||

|

||||

model_url = "https://bj.bcebos.com/fastdeploy/models/mobilenetv2.tgz"

|

||||

fd.download_and_decompress(model_url, path=".")

|

||||

```

|

||||

|

||||

## 2. 配置后端

|

||||

|

||||

``` python

|

||||

option = fd.RuntimeOption()

|

||||

|

||||

option.set_model_path("mobilenetv2/inference.pdmodel",

|

||||

"mobilenetv2/inference.pdiparams")

|

||||

|

||||

# **** CPU 配置 ****

|

||||

option.use_cpu()

|

||||

option.use_ort_backend()

|

||||

option.set_cpu_thread_num(12)

|

||||

|

||||

# 初始化构造runtime

|

||||

runtime = fd.Runtime(option)

|

||||

|

||||

# 获取模型输入名

|

||||

input_name = runtime.get_input_info(0).name

|

||||

|

||||

# 构造随机数据进行推理

|

||||

results = runtime.infer({

|

||||

input_name: np.random.rand(1, 3, 224, 224).astype("float32")

|

||||

})

|

||||

|

||||

print(results[0].shape)

|

||||

```

|

||||

加载完成,会输出提示如下,说明初始化的后端,以及运行的硬件设备

|

||||

```

|

||||

[INFO] fastdeploy/fastdeploy_runtime.cc(283)::Init Runtime initialized with Backend::OrtBackend in device Device::CPU.

|

||||

```

|

||||

|

||||

## 其它文档

|

||||

|

||||

- [Runtime C++ 示例](../cpp)

|

||||

- [切换模型推理的硬件和后端](../../../docs/cn/faq/how_to_change_backend.md)

|

||||

@@ -35,7 +35,7 @@ tar xvfz ernie-3.0-medium-zh-afqmc.tgz

|

||||

# GPU Inference

|

||||

./seq_cls_infer_demo --device gpu --model_dir ernie-3.0-medium-zh-afqmc

|

||||

|

||||

# KunlunXin XPU 推理

|

||||

# KunlunXin XPU Inference

|

||||

./seq_cls_infer_demo --device kunlunxin --model_dir ernie-3.0-medium-zh-afqmc

|

||||

```

|

||||

The result returned after running is as follows:

|

||||

|

||||

@@ -30,18 +30,18 @@ mv msra_ner_pruned_infer_model/float32.pdiparams models/ernie_tokencls_model/1/m

|

||||

After download and move, the models directory of the classification tasks is as follows:

|

||||

```

|

||||

models

|

||||

├── ernie_seqcls # 分类任务的pipeline

|

||||

├── ernie_seqcls # Pipeline for classification task

|

||||

│ ├── 1

|

||||

│ └── config.pbtxt # 通过这个文件组合前后处理和模型推理

|

||||

├── ernie_seqcls_model # 分类任务的模型推理

|

||||

│ └── config.pbtxt # Combine pre and post processing and model inference

|

||||

├── ernie_seqcls_model # Model inference for classification task

|

||||

│ ├── 1

|

||||

│ │ └── model.onnx

|

||||

│ └── config.pbtxt

|

||||

├── ernie_seqcls_postprocess # 分类任务后处理

|

||||

├── ernie_seqcls_postprocess # Post-processing of classification task

|

||||

│ ├── 1

|

||||

│ │ └── model.py

|

||||

│ └── config.pbtxt

|

||||

└── ernie_tokenizer # 预处理分词

|

||||

└── ernie_tokenizer # Pre-processing splitting

|

||||

├── 1

|

||||

│ └── model.py

|

||||

└── config.pbtxt

|

||||

@@ -63,9 +63,9 @@ docker run -it --net=host --name fastdeploy_server --shm-size="1g" -v /path/ser

|

||||

The serving directory contains the configuration to start the pipeline service and the code to send the prediction request, including

|

||||

|

||||

```

|

||||

models # 服务化启动需要的模型仓库,包含模型和服务配置文件

|

||||

seq_cls_rpc_client.py # 新闻分类任务发送pipeline预测请求的脚本

|

||||

token_cls_rpc_client.py # 序列标注任务发送pipeline预测请求的脚本

|

||||

models # Model repository needed for serving startup, containing model and service configuration files

|

||||

seq_cls_rpc_client.py # Script for sending pipeline prediction requests for news classification task

|

||||

token_cls_rpc_client.py # Script for sequence annotation task to send pipeline prediction requests

|

||||

```

|

||||

|

||||

*Attention*:Attention: When starting the service, each python backend process of Server requests 64M memory by default, and the docker started by default cannot start more than one python backend node. There are two solutions:

|

||||

@@ -76,13 +76,13 @@ token_cls_rpc_client.py # 序列标注任务发送pipeline预测请求的脚

|

||||

### Classification Task

|

||||

Execute the following command in the container to start the service:

|

||||

```

|

||||

# 默认启动models下所有模型

|

||||

# Enable all models by default

|

||||

fastdeployserver --model-repository=/models

|

||||

|

||||

# 可通过参数只启动分类任务

|

||||

# You can only enable classification task via parameters

|

||||

fastdeployserver --model-repository=/models --model-control-mode=explicit --load-model=ernie_seqcls

|

||||

```

|

||||

输出打印如下:

|

||||

The output is:

|

||||

```

|

||||

I1019 09:41:15.375496 2823 model_repository_manager.cc:1183] successfully loaded 'ernie_tokenizer' version 1

|

||||

I1019 09:41:15.375987 2823 model_repository_manager.cc:1022] loading: ernie_seqcls:1

|

||||

@@ -109,7 +109,7 @@ Execute the following command in the container to start the sequence labelling s

|

||||

```

|

||||

fastdeployserver --model-repository=/models --model-control-mode=explicit --load-model=ernie_tokencls --backend-config=python,shm-default-byte-size=10485760

|

||||

```

|

||||

输出打印如下:

|

||||

The output is:

|

||||

```

|

||||

I1019 09:41:15.375496 2823 model_repository_manager.cc:1183] successfully loaded 'ernie_tokenizer' version 1

|

||||

I1019 09:41:15.375987 2823 model_repository_manager.cc:1022] loading: ernie_seqcls:1

|

||||

@@ -148,7 +148,7 @@ Attention: The proxy need turning off when executing client requests. The ip add

|

||||

```

|

||||

python seq_cls_grpc_client.py

|

||||

```

|

||||

输出打印如下:

|

||||

The output is:

|

||||

```

|

||||

{'label': array([5, 9]), 'confidence': array([0.6425664 , 0.66534853], dtype=float32)}

|

||||

{'label': array([4]), 'confidence': array([0.53198355], dtype=float32)}

|

||||

@@ -160,7 +160,7 @@ Attention: The proxy need turning off when executing client requests. The ip add

|

||||

```

|

||||

python token_cls_grpc_client.py

|

||||

```

|

||||

输出打印如下:

|

||||

The output is:

|

||||

```

|

||||

input data: 北京的涮肉,重庆的火锅,成都的小吃都是极具特色的美食。

|

||||

The model detects all entities:

|

||||

@@ -173,5 +173,5 @@ entity: 玛雅 label: LOC pos: [2, 3]

|

||||

entity: 华夏 label: LOC pos: [14, 15]

|

||||

```

|

||||

|

||||

## 配置修改

|

||||

## Configuration Modification

|

||||

The current classification task (ernie_seqcls_model/config.pbtxt) is by default configured to run the OpenVINO engine on CPU; the sequence labelling task is by default configured to run the Paddle engine on GPU. If you want to run on CPU/GPU or other inference engines, you should modify the configuration. please refer to the [configuration document.](../../../../serving/docs/zh_CN/model_configuration.md)

|

||||

|

||||

@@ -1 +1,2 @@

|

||||

本目录存放ERNIE 3.0模型

|

||||

English | [简体中文](README_CN.md)

|

||||

This directory contains ERNIE 3.0 models.

|

||||

@@ -0,0 +1,2 @@

|

||||

[English](README.md) | 简体中文

|

||||

本目录存放ERNIE 3.0模型

|

||||

@@ -1 +1,2 @@

|

||||

本目录存放ERNIE 3.0模型

|

||||

English | [简体中文](README_CN.md)

|

||||

This directory contains ERNIE 3.0 models

|

||||

|

||||

@@ -0,0 +1,2 @@

|

||||

[English](README.md) | 简体中文

|

||||

本目录存放ERNIE 3.0模型

|

||||

@@ -6,8 +6,8 @@ This directory provides `infer.cc` quickly complete the example on CPU/GPU by [U

|

||||

|

||||

Before deployment, two steps need to be confirmed.

|

||||

|

||||

- 1. The software and hardware environment meets the requirements. Please refer to [FastDeploy环境要求](../../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

- 2. Download precompiled deployment library and samples code based on the develop environment. Please refer to [FastDeploy预编译库](../../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

- 1. The software and hardware environment meets the requirements. Please refer to [Environment requirements for FastDeploy](../../../../docs/en/build_and_install/download_prebuilt_libraries.md).

|

||||

- 2. Download precompiled deployment library and samples code based on the develop environment. Please refer to [FastDeploy pre-compiled libraries](../../../../docs/en/build_and_install/download_prebuilt_libraries.md).

|

||||

|

||||

## A Quick Start

|

||||

Take uie-base model inference on Linux as an example, execute the following command in this directory to complete the compilation test. FastDeploy version 0.7.0 or above is required to support this model (x.x.x>=0.7.0).

|

||||

@@ -15,7 +15,7 @@ Take uie-base model inference on Linux as an example, execute the following comm

|

||||

```

|

||||

mkdir build

|

||||

cd build

|

||||

# Download FastDeploy precompiled library. Users can choose proper versions in the `FastDeploy预编译库` mentioned above.

|

||||

# Download FastDeploy precompiled library. Users can choose proper versions in the `FastDeploy pre-compiled libraries` mentioned above.

|

||||

wget https://bj.bcebos.com/fastdeploy/release/cpp/fastdeploy-linux-x64-x.x.x.tgz

|

||||

tar xvf fastdeploy-linux-x64-x.x.x.tgz

|

||||

cmake .. -DFASTDEPLOY_INSTALL_DIR=${PWD}/fastdeploy-linux-x64-x.x.x

|

||||

@@ -73,10 +73,10 @@ std::string param_path = model_dir + sep + "inference.pdiparams";

|

||||

std::string vocab_path = model_dir + sep + "vocab.txt";

|

||||

using fastdeploy::text::SchemaNode;

|

||||

using fastdeploy::text::UIEResult;

|

||||

// 定义uie result对象

|

||||

// Define the uie result object

|

||||

std::vector<std::unordered_map<std::string, std::vector<UIEResult>>> results;

|

||||

|

||||

// 初始化UIE模型

|

||||

// Initialize UIE model

|

||||

auto predictor =

|

||||

fastdeploy::text::UIEModel(model_path, param_path, vocab_path, 0.5, 128,

|

||||

{"时间", "选手", "赛事名称"}, option);

|

||||

@@ -94,7 +94,7 @@ predictor.Predict({"2月8日上午北京冬奥会自由式滑雪女子大跳台

|

||||

std::cout << results << std::endl;

|

||||

results.clear();

|

||||

|

||||

// 示例输出

|

||||

// An output example

|

||||

// The result:

|

||||

// 赛事名称:

|

||||

// text: 北京冬奥会自由式滑雪女子大跳台决赛

|

||||

@@ -128,7 +128,7 @@ predictor.Predict({"(右肝肿瘤)肝细胞性肝癌(II-"

|

||||

std::cout << results << std::endl;

|

||||

results.clear();

|

||||

|

||||

// 示例输出

|

||||

// An output example

|

||||

// The result:

|

||||

// 脉管内癌栓分级:

|

||||

// text: M0级

|

||||

@@ -174,7 +174,7 @@ predictor.Predict(

|

||||

std::cout << results << std::endl;

|

||||

results.clear();

|

||||

|

||||

// 示例输出

|

||||

// An output example

|

||||

// The result:

|

||||

// 竞赛名称:

|

||||

// text: 2022语言与智能技术竞赛

|

||||

@@ -233,7 +233,7 @@ predictor.Predict(

|

||||

std::cout << results << std::endl;

|

||||

results.clear();

|

||||

|

||||

// 示例输出

|

||||

// An output example

|

||||

// The result:

|

||||

// 地震触发词:

|

||||

// text: 地震

|

||||

@@ -287,7 +287,7 @@ predictor.Predict(

|

||||

std::cout << results << std::endl;

|

||||

results.clear();

|

||||

|

||||

// 示例输出

|

||||

// An output example

|

||||

// The result:

|

||||

// 评价维度:

|

||||

// text: 店面

|

||||

@@ -332,7 +332,7 @@ predictor.Predict({"这个产品用起来真的很流畅,我非常喜欢"}, &r

|

||||

std::cout << results << std::endl;

|

||||

results.clear();

|

||||

|

||||

// 示例输出

|

||||

// An output example

|

||||

// The result:

|

||||

// 情感倾向[正向,负向]:

|

||||

// text: 正向

|

||||

@@ -355,7 +355,7 @@ predictor.Predict({"北京市海淀区人民法院\n民事判决书\n(199x)"

|

||||

&results);

|

||||

std::cout << results << std::endl;

|

||||

results.clear();

|

||||

// 示例输出

|

||||

// An output example

|

||||

// The result:

|

||||

// 被告:

|

||||

// text: B公司

|

||||

@@ -433,7 +433,7 @@ UIEModel(

|

||||

SchemaLanguage schema_language = SchemaLanguage::ZH);

|

||||

```

|

||||

|

||||

UIEModel loading and initialization. Among them, model_file, params_file are Paddle inference documents exported by trained models. Please refer to [模型导出](https://github.com/PaddlePaddle/PaddleNLP/blob/develop/model_zoo/uie/README.md#%E6%A8%A1%E5%9E%8B%E9%83%A8%E7%BD%B2)。

|

||||

UIEModel loading and initialization. Among them, model_file, params_file are Paddle inference documents exported by trained models. Please refer to [Model export](https://github.com/PaddlePaddle/PaddleNLP/blob/develop/model_zoo/uie/README.md#%E6%A8%A1%E5%9E%8B%E9%83%A8%E7%BD%B2).

|

||||

|

||||

**Parameter**

|

||||

|

||||

@@ -472,8 +472,8 @@ void Predict(

|

||||

|

||||

## Related Documents

|

||||

|

||||

[UIE模型详细介绍](https://github.com/PaddlePaddle/PaddleNLP/blob/develop/model_zoo/uie/README.md)

|

||||

[Details for UIE model](https://github.com/PaddlePaddle/PaddleNLP/blob/develop/model_zoo/uie/README.md)

|

||||

|

||||

[UIE模型导出方法](https://github.com/PaddlePaddle/PaddleNLP/blob/develop/model_zoo/uie/README.md#%E6%A8%A1%E5%9E%8B%E9%83%A8%E7%BD%B2)

|

||||

[How to export a UIE model](https://github.com/PaddlePaddle/PaddleNLP/blob/develop/model_zoo/uie/README.md#%E6%A8%A1%E5%9E%8B%E9%83%A8%E7%BD%B2)

|

||||

|

||||

[UIE Python部署方法](../python/README.md)

|

||||

[UIE Python deployment](../python/README.md)

|

||||

|

||||

@@ -4,8 +4,8 @@ English | [简体中文](README_CN.md)

|

||||

|

||||

Before deployment, two steps need to be confirmed.

|

||||

|

||||

- 1. The software and hardware environment meets the requirements. Please refer to [FastDeploy环境要求](../../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

- 2. FastDeploy Python whl pacakage needs installation. Please refer to [FastDeploy Python安装](../../../../docs/cn/build_and_install/download_prebuilt_libraries.md)

|

||||

- 1. The software and hardware environment meets the requirements. Please refer to [Environment requirements for FastDeploy](../../../../docs/en/build_and_install/download_prebuilt_libraries.md)

|

||||

- 2. FastDeploy Python whl pacakage needs installation. Please refer to [FastDeploy Python Installation](../../../../docs/en/build_and_install/download_prebuilt_libraries.md)

|

||||

|

||||

This directory provides an example that `infer.py` quickly complete CPU deployment conducted by the UIE model with OpenVINO acceleration on CPU/GPU and CPU.

|

||||

|

||||

@@ -67,7 +67,7 @@ The extraction schema: ['肿瘤的大小', '肿瘤的个数', '肝癌级别', '

|

||||

|

||||

### Description of command line arguments

|

||||

|

||||

`infer.py` 除了以上示例的命令行参数,还支持更多命令行参数的设置。以下为各命令行参数的说明。

|

||||

`infer.py` supports more command line parameters than the above example. The following is a description of each command line parameter.

|

||||

|

||||

| Argument | Description |

|

||||

|----------|--------------|

|

||||

@@ -95,7 +95,7 @@ vocab_path = os.path.join(model_dir, "vocab.txt")

|

||||

runtime_option = fastdeploy.RuntimeOption()

|

||||

schema = ["时间", "选手", "赛事名称"]

|

||||

|

||||

# 初始化UIE模型

|

||||

# Initialise UIE model

|

||||

uie = UIEModel(

|

||||

model_path,

|

||||

param_path,

|

||||

@@ -116,7 +116,7 @@ The initialization stage sets the schema```["time", "player", "event name"]``` t

|

||||

["2月8日上午北京冬奥会自由式滑雪女子大跳台决赛中中国选手谷爱凌以188.25分获得金牌!"], return_dict=True)

|

||||

>>> pprint(results)

|

||||

|

||||

# 示例输出

|

||||

# An output example

|

||||

# [{'时间': {'end': 6,

|

||||

# 'probability': 0.9857379794120789,

|

||||

# 'start': 0,

|

||||

@@ -145,7 +145,7 @@ For example, if the target entity types are "肿瘤的大小", "肿瘤的个数"

|

||||

return_dict=True)

|

||||

>>> pprint(results)

|

||||

|

||||

# 示例输出

|

||||

# An output example

|

||||

# [{'肝癌级别': {'end': 20,

|

||||

# 'probability': 0.9243271350860596,

|

||||