Mirror of https://github.com/PaddlePaddle/FastDeploy.git (synced 2025-10-06 00:57:33 +08:00)

[Model] add Paddle.js web demo (#392)

* add application include paddle.js web demo and xcx
* cp PR #5
* add readme
* fix comments and link
* fix xcx readme
* fix Task 1
* fix bugs
* refine readme
* delete ocrxcx readme
* refine readme
* fix bugs
* delete old readme
* 200px to 300px
* revert 200px to 300px

Co-authored-by: Jason <jiangjiajun@baidu.com>


35

examples/application/js/README.md

Normal file

@@ -0,0 +1,35 @@

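# Front-End AI Applications

Rapid advances in artificial intelligence have driven industrial upgrades in computer vision and natural language processing. Meanwhile, steadily growing compute on PCs and mobile devices, continued iteration in model compression, and a stream of new application needs have laid a solid foundation for deploying AI models in the browser to enable front-end intelligence.

To address the difficulty of deploying deep learning models on the front end, Baidu open-sourced Paddle.js, a front-end deep learning deployment framework that makes it easy to deploy deep learning models in front-end projects.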

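## Introduction to Paddle.js

[Paddle.js](https://github.com/PaddlePaddle/Paddle.js) is the web-oriented subproject of Baidu's `PaddlePaddle`: an open-source deep learning framework that runs in the browser. `Paddle.js` loads `PaddlePaddle` models that have been exported from dynamic to static graph form and then converted by its model conversion tool, `paddlejs-converter`, into a browser-friendly format suited to online inference. `Paddle.js` runs in browsers that support `WebGL/WebGPU/WebAssembly`, and also in the Baidu Mini Program and WeChat Mini Program environments.

In short, Paddle.js lets us ship AI features in front-end scenarios such as browsers and mini programs, including but not limited to object detection, image segmentation, OCR, and item classification.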

## Using the Web Demo

To run the official demos directly in the browser, see the [documentation](./web_demo/README.md).

|Demo|Web demo directory|Preview|
|-|-|-|
|Object detection|[ScrewDetection/FaceDetection](./web_demo/demo/src/pages/cv/detection/)| <img src="https://user-images.githubusercontent.com/26592129/196874536-b7fa2c0a-d71f-4271-8c40-f9088bfad3c9.png" height="200px">|
|Portrait segmentation with background replacement|[HumanSeg](./web_demo/demo/src/pages/cv/segmentation/HumanSeg)|<img src="https://user-images.githubusercontent.com/26592129/196874452-4ef2e770-fbb3-4a35-954b-f871716d6669.png" height="200px">|
|Object recognition|[GestureRecognition/ItemIdentification](./web_demo/demo/src/pages/cv/recognition/)|<img src="https://user-images.githubusercontent.com/26592129/196874416-454e6bb0-4ebd-4b51-a88a-8c40614290ae.png" height="200px">|
|OCR|[TextDetection/TextRecognition](./web_demo/demo/src/pages/cv/ocr/)|<img src="https://user-images.githubusercontent.com/26592129/196874354-1b5eecb0-f273-403c-aa6c-4463bf6d78db.png" height="200px">|

## Using the WeChat Mini Program Demo

To run the official demos in a WeChat Mini Program, see the [documentation](./mini_program/README.md).

|Name|Directory|
|-|-|
|OCR text detection| [ocrdetectXcx](./mini_program/ocrdetectXcx/) |
|OCR text recognition| [ocrXcx](./mini_program/ocrXcx/) |
|Object detection| coming soon |
|Image segmentation| coming soon |
|Item classification| coming soon |

126

examples/application/js/mini_program/README.md

Normal file

@@ -0,0 +1,126 @@

# Paddle.js WeChat Mini Program Demo

- [1. Introduction](#1)
- [2. Getting Started](#2)
  * [2.1 Prerequisites](#21)
  * [2.2 Startup Steps](#22)
  * [2.3 Results](#23)
- [3. Model Inference Pipeline](#3)
- [4. FAQ](#4)

<a name="1"></a>
## 1. Introduction

This directory contains mini program demos for text detection and text recognition. They use [Paddle.js](https://github.com/PaddlePaddle/Paddle.js) and the [Paddle.js WeChat Mini Program plugin](https://mp.weixin.qq.com/wxopen/plugindevdoc?appid=wx7138a7bb793608c3&token=956931339&lang=zh_CN) to detect text and draw its bounding boxes inside a mini program, using the compute of the user's own device.
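When recognition follows detection, the detected boxes are usually put into reading order first. The sketch below is adapted from `sorted_boxes` in `ocrXcx/pages/index/index.js`: it sorts boxes by the y coordinate of their first corner, then swaps neighbours that sit on roughly the same line so that the left one comes first. The name `sortBoxesReadingOrder` and the `lineTolerance` parameter are illustrative and not part of the demo.

```javascript
// Each box is an array of four [x, y] corner points; box[0] is treated as the top-left corner.
function sortBoxesReadingOrder(boxes, lineTolerance = 10) {
    // Copy so the caller's array is left untouched, then sort top to bottom.
    const sorted = boxes.slice().sort((a, b) => a[0][1] - b[0][1]);
    // Single pass over neighbours: if two boxes share a line, put the left one first.
    for (let i = 0; i < sorted.length - 1; i++) {
        const sameLine = Math.abs(sorted[i + 1][0][1] - sorted[i][0][1]) < lineTolerance;
        if (sameLine && sorted[i + 1][0][0] < sorted[i][0][0]) {
            [sorted[i], sorted[i + 1]] = [sorted[i + 1], sorted[i]];
        }
    }
    return sorted;
}

const sampleBoxes = [
    [[200, 50], [300, 50], [300, 78], [200, 78]],   // right-hand box on the first line
    [[10, 52], [110, 52], [110, 80], [10, 80]],     // left-hand box on the first line
    [[10, 120], [110, 120], [110, 148], [10, 148]]  // box on the second line
];
const ordered = sortBoxesReadingOrder(sampleBoxes);
console.log(ordered.map(box => box[0]));
```

Because only one neighbour pass is made, three or more boxes on the same line may still come out partly unordered; a full sort by line and then by x would be a stricter alternative.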

<a name="2"></a>
## 2. Getting Started

<a name="21"></a>
### 2.1 Prerequisites
* [Apply for a WeChat Mini Program account](https://mp.weixin.qq.com/)
* [WeChat Developer Tools](https://developers.weixin.qq.com/miniprogram/dev/devtools/download.html)
* Front-end development environment: node, npm
* Configure the server domain in the Mini Program admin console, or enable "Do not verify valid domain names" in the developer tools

For details, see: https://mp.weixin.qq.com/wxamp/devprofile/get_profile?token=1132303404&lang=zh_CN

<a name="22"></a>
### 2.2 Startup Steps

#### **1. Clone the demo code**
```sh
git clone https://github.com/PaddlePaddle/FastDeploy
cd FastDeploy/examples/application/js/mini_program
```

#### **2. Enter the mini program directory and install dependencies**

```sh
# To run the text recognition demo, enter the ocrXcx directory
cd ./ocrXcx && npm install
# To run the text detection demo, enter the ocrdetectXcx directory instead
# cd ./ocrdetectXcx && npm install
```

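#### **3. Import the code into WeChat Developer Tools**
Open WeChat Developer Tools --> Import --> select the directory and fill in the required information

#### **4. Add the Paddle.js WeChat Mini Program plugin**
Mini Program admin console --> Settings --> Third-party settings --> Plugin management --> Add plugin --> search for `wx7138a7bb793608c3` and add it
[Reference documentation](https://developers.weixin.qq.com/miniprogram/dev/framework/plugin/using.html)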
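Besides being added in the admin console, the plugin also has to be declared in the mini program's `app.json`; the demo's `ocrXcx/app.json` does this under the `plugins` key with provider `wx7138a7bb793608c3`. As a rough aid, the sketch below checks a parsed `app.json` object for that declaration. The helper `findPluginAlias` is hypothetical and belongs to neither Paddle.js nor the WeChat tooling.

```javascript
// AppID of the Paddle.js plugin, as given in the step above.
const PADDLEJS_PROVIDER = 'wx7138a7bb793608c3';

// Hypothetical helper: return the alias under which the plugin is declared, or null.
function findPluginAlias(appConfig, provider = PADDLEJS_PROVIDER) {
    const plugins = appConfig.plugins || {};
    for (const [alias, declaration] of Object.entries(plugins)) {
        if (declaration && declaration.provider === provider) {
            return alias;
        }
    }
    return null;
}

// Mirrors the structure of ocrXcx/app.json in this commit.
const appConfig = {
    pages: ['pages/index/index'],
    plugins: {
        'paddlejs-plugin': { version: '2.0.1', provider: 'wx7138a7bb793608c3' }
    },
    sitemapLocation: 'sitemap.json'
};

console.log(findPluginAlias(appConfig));
```

The alias found here is the name the demo code passes to `requirePlugin('paddlejs-plugin')`.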

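#### **5. Build dependencies**
In the developer tools menu bar, click: Tools --> Build npm

Reason: the node_modules directory does not take part in compilation, upload, or packaging. To use npm packages, a mini program must go through the "Build npm" step, which generates a miniprogram_npm directory holding the built and bundled npm packages. These are the packages the mini program actually uses.
[Reference documentation](https://developers.weixin.qq.com/miniprogram/dev/devtools/npm.html)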

|

||||

|

||||

<a name="23"></a>

|

||||

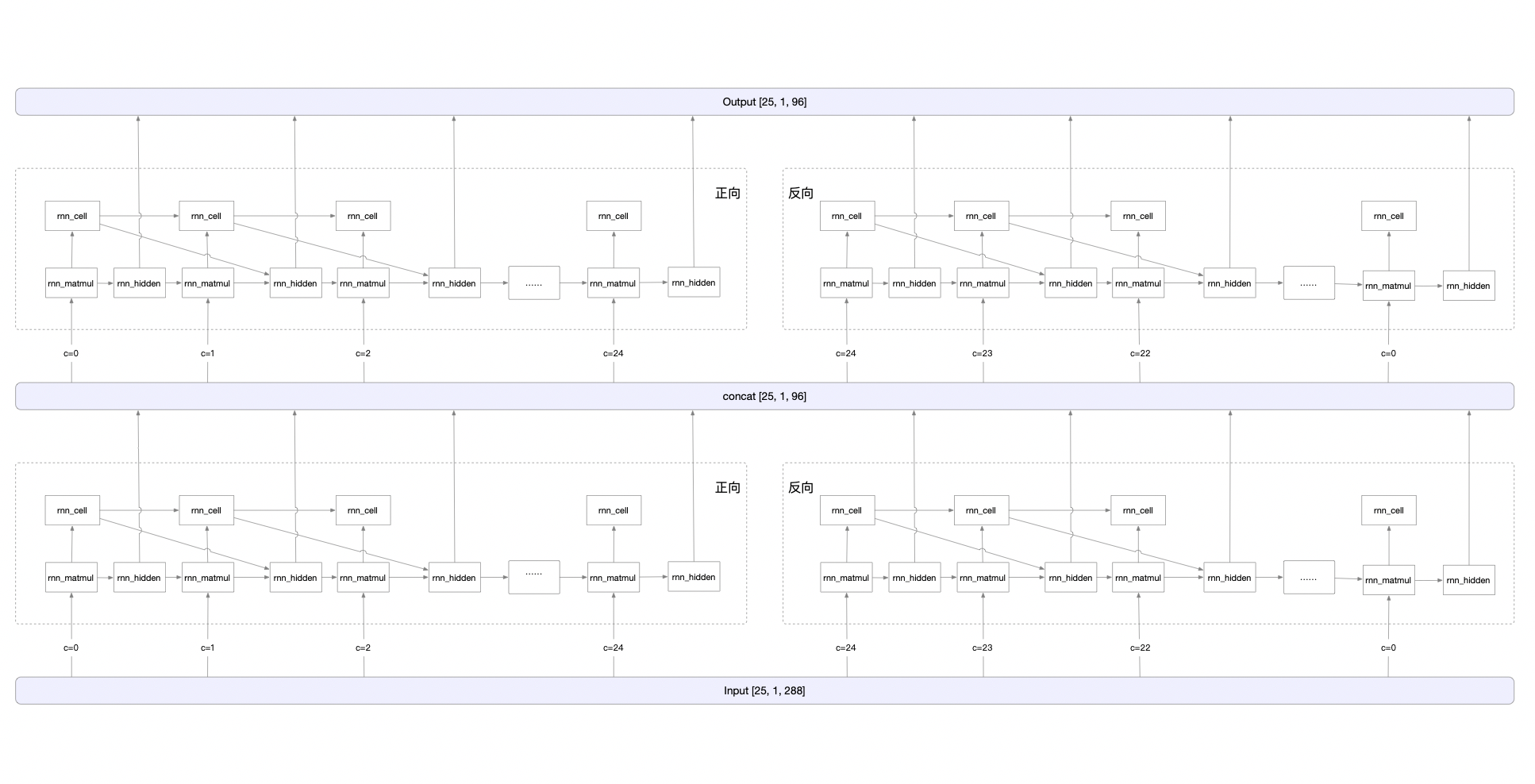

### 2.3 效果展示

|

||||

|

||||

<img src="https://user-images.githubusercontent.com/43414102/157648579-cdbbee61-9866-4364-9edd-a97ac0eda0c1.png" width="300px">

<a name="3"></a>
## 3. Model Inference Pipeline

```typescript
// Import paddlejs and paddlejs-plugin, then register the mini program environment and a suitable backend
import * as paddlejs from '@paddlejs/paddlejs-core';
import '@paddlejs/paddlejs-backend-webgl';
const plugin = requirePlugin('paddlejs-plugin');
plugin.register(paddlejs, wx);

// Initialize the inference engine
const runner = new paddlejs.Runner({modelPath, feedShape, mean, std});
await runner.init();

// Get the image data
wx.canvasGetImageData({
    canvasId: canvasId,
    x: 0,
    y: 0,
    width: canvas.width,
    height: canvas.height,
    success(res) {
        // Run inference
        runner.predict({
            data: res.data,
            width: canvas.width,
            height: canvas.height,
        }, function (data) {
            // Get the inference result
            console.log(data)
        });
    }
});
```
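Before the canvas pixels are read, the recognition demo scales the source image to fit the detection model's 960×960 input while keeping its aspect ratio. The sketch below restates that arithmetic from `getImageInfo` in `ocrXcx/pages/index/index.js` as a standalone function; the name `fitToSquare` and its `size` parameter are assumptions made for illustration.

```javascript
// Scale (width, height) to fit inside a size x size square, keeping the aspect ratio.
// Returns the scaled size (sw, sh) and the offsets (x, y) that centre it in the square.
function fitToSquare(width, height, size = 960) {
    let sw = size;
    let sh = size;
    let x = 0;
    let y = 0;
    if (height / width >= 1) {
        // Portrait or square: the height fills the square and the width shrinks.
        sw = Math.round(sh * width / height);
        x = Math.floor((size - sw) / 2);
    } else {
        // Landscape: the width fills the square and the height shrinks.
        sh = Math.round(sw * height / width);
        y = Math.floor((size - sh) / 2);
    }
    return { sw, sh, x, y };
}

console.log(fitToSquare(1920, 1080)); // landscape input
console.log(fitToSquare(600, 1200));  // portrait input
```

For a 1920×1080 image this yields a 960×540 drawing area offset 210 px from the top; for 600×1200 it yields 480×960 offset 240 px from the left.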

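<a name="4"></a>
## 4. FAQ
### 4.1 Error `Invalid context type [webgl2] for Canvas#getContext`

This can be ignored; it does not affect normal code execution or the demo's functionality.

### 4.2 No results shown in preview

Try debugging on a real device.

### 4.3 WeChat Developer Tools shows a black screen followed by a flood of errors

Restart WeChat Developer Tools.

### 4.4 Simulator and real-device results differ, or the simulator fails to detect text

Treat the real-device result as authoritative.
If the simulator fails to detect text, try making a trivial change to the code (such as adding or removing a line break) and click Compile again.

### 4.5 A "no response for a long time" prompt appears when debugging or running on a phone

Please keep waiting; model inference takes some time.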
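To judge how long "some time" is on a given device, inference can be timed. The sketch below wraps any promise-returning call and reports the elapsed milliseconds. `timed` is a hypothetical helper, and `fakePredict` merely stands in for a call such as `runner.predict(...)`, which the demo code awaits.

```javascript
// Hypothetical helper: run an async task and report how long it took.
async function timed(label, task) {
    const start = Date.now();
    const result = await task();
    const elapsedMs = Date.now() - start;
    console.log(`${label} took ${elapsedMs} ms`);
    return { result, elapsedMs };
}

// Stand-in for model inference; resolves with the input length after a short delay.
function fakePredict(input) {
    return new Promise(resolve => setTimeout(() => resolve(input.length), 50));
}

timed('predict', () => fakePredict([1, 2, 3])).then(({ result }) => {
    console.log('output size:', result);
});
```

Logging the duration of the first few predictions on the target phone gives a baseline for deciding whether a long wait is normal or a sign that something is stuck.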


||||

12

examples/application/js/mini_program/ocrXcx/app.js

Normal file

@@ -0,0 +1,12 @@

/* global wx, App */
import * as paddlejs from '@paddlejs/paddlejs-core';
import '@paddlejs/paddlejs-backend-webgl';
// eslint-disable-next-line no-undef
const plugin = requirePlugin('paddlejs-plugin');
plugin.register(paddlejs, wx);

App({
    globalData: {
        Paddlejs: paddlejs.Runner
    }
});

12

examples/application/js/mini_program/ocrXcx/app.json

Normal file

@@ -0,0 +1,12 @@

{
  "pages": [
    "pages/index/index"
  ],
  "plugins": {
    "paddlejs-plugin": {
      "version": "2.0.1",
      "provider": "wx7138a7bb793608c3"
    }
  },
  "sitemapLocation": "sitemap.json"
}

72

examples/application/js/mini_program/ocrXcx/package-lock.json

generated

Normal file

@@ -0,0 +1,72 @@

{
  "name": "paddlejs-demo",
  "version": "0.0.1",
  "lockfileVersion": 2,
  "requires": true,
  "packages": {
    "": {
      "name": "paddlejs-demo",
      "version": "0.0.1",
      "license": "ISC",
      "dependencies": {
        "@paddlejs/paddlejs-backend-webgl": "^1.2.0",
        "@paddlejs/paddlejs-core": "^2.1.18",
        "d3-polygon": "2.0.0",
        "js-clipper": "1.0.1",
        "number-precision": "1.5.2"
      }
    },
    "node_modules/@paddlejs/paddlejs-backend-webgl": {
      "version": "1.2.9",
      "resolved": "https://registry.npmjs.org/@paddlejs/paddlejs-backend-webgl/-/paddlejs-backend-webgl-1.2.9.tgz",
      "integrity": "sha512-cVDa0/Wbw2EyfsYqdYUPhFeqKsET79keEUWjyhYQmQkJfWg8j1qdR6yp7g6nx9qAGrqFvwuj1s0EqkYA1dok6A=="
    },
    "node_modules/@paddlejs/paddlejs-core": {
      "version": "2.2.0",
      "resolved": "https://registry.npmjs.org/@paddlejs/paddlejs-core/-/paddlejs-core-2.2.0.tgz",
      "integrity": "sha512-P3rPkF9fFHtq8uSte5gA7fJQwBNl9Ytsvj6aTcfQSsirnBO/HxMNu0gJyh7+lItvEtF92PR15eI0eOwJYfZDhQ=="
    },
    "node_modules/d3-polygon": {
      "version": "2.0.0",
      "resolved": "https://registry.npmjs.org/d3-polygon/-/d3-polygon-2.0.0.tgz",
      "integrity": "sha512-MsexrCK38cTGermELs0cO1d79DcTsQRN7IWMJKczD/2kBjzNXxLUWP33qRF6VDpiLV/4EI4r6Gs0DAWQkE8pSQ=="
    },
    "node_modules/js-clipper": {
      "version": "1.0.1",
      "resolved": "https://registry.npmjs.org/js-clipper/-/js-clipper-1.0.1.tgz",
      "integrity": "sha512-0XYAS0ZoCki5K0fWwj8j8ug4mgxHXReW3ayPbVqr4zXPJuIs2pyvemL1sALadsEiAywZwW5Ify1XfU4bNJvokg=="
    },
    "node_modules/number-precision": {
      "version": "1.5.2",
      "resolved": "https://registry.npmjs.org/number-precision/-/number-precision-1.5.2.tgz",
      "integrity": "sha512-q7C1ZW3FyjsJ+IpGB6ykX8OWWa5+6M+hEY0zXBlzq1Sq1IPY9GeI3CQ9b2i6CMIYoeSuFhop2Av/OhCxClXqag=="
    }
  },
  "dependencies": {
    "@paddlejs/paddlejs-backend-webgl": {
      "version": "1.2.9",
      "resolved": "https://registry.npmjs.org/@paddlejs/paddlejs-backend-webgl/-/paddlejs-backend-webgl-1.2.9.tgz",
      "integrity": "sha512-cVDa0/Wbw2EyfsYqdYUPhFeqKsET79keEUWjyhYQmQkJfWg8j1qdR6yp7g6nx9qAGrqFvwuj1s0EqkYA1dok6A=="
    },
    "@paddlejs/paddlejs-core": {
      "version": "2.2.0",
      "resolved": "https://registry.npmjs.org/@paddlejs/paddlejs-core/-/paddlejs-core-2.2.0.tgz",
      "integrity": "sha512-P3rPkF9fFHtq8uSte5gA7fJQwBNl9Ytsvj6aTcfQSsirnBO/HxMNu0gJyh7+lItvEtF92PR15eI0eOwJYfZDhQ=="
    },
    "d3-polygon": {
      "version": "2.0.0",
      "resolved": "https://registry.npmjs.org/d3-polygon/-/d3-polygon-2.0.0.tgz",
      "integrity": "sha512-MsexrCK38cTGermELs0cO1d79DcTsQRN7IWMJKczD/2kBjzNXxLUWP33qRF6VDpiLV/4EI4r6Gs0DAWQkE8pSQ=="
    },
    "js-clipper": {
      "version": "1.0.1",
      "resolved": "https://registry.npmjs.org/js-clipper/-/js-clipper-1.0.1.tgz",
      "integrity": "sha512-0XYAS0ZoCki5K0fWwj8j8ug4mgxHXReW3ayPbVqr4zXPJuIs2pyvemL1sALadsEiAywZwW5Ify1XfU4bNJvokg=="
    },
    "number-precision": {
      "version": "1.5.2",
      "resolved": "https://registry.npmjs.org/number-precision/-/number-precision-1.5.2.tgz",
      "integrity": "sha512-q7C1ZW3FyjsJ+IpGB6ykX8OWWa5+6M+hEY0zXBlzq1Sq1IPY9GeI3CQ9b2i6CMIYoeSuFhop2Av/OhCxClXqag=="
    }
  }
}

19

examples/application/js/mini_program/ocrXcx/package.json

Normal file

@@ -0,0 +1,19 @@

{
  "name": "paddlejs-demo",
  "version": "0.0.1",
  "description": "",
  "main": "app.js",
  "dependencies": {
    "@paddlejs/paddlejs-backend-webgl": "^1.2.0",
    "@paddlejs/paddlejs-core": "^2.1.18",
    "d3-polygon": "2.0.0",
    "js-clipper": "1.0.1",
    "number-precision": "1.5.2"
  },
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC"
}

Binary file not shown. Size: 222 KiB

578

examples/application/js/mini_program/ocrXcx/pages/index/index.js

Normal file

@@ -0,0 +1,578 @@

/* global wx, Page */
import * as paddlejs from '@paddlejs/paddlejs-core';
import '@paddlejs/paddlejs-backend-webgl';
import clipper from 'js-clipper';
import { divide, enableBoundaryChecking, plus } from 'number-precision';

import { recDecode } from 'recPostprocess.js';
// eslint-disable-next-line no-undef
const plugin = requirePlugin('paddlejs-plugin');
const Polygon = require('d3-polygon');

global.wasm_url = 'pages/index/wasm/opencv_js.wasm.br';
const CV = require('./wasm/opencv.js');

plugin.register(paddlejs, wx);

let DETSHAPE = 960;
let RECWIDTH = 320;
const RECHEIGHT = 32;

// Declare the canvases used for later image transforms; they are not bound yet
let canvas_det;
let canvas_rec;
let my_canvas;
let my_canvas_ctx;


const imgList = [
    'https://paddlejs.bj.bcebos.com/xcx/ocr.png'
];

// eslint-disable-next-line max-lines-per-function
const outputBox = (res) => {
    const thresh = 0.3;
    const box_thresh = 0.5;
    const max_candidates = 1000;
    const min_size = 3;
    const width = 960;
    const height = 960;
    const pred = res;
    const segmentation = [];
    pred.forEach(item => {
        segmentation.push(item > thresh ? 255 : 0);
    });

    function get_mini_boxes(contour) {
        // Compute the minimum-area bounding rectangle
        const bounding_box = CV.minAreaRect(contour);
        const points = [];
        const mat = new CV.Mat();
        // Get the coordinates of the rectangle's four corners
        CV.boxPoints(bounding_box, mat);
        for (let i = 0; i < mat.data32F.length; i += 2) {
            const arr = [];
            arr[0] = mat.data32F[i];
            arr[1] = mat.data32F[i + 1];
            points.push(arr);
        }

        function sortNumber(a, b) {
            return a[0] - b[0];
        }
        points.sort(sortNumber);
        let index_1 = 0;
        let index_2 = 1;
        let index_3 = 2;
        let index_4 = 3;
        if (points[1][1] > points[0][1]) {
            index_1 = 0;
            index_4 = 1;
        }
        else {
            index_1 = 1;
            index_4 = 0;
        }

        if (points[3][1] > points[2][1]) {
            index_2 = 2;
            index_3 = 3;
        }
        else {
            index_2 = 3;
            index_3 = 2;
        }
        const box = [
            points[index_1],
            points[index_2],
            points[index_3],
            points[index_4]
        ];
        const side = Math.min(bounding_box.size.height, bounding_box.size.width);
        mat.delete();
        return {
            points: box,
            side
        };
    }

    function box_score_fast(bitmap, _box) {
        const h = height;
        const w = width;
        const box = JSON.parse(JSON.stringify(_box));
        const x = [];
        const y = [];
        box.forEach(item => {
            x.push(item[0]);
            y.push(item[1]);
        });
        // clip limits a value to the range [a_min, a_max]: values above a_max become a_max, values below a_min become a_min.
        const xmin = clip(Math.floor(Math.min(...x)), 0, w - 1);
        const xmax = clip(Math.ceil(Math.max(...x)), 0, w - 1);
        const ymin = clip(Math.floor(Math.min(...y)), 0, h - 1);
        const ymax = clip(Math.ceil(Math.max(...y)), 0, h - 1);
        // eslint-disable-next-line new-cap
        const mask = new CV.Mat.zeros(ymax - ymin + 1, xmax - xmin + 1, CV.CV_8UC1);
        box.forEach(item => {
            item[0] = Math.max(item[0] - xmin, 0);
            item[1] = Math.max(item[1] - ymin, 0);
        });
        const npts = 4;
        // CV_32SC2 holds 32-bit integers; a Uint8Array would wrap coordinates above 255
        const point_data = new Int32Array(box.flat());
        const points = CV.matFromArray(npts, 1, CV.CV_32SC2, point_data);
        const pts = new CV.MatVector();
        pts.push_back(points);
        const color = new CV.Scalar(255);
        // Fill the polygon(s)
        CV.fillPoly(mask, pts, color, 1);
        const sliceArr = [];
        for (let i = ymin; i < ymax + 1; i++) {
            sliceArr.push(...bitmap.slice(960 * i + xmin, 960 * i + xmax + 1));
        }
        const mean = num_mean(sliceArr, mask.data);
        mask.delete();
        points.delete();
        pts.delete();
        return mean;
    }

    function clip(data, min, max) {
        return data < min ? min : data > max ? max : data;
    }

    function unclip(box) {
        const unclip_ratio = 1.6;
        const area = Math.abs(Polygon.polygonArea(box));
        const length = Polygon.polygonLength(box);
        const distance = area * unclip_ratio / length;
        const tmpArr = [];
        box.forEach(item => {
            const obj = {
                X: 0,
                Y: 0
            };
            obj.X = item[0];
            obj.Y = item[1];
            tmpArr.push(obj);
        });
        const offset = new clipper.ClipperOffset();
        offset.AddPath(tmpArr, clipper.JoinType.jtRound, clipper.EndType.etClosedPolygon);
        const expanded = [];
        offset.Execute(expanded, distance);
        let expandedArr = [];
        expanded[0] && expanded[0].forEach(item => {
            expandedArr.push([item.X, item.Y]);
        });
        expandedArr = [].concat(...expandedArr);
        return expandedArr;
    }

    function num_mean(data, mask) {
        let sum = 0;
        let length = 0;
        for (let i = 0; i < data.length; i++) {
            if (mask[i]) {
                sum = plus(sum, data[i]);
                length++;
            }
        }
        return divide(sum, length);
    }

    // eslint-disable-next-line new-cap
    const src = new CV.matFromArray(960, 960, CV.CV_8UC1, segmentation);
    const contours = new CV.MatVector();
    const hierarchy = new CV.Mat();
    // Find the contours
    CV.findContours(src, contours, hierarchy, CV.RETR_LIST, CV.CHAIN_APPROX_SIMPLE);
    const num_contours = Math.min(contours.size(), max_candidates);
    const boxes = [];
    const scores = [];
    const arr = [];
    for (let i = 0; i < num_contours; i++) {
        const contour = contours.get(i);
        let {
            points,
            side
        } = get_mini_boxes(contour);
        if (side < min_size) {
            continue;
        }
        const score = box_score_fast(pred, points);
        if (box_thresh > score) {
            continue;
        }
        let box = unclip(points);
        // eslint-disable-next-line new-cap
        const boxMap = new CV.matFromArray(box.length / 2, 1, CV.CV_32SC2, box);
        const resultObj = get_mini_boxes(boxMap);
        box = resultObj.points;
        side = resultObj.side;
        if (side < min_size + 2) {
            continue;
        }
        box.forEach(item => {
            item[0] = clip(Math.round(item[0]), 0, 960);
            item[1] = clip(Math.round(item[1]), 0, 960);
        });
        boxes.push(box);
        scores.push(score);
        arr.push(i);
        boxMap.delete();
    }
    src.delete();
    contours.delete();
    hierarchy.delete();
    return {
        boxes,
        scores
    };
};

const sorted_boxes = (box) => {
    function sortNumber(a, b) {
        return a[0][1] - b[0][1];
    }

    const boxes = box.sort(sortNumber);
    const num_boxes = boxes.length;
    for (let i = 0; i < num_boxes - 1; i++) {
        if (Math.abs(boxes[i + 1][0][1] - boxes[i][0][1]) < 10
            && boxes[i + 1][0][0] < boxes[i][0][0]) {
            const tmp = boxes[i];
            boxes[i] = boxes[i + 1];
            boxes[i + 1] = tmp;
        }
    }
    return boxes;
}

function flatten(arr) {
    return arr.toString().split(',').map(item => +item);
}

function int(num) {
    return num > 0 ? Math.floor(num) : Math.ceil(num);
}

function clip(data, min, max) {
    return data < min ? min : data > max ? max : data;
}

function get_rotate_crop_image(img, points) {
    const img_crop_width = int(Math.max(
        linalg_norm(points[0], points[1]),
        linalg_norm(points[2], points[3])
    ));
    const img_crop_height = int(Math.max(
        linalg_norm(points[0], points[3]),
        linalg_norm(points[1], points[2])
    ));
    const pts_std = [
        [0, 0],
        [img_crop_width, 0],
        [img_crop_width, img_crop_height],
        [0, img_crop_height]
    ];
    const srcTri = CV.matFromArray(4, 1, CV.CV_32FC2, flatten(points));
    const dstTri = CV.matFromArray(4, 1, CV.CV_32FC2, flatten(pts_std));
    // Compute the perspective transform matrix
    const M = CV.getPerspectiveTransform(srcTri, dstTri);
    const src = CV.imread(img);
    const dst = new CV.Mat();
    const dsize = new CV.Size(img_crop_width, img_crop_height);
    // Apply the perspective transform
    CV.warpPerspective(src, dst, M, dsize, CV.INTER_CUBIC, CV.BORDER_REPLICATE, new CV.Scalar());

    const dst_img_height = dst.rows;
    const dst_img_width = dst.cols;
    let dst_rot;
    // Rotate the image when it is much taller than it is wide
    if (dst_img_height / dst_img_width >= 1.5) {
        dst_rot = new CV.Mat();
        const dsize_rot = new CV.Size(dst.rows, dst.cols);
        const center = new CV.Point(dst.cols / 2, dst.cols / 2);
        const M = CV.getRotationMatrix2D(center, 90, 1);
        CV.warpAffine(dst, dst_rot, M, dsize_rot, CV.INTER_CUBIC, CV.BORDER_REPLICATE, new CV.Scalar());
    }

    const dst_resize = new CV.Mat();
    const dsize_resize = new CV.Size(0, 0);
    let scale;
    if (dst_rot) {
        scale = RECHEIGHT / dst_rot.rows;
        CV.resize(dst_rot, dst_resize, dsize_resize, scale, scale, CV.INTER_AREA);
        dst_rot.delete();
    }
    else {
        scale = RECHEIGHT / dst_img_height;
        CV.resize(dst, dst_resize, dsize_resize, scale, scale, CV.INTER_AREA);
    }

    canvas_det.width = dst_resize.cols;
    canvas_det.height = dst_resize.rows;
    canvas_det.getContext('2d').clearRect(0, 0, canvas_det.width, canvas_det.height);
    CV.imshow(canvas_det, dst_resize);

    src.delete();
    dst.delete();
    dst_resize.delete();
    srcTri.delete();
    dstTri.delete();
}

function linalg_norm(x, y) {
    return Math.sqrt(Math.pow(x[0] - y[0], 2) + Math.pow(x[1] - y[1], 2));
}

function resize_norm_img_splice(
    image,
    origin_width,
    origin_height,
    index = 0
) {
    canvas_rec.width = RECWIDTH;
    canvas_rec.height = RECHEIGHT;
    const ctx = canvas_rec.getContext('2d');
    ctx.fillStyle = '#fff';
    ctx.clearRect(0, 0, canvas_rec.width, canvas_rec.height);
    // ctx.drawImage(image, -index * RECWIDTH, 0, origin_width, origin_height);
    ctx.putImageData(image, -index * RECWIDTH, 0);
}

// Declare the detection and recognition Runners; they are not initialized yet
let detectRunner;
let recRunner;

Page({
    data: {
        photo_src:'',
        imgList: imgList,
        imgInfo: {},
        result: '',
        select_mode: false,
        loaded: false
    },
    switch_choose(){
        this.setData({
            select_mode: true
        })
    },
    switch_example(){
        this.setData({
            select_mode: false
        })
    },
    chose_photo:function(evt){
        let _this = this
        wx.chooseImage({
            count: 1,
            sizeType: ['original', 'compressed'],
            sourceType: ['album', 'camera'],
            success(res) {
                console.log(res.tempFilePaths) // An array; each element is an "http://..." image path
                _this.setData({
                    photo_src: res.tempFilePaths[0]
                })
            }
        })
    },
    reselect:function(evt){
        let _this = this
        wx.chooseImage({
            count: 1,
            sizeType: ['original', 'compressed'],
            sourceType: ['album', 'camera'],
            success(res) {
                _this.setData({
                    photo_src: res.tempFilePaths[0]
                })
            }
        })
    },
    photo_preview:function(evt){
        let _this = this;
        let imgs = [];
        imgs.push(_this.data.photo_src);
        wx.previewImage({
            urls:imgs
        })
    },

    predect_choose_img() {
        console.log(this.data.photo_src)
        this.getImageInfo(this.data.photo_src);
    },

    onLoad() {
        enableBoundaryChecking(false);
        // Bind the canvases; this is asynchronous, so it is best to add a delay to make sure binding has finished before later use
        wx.createSelectorQuery()
            .select('#canvas_det')
            .fields({ node: true, size: true })
            .exec(async(res) => {
                canvas_det = res[0].node;
            });

        wx.createSelectorQuery()
            .select('#canvas_rec')
            .fields({ node: true, size: true })
            .exec(async(res) => {
                canvas_rec = res[0].node;
            });

        wx.createSelectorQuery()
            .select('#myCanvas')
            .fields({ node: true, size: true })
            .exec((res) => {
                my_canvas = res[0].node;
                my_canvas_ctx = my_canvas.getContext('2d');
            });

        const me = this;
        // Initialize the Runners
        detectRunner = new paddlejs.Runner({
            modelPath: 'https://paddleocr.bj.bcebos.com/PaddleJS/PP-OCRv3/ch/ch_PP-OCRv3_det_infer_js_960/model.json',
            mean: [0.485, 0.456, 0.406],
            std: [0.229, 0.224, 0.225],
            bgr: true,
            webglFeedProcess: true
        });
        recRunner = new paddlejs.Runner({
            modelPath: 'https://paddleocr.bj.bcebos.com/PaddleJS/PP-OCRv3/ch/ch_PP-OCRv3_rec_infer_js/model.json',
            fill: '#000',
            mean: [0.5, 0.5, 0.5],
            std: [0.5, 0.5, 0.5],
            bgr: true,
            webglFeedProcess: true
        });
        // Wait until all model data has finished loading
        Promise.all([detectRunner.init(), recRunner.init()]).then(_ => {
            me.setData({
                loaded: true
            });
        });

    },

    selectImage(event) {
        const imgPath = this.data.imgList[event.target.dataset.index];
        this.getImageInfo(imgPath);
    },

    getImageInfo(imgPath) {
        const me = this;
        wx.getImageInfo({
            src: imgPath,
            success: (imgInfo) => {
                const {
                    path,
                    width,
                    height
                } = imgInfo;
                const canvasPath = imgPath.includes('http') ? path : imgPath;

                let sw = 960;
                let sh = 960;
                let x = 0;
                let y = 0;

                if (height / width >= 1) {
                    sw = Math.round(sh * width / height);
                    x = Math.floor((960 - sw) / 2);
                }
                else {
                    sh = Math.round(sw * height / width);
                    y = Math.floor((960 - sh) / 2);
                }
                my_canvas.width = sw;
                my_canvas.height = sh;

                // Load the image into the canvas (WeChat canvas API)
                const image = my_canvas.createImage();
                image.src = canvasPath;
                image.onload = () => {
                    my_canvas_ctx.clearRect(0, 0, my_canvas.width, my_canvas.height);
                    my_canvas_ctx.drawImage(image, x, y, sw, sh);
                    const imageData = my_canvas_ctx.getImageData(0, 0, sw, sh);
                    // Start recognition
                    me.recognize({
                        data: imageData.data,
                        width: 960,
                        height: 960
                    }, {canvasPath, sw, sh, x, y});
                }
            }
        });
    },

    async recognize(res, img) {
        const me = this;
        // Corner points of the detected text boxes
        let points;
        await detectRunner.predict(res, function (detectRes) {
            points = outputBox(detectRes);
        });

        // Draw the text boxes
        me.drawCanvasPoints(img, points.boxes);

        // Sort so that the final output follows top-to-bottom order as closely as possible
        const boxes = sorted_boxes(points.boxes);

        const text_list = [];

        for (let i = 0; i < boxes.length; i++) {
            const tmp_box = JSON.parse(JSON.stringify(boxes[i]));
            // Render the image region for tmp_box onto canvas_det
            get_rotate_crop_image(res, tmp_box);
            // Check whether the cropped text image is wider than the recognition model's input width; if so, recognize it in several passes and concatenate the results
            const width_num = Math.ceil(canvas_det.width / RECWIDTH);

            let text_list_tmp = '';
            for (let j = 0; j < width_num; j++) {
                // Crop and splice based on the original width; anything beyond the given width is cut off, recognized in a later pass, and concatenated at the end
                resize_norm_img_splice(canvas_det.getContext('2d').getImageData(0, 0, canvas_det.width, canvas_det.height), canvas_det.width, canvas_det.height, j);

                const imgData = canvas_rec.getContext('2d').getImageData(0, 0, canvas_rec.width, canvas_rec.height);

                await recRunner.predict(imgData, function(output){
                    // Convert the output vector to indices, then to the corresponding characters
                    const text = recDecode(output);
                    text_list_tmp = text_list_tmp.concat(text.text);
                });
            }
            text_list.push(text_list_tmp);
        }
        me.setData({
            result: JSON.stringify(boxes) + JSON.stringify(text_list)
        });
    },

    drawCanvasPoints(img, points) {
        // Set the line style
        my_canvas_ctx.strokeStyle = 'blue';
        my_canvas_ctx.lineWidth = 5;

        // Draw the image first
        const image = my_canvas.createImage();
        image.src = img.canvasPath;
        image.onload = () => {
            // A 2D context has no width/height properties, so take the size from the canvas
            my_canvas_ctx.clearRect(0, 0, my_canvas.width, my_canvas.height);
            my_canvas_ctx.drawImage(image, img.x, img.y, img.sw, img.sh);
            // Draw the box outlines
            points.length && points.forEach(point => {
                my_canvas_ctx.beginPath();
                // Set the starting point of the path
                my_canvas_ctx.moveTo(point[0][0], point[0][1]);
                my_canvas_ctx.lineTo(point[1][0], point[1][1]);
                my_canvas_ctx.lineTo(point[2][0], point[2][1]);
                my_canvas_ctx.lineTo(point[3][0], point[3][1]);
                my_canvas_ctx.lineTo(point[0][0], point[0][1]);
                my_canvas_ctx.stroke();
                my_canvas_ctx.closePath();
            });
        }

    }
});

@@ -0,0 +1,2 @@

{
}

@@ -0,0 +1,56 @@

<view>
    <view>
        <button bindtap="switch_choose" wx:if="{{!select_mode}}">Switch to local image mode</button>
        <button bindtap="switch_example" wx:else>Switch to sample image mode</button>
    </view>
    <view class="photo_box" wx:if="{{select_mode}}">
        <view wx:if="{{photo_src != ''}}" class="photo_preview">
            <image src="{{photo_src}}" mode="aspectFit" bindtap="photo_preview"></image>
            <view class="reselect" bindtap="reselect">Reselect</view>
        </view>
        <view wx:else class="photo_text" bindtap="chose_photo">Tap to take a photo or upload a local image</view>
        <button bindtap="predect_choose_img">Detect</button>
    </view>
    <view wx:else>
        <text class="title">Tap an image to run prediction</text>
        <scroll-view class="imgWrapper" scroll-x="true">
            <image
                class="img {{selectedIndex == index ? 'selected' : ''}}"
                wx:for="{{imgList}}"
                wx:key="index"
                src="{{item}}"
                mode="aspectFit"
                bindtap="selectImage"
                data-index="{{index}}"
            ></image>
        </scroll-view>
    </view>
    <view class="img-view">
        <scroll-view class="imgWrapper" scroll-x="true" style="width: 960px; height: 960px;">
            <canvas
                id="myCanvas"
                type="2d"
                style="width: 960px; height: 960px;"
            ></canvas>
        </scroll-view>
        <scroll-view class="imgWrapper" scroll-x="true" style="width: 960px; height: 960px;">
            <canvas
                id="canvas_det"
                type="2d"
                style="width: 960px; height: 960px;"
            ></canvas>
        </scroll-view>
        <scroll-view class="imgWrapper" scroll-x="true" style="width: 960px; height: 960px;">
            <canvas
                id="canvas_rec"
                type="2d"
                style="width: 960px; height: 960px;"
            ></canvas>
        </scroll-view>
        <text class="result" wx:if="{{result}}" style="height: 300rpx;">Text box coordinates: {{result}}</text>
    </view>
</view>

<view class="mask" wx:if="{{!loaded}}">
    <text class="loading">loading…</text>
</view>

@@ -0,0 +1,78 @@

.photo_box{
    width: 750rpx;
    border: 1px solid #cccccc;
    box-sizing: border-box;
}
.photo_text{
    width: 100%;
    line-height: 500rpx;
    text-align: center;
}
.photo_preview image{
    width: 750rpx;
}
.photo_preview .reselect{
    width: 750rpx;
    height: 100rpx;
    background-color: #3F8EFF;
    text-align: center;
    line-height: 100rpx;
    border-top: 1px solid #cccccc;
}

text {
    display: block;
}

.title {
    margin-top: 10px;
    font-size: 16px;
    line-height: 32px;
    font-weight: bold;
}

.imgWrapper {
    margin: 10px 10px 0;
    white-space: nowrap;
}
.img {
    width: 960px;
    height: 960px;
    border: 1px solid #f1f1f1;
}

.result {
    margin-top: 5px;
}

.selected {
    border: 1px solid #999;
}

.select-btn {
    margin-top: 20px;
    width: 60%;
}

.mask {
    position: absolute;
    top: 0;
    left: 0;
    right: 0;
    bottom: 0;
    background-color: rgba(0, 0, 0, .7);
}

.loading {
    color: #fff;
    font-size: 20px;
    position: absolute;
    top: 50%;
    left: 50%;
    transform: translate(-50%, -50%);
}

.img-view {
    padding-bottom: 20px;
    border-bottom: 1px solid #f1f1f1;
}

File diff suppressed because it is too large


File diff suppressed because one or more lines are too long

@@ -0,0 +1,63 @@

import { character } from 'ppocr_keys_v1.js';

const ocr_character = character;
let preds_idx = [];
let preds_prob = [];

function init(preds) {
    preds_idx = [];
    preds_prob = [];
    // preds: [1, ?, 6625]
    const pred_len = 6625;
    for (let i = 0; i < preds.length; i += pred_len) {
        // slice() excludes its end index, so i + pred_len covers all pred_len classes
        const tmpArr = preds.slice(i, i + pred_len);
        const tmpMax = Math.max(...tmpArr);
        const tmpIdx = tmpArr.indexOf(tmpMax);
        preds_prob.push(tmpMax);
        preds_idx.push(tmpIdx);
    }
}

function get_ignored_tokens() {
    return [0];
}

function decode(text_index, text_prob, is_remove_duplicate = false) {
    const ignored_tokens = get_ignored_tokens();
    const char_list = [];
    const conf_list = [];
    for (let idx = 0; idx < text_index.length; idx++) {
        // includes() tests values; the `in` operator would test array indices instead
        if (ignored_tokens.includes(text_index[idx])) {
            continue;
        }
        if (is_remove_duplicate) {
            if (idx > 0 && text_index[idx - 1] === text_index[idx]) {
                continue;
            }
        }
        char_list.push(ocr_character[text_index[idx] - 1]);
        if (text_prob) {
            conf_list.push(text_prob[idx]);
        }
        else {
            conf_list.push(1);
        }
    }
    let text = '';
    let mean = 0;

    if (char_list.length) {
        text = char_list.join('');
        let sum = 0;
        conf_list.forEach(item => {
            sum += item;
        });
        mean = sum / conf_list.length;
    }
    return { text, mean };
}

export function recDecode(preds) {
    init(preds);
    return decode(preds_idx, preds_prob, true);
}
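The decoding in the file above follows the greedy CTC pattern: take the argmax of each time step, skip a step that repeats the previous one, and drop the blank token (index 0). A minimal, self-contained sketch of that idea follows. `ctcGreedyDecode`, its parameters, and the three-letter charset are hypothetical names chosen for illustration; they are not part of the demo or of Paddle.js.

```javascript
// Hypothetical sketch of greedy CTC decoding; not the demo's actual API.
// probs: flat array of length T * numClasses; class 0 is the CTC blank.
// charset: characters for classes 1..numClasses-1 (charset[k - 1] is class k).
function ctcGreedyDecode(probs, numClasses, charset) {
    const chars = [];
    let prev = -1;
    for (let t = 0; t * numClasses < probs.length; t++) {
        const start = t * numClasses;
        // Argmax over the classes of time step t
        let best = 0;
        for (let k = 1; k < numClasses; k++) {
            if (probs[start + k] > probs[start + best]) {
                best = k;
            }
        }
        // Keep a class only if it is not blank and differs from the previous step
        if (best !== 0 && best !== prev) {
            chars.push(charset[best - 1]);
        }
        prev = best;
    }
    return chars.join('');
}

const charset = ['a', 'b', 'c'];
// Per-step argmax sequence: a, a, blank, b, b, blank, b
const probs = [
    0.1, 0.8, 0.05, 0.05,
    0.1, 0.7, 0.1, 0.1,
    0.9, 0.05, 0.03, 0.02,
    0.1, 0.1, 0.7, 0.1,
    0.2, 0.1, 0.6, 0.1,
    0.8, 0.1, 0.05, 0.05,
    0.1, 0.1, 0.75, 0.05
];
console.log(ctcGreedyDecode(probs, 4, charset));
```

The blank between the two runs of `b` is what lets a genuinely doubled character survive the duplicate removal, which is why the comparison is made against the previous raw index rather than the previous emitted character.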

File diff suppressed because it is too large


Binary file not shown.

@@ -0,0 +1,58 @@

{
  "description": "Project configuration file. For details, see the documentation: https://developers.weixin.qq.com/miniprogram/dev/devtools/projectconfig.html",
  "packOptions": {
    "ignore": [],
    "include": []
  },
  "setting": {
    "urlCheck": false,
    "es6": true,
    "enhance": true,
    "postcss": true,
    "preloadBackgroundData": false,
    "minified": true,
    "newFeature": false,
    "coverView": true,
    "nodeModules": true,
    "autoAudits": false,
    "showShadowRootInWxmlPanel": true,
    "scopeDataCheck": false,
    "uglifyFileName": false,
    "checkInvalidKey": true,
    "checkSiteMap": true,
    "uploadWithSourceMap": true,
    "compileHotReLoad": false,
    "lazyloadPlaceholderEnable": false,
    "useMultiFrameRuntime": true,
    "useApiHook": true,
    "useApiHostProcess": true,
    "babelSetting": {
      "ignore": [],
      "disablePlugins": [],
      "outputPath": ""
    },
    "enableEngineNative": false,
    "useIsolateContext": true,
    "userConfirmedBundleSwitch": false,
    "packNpmManually": false,
    "packNpmRelationList": [],
    "minifyWXSS": true,
    "disableUseStrict": false,
    "minifyWXML": true,
    "showES6CompileOption": false,
    "useCompilerPlugins": false,
    "useStaticServer": true,
    "ignoreUploadUnusedFiles": false
  },
  "compileType": "miniprogram",
  "libVersion": "2.22.1",
  "appid": "wx78461a9c81d1234c",
  "projectname": "mobilenet",
  "simulatorType": "wechat",
  "simulatorPluginLibVersion": {},
  "condition": {},
  "editorSetting": {
    "tabIndent": "insertSpaces",
    "tabSize": 2
  }
}

@@ -0,0 +1,8 @@

{
  "projectname": "ocrXcx",
  "setting": {
    "compileHotReLoad": true
  },
  "description": "Private project configuration file. Fields in this file override the same fields in project.config.json. Project changes are synced to this file first. For details, see the documentation: https://developers.weixin.qq.com/miniprogram/dev/devtools/projectconfig.html",
  "libVersion": "2.23.4"
}

7

examples/application/js/mini_program/ocrXcx/sitemap.json

Normal file


@@ -0,0 +1,7 @@

{
  "desc": "For more information about this file, see the documentation: https://developers.weixin.qq.com/miniprogram/dev/framework/sitemap.html",
  "rules": [{
    "action": "allow",
    "page": "*"
  }]
}

12

examples/application/js/mini_program/ocrdetectXcx/app.js

Normal file


@@ -0,0 +1,12 @@

/* global wx, App */
import * as paddlejs from '@paddlejs/paddlejs-core';
import '@paddlejs/paddlejs-backend-webgl';
// eslint-disable-next-line no-undef
const plugin = requirePlugin('paddlejs-plugin');
plugin.register(paddlejs, wx);

App({
    globalData: {
        Paddlejs: paddlejs.Runner
    }
});

12

examples/application/js/mini_program/ocrdetectXcx/app.json

Normal file


@@ -0,0 +1,12 @@

{
  "pages": [
    "pages/index/index"
  ],
  "plugins": {
    "paddlejs-plugin": {
      "version": "2.0.1",
      "provider": "wx7138a7bb793608c3"
    }
  },
  "sitemapLocation": "sitemap.json"
}

33

examples/application/js/mini_program/ocrdetectXcx/package-lock.json

generated

Normal file


@@ -0,0 +1,33 @@

{
  "name": "paddlejs-demo",
  "version": "0.0.1",
  "lockfileVersion": 1,
  "requires": true,
  "dependencies": {
    "@paddlejs/paddlejs-backend-webgl": {
      "version": "1.2.0",
      "resolved": "https://registry.npmjs.org/@paddlejs/paddlejs-backend-webgl/-/paddlejs-backend-webgl-1.2.0.tgz",
      "integrity": "sha512-bKJKJkGldC3NPOuJyk+372z0XW1dd1D9lR0f9OHqWQboY0Mkah+gX+8tkerrNg+QjYz88IW0iJaRKB0jm+6d9g=="
    },
    "@paddlejs/paddlejs-core": {
      "version": "2.1.18",
      "resolved": "https://registry.npmjs.org/@paddlejs/paddlejs-core/-/paddlejs-core-2.1.18.tgz",
      "integrity": "sha512-QrXxwaHm4llp1sxbUq/oCCqlYx4ciXanBn/Lfq09UqR4zkYi5SptacQlIxgJ70HOO6RWIxjWN4liQckMwa2TkA=="
    },
    "d3-polygon": {
      "version": "2.0.0",
      "resolved": "https://registry.npmjs.org/d3-polygon/-/d3-polygon-2.0.0.tgz",
      "integrity": "sha512-MsexrCK38cTGermELs0cO1d79DcTsQRN7IWMJKczD/2kBjzNXxLUWP33qRF6VDpiLV/4EI4r6Gs0DAWQkE8pSQ=="
    },
    "js-clipper": {
      "version": "1.0.1",
      "resolved": "https://registry.npmjs.org/js-clipper/-/js-clipper-1.0.1.tgz",
      "integrity": "sha1-TWsHQ0pECOfBKeMiAc5m0hR07SE="
    },
    "number-precision": {
      "version": "1.5.2",
      "resolved": "https://registry.npmjs.org/number-precision/-/number-precision-1.5.2.tgz",
      "integrity": "sha512-q7C1ZW3FyjsJ+IpGB6ykX8OWWa5+6M+hEY0zXBlzq1Sq1IPY9GeI3CQ9b2i6CMIYoeSuFhop2Av/OhCxClXqag=="
    }
  }
}

@@ -0,0 +1,19 @@

{
  "name": "paddlejs-demo",
  "version": "0.0.1",
  "description": "",
  "main": "app.js",
  "dependencies": {
    "@paddlejs/paddlejs-backend-webgl": "^1.2.0",
    "@paddlejs/paddlejs-core": "^2.1.18",
    "d3-polygon": "2.0.0",
    "js-clipper": "1.0.1",
    "number-precision": "1.5.2"
  },
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC"
}

Binary file not shown.

|

After Width: | Height: | Size: 222 KiB |

@@ -0,0 +1,337 @@

/* global wx, Page */
import * as paddlejs from '@paddlejs/paddlejs-core';
import '@paddlejs/paddlejs-backend-webgl';
import clipper from 'js-clipper';
import { divide, enableBoundaryChecking, plus } from 'number-precision';
// eslint-disable-next-line no-undef
const plugin = requirePlugin('paddlejs-plugin');
const Polygon = require('d3-polygon');

global.wasm_url = 'pages/index/wasm/opencv_js.wasm.br';
const CV = require('./wasm/opencv.js');

plugin.register(paddlejs, wx);

const imgList = [
    'https://paddlejs.bj.bcebos.com/xcx/ocr.png',
    './img/width.png'
];

// eslint-disable-next-line max-lines-per-function
const outputBox = res => {
    const thresh = 0.3;
    const box_thresh = 0.5;
    const max_candidates = 1000;
    const min_size = 3;
    const width = 960;
    const height = 960;
    const pred = res;
    const segmentation = [];
    pred.forEach(item => {
        segmentation.push(item > thresh ? 255 : 0);
    });

    function get_mini_boxes(contour) {
        // Compute the minimum-area bounding rectangle
        const bounding_box = CV.minAreaRect(contour);
        const points = [];
        const mat = new CV.Mat();
        // Get the coordinates of the rectangle's four vertices
        CV.boxPoints(bounding_box, mat);
        for (let i = 0; i < mat.data32F.length; i += 2) {
            const arr = [];
            arr[0] = mat.data32F[i];
            arr[1] = mat.data32F[i + 1];
            points.push(arr);
        }

        function sortNumber(a, b) {
            return a[0] - b[0];
        }
        points.sort(sortNumber);
        let index_1 = 0;
        let index_2 = 1;
        let index_3 = 2;
        let index_4 = 3;
        if (points[1][1] > points[0][1]) {
            index_1 = 0;
            index_4 = 1;
        }
        else {
            index_1 = 1;
            index_4 = 0;
        }

        if (points[3][1] > points[2][1]) {
            index_2 = 2;
            index_3 = 3;
        }
        else {
            index_2 = 3;
            index_3 = 2;
        }
        const box = [
            points[index_1],
            points[index_2],
            points[index_3],
            points[index_4]
        ];
        const side = Math.min(bounding_box.size.height, bounding_box.size.width);
        mat.delete();
        return {
            points: box,
            side
        };
    }

    function box_score_fast(bitmap, _box) {
        const h = height;
        const w = width;
        const box = JSON.parse(JSON.stringify(_box));
        const x = [];
        const y = [];
        box.forEach(item => {
            x.push(item[0]);
            y.push(item[1]);
        });
        // clip limits a value to the range [min, max]: values above max become max, values below min become min
        const xmin = clip(Math.floor(Math.min(...x)), 0, w - 1);
        const xmax = clip(Math.ceil(Math.max(...x)), 0, w - 1);
        const ymin = clip(Math.floor(Math.min(...y)), 0, h - 1);
        const ymax = clip(Math.ceil(Math.max(...y)), 0, h - 1);
        // eslint-disable-next-line new-cap
        const mask = new CV.Mat.zeros(ymax - ymin + 1, xmax - xmin + 1, CV.CV_8UC1);
        box.forEach(item => {
            item[0] = Math.max(item[0] - xmin, 0);
            item[1] = Math.max(item[1] - ymin, 0);
        });
        const npts = 4;
        // CV_32SC2 holds 32-bit integers; a Uint8Array would wrap coordinates above 255
        const point_data = new Int32Array(box.flat());
        const points = CV.matFromArray(npts, 1, CV.CV_32SC2, point_data);
        const pts = new CV.MatVector();
        pts.push_back(points);
        const color = new CV.Scalar(255);
        // Fill the polygon(s)
        CV.fillPoly(mask, pts, color, 1);
        const sliceArr = [];
        for (let i = ymin; i < ymax + 1; i++) {
            sliceArr.push(...bitmap.slice(960 * i + xmin, 960 * i + xmax + 1));
        }
        const mean = num_mean(sliceArr, mask.data);
        mask.delete();
        points.delete();
        pts.delete();
        return mean;
    }

    function clip(data, min, max) {
        return data < min ? min : data > max ? max : data;
    }

    function unclip(box) {
        const unclip_ratio = 1.6;
        const area = Math.abs(Polygon.polygonArea(box));
        const length = Polygon.polygonLength(box);
        const distance = area * unclip_ratio / length;
        const tmpArr = [];
        box.forEach(item => {
            const obj = {
                X: 0,
                Y: 0
            };
            obj.X = item[0];
            obj.Y = item[1];
            tmpArr.push(obj);
        });
        const offset = new clipper.ClipperOffset();
        offset.AddPath(tmpArr, clipper.JoinType.jtRound, clipper.EndType.etClosedPolygon);
        const expanded = [];
        offset.Execute(expanded, distance);
        let expandedArr = [];
        expanded[0] && expanded[0].forEach(item => {
            expandedArr.push([item.X, item.Y]);
        });
        expandedArr = [].concat(...expandedArr);
        return expandedArr;
    }

    function num_mean(data, mask) {
        let sum = 0;
        let length = 0;
        for (let i = 0; i < data.length; i++) {
            if (mask[i]) {
                sum = plus(sum, data[i]);
                length++;
            }
        }
        return divide(sum, length);
    }

    // eslint-disable-next-line new-cap
    const src = new CV.matFromArray(960, 960, CV.CV_8UC1, segmentation);
    const contours = new CV.MatVector();
    const hierarchy = new CV.Mat();
    // Find the contours
    CV.findContours(src, contours, hierarchy, CV.RETR_LIST, CV.CHAIN_APPROX_SIMPLE);
    const num_contours = Math.min(contours.size(), max_candidates);
    const boxes = [];
    const scores = [];
    const arr = [];
    for (let i = 0; i < num_contours; i++) {
        const contour = contours.get(i);
        let {
            points,
            side
        } = get_mini_boxes(contour);
        if (side < min_size) {
            continue;
        }
        const score = box_score_fast(pred, points);
        if (box_thresh > score) {
            continue;
        }
        let box = unclip(points);
        // eslint-disable-next-line new-cap
        const boxMap = new CV.matFromArray(box.length / 2, 1, CV.CV_32SC2, box);
        const resultObj = get_mini_boxes(boxMap);
        box = resultObj.points;
        side = resultObj.side;
        if (side < min_size + 2) {
            continue;
        }
        box.forEach(item => {
            item[0] = clip(Math.round(item[0]), 0, 960);
            item[1] = clip(Math.round(item[1]), 0, 960);
        });
        boxes.push(box);
        scores.push(score);
        arr.push(i);
        boxMap.delete();
    }
    src.delete();
    contours.delete();
    hierarchy.delete();
    return {
        boxes,
        scores
    };
};
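The `unclip` step in `outputBox` grows each detected box outward by `distance = area * unclip_ratio / length`, where `length` is the polygon's perimeter, before a rectangle is refitted. A rough sketch of that arithmetic alone, without `d3-polygon` or `js-clipper`, is given below. `polygonArea`, `polygonPerimeter`, and `unclipDistance` are hypothetical helpers written for illustration and are not part of the demo.

```javascript
// Hypothetical helpers illustrating the offset distance used by unclip(); not part of the demo.

// Shoelace formula: absolute area of a polygon given as [[x, y], ...]
function polygonArea(points) {
    let twice = 0;
    for (let i = 0; i < points.length; i++) {
        const [x1, y1] = points[i];
        const [x2, y2] = points[(i + 1) % points.length];
        twice += x1 * y2 - x2 * y1;
    }
    return Math.abs(twice) / 2;
}

// Perimeter of the closed polygon, including the edge from the last point back to the first
function polygonPerimeter(points) {
    let total = 0;
    for (let i = 0; i < points.length; i++) {
        const [x1, y1] = points[i];
        const [x2, y2] = points[(i + 1) % points.length];
        total += Math.hypot(x2 - x1, y2 - y1);
    }
    return total;
}

// Offset distance by which the box is expanded
function unclipDistance(points, unclipRatio = 1.6) {
    return polygonArea(points) * unclipRatio / polygonPerimeter(points);
}

// A 100 x 20 text box: area 2000, perimeter 240
const box = [[0, 0], [100, 0], [100, 20], [0, 20]];
console.log(unclipDistance(box));
```

Because the distance scales with area divided by perimeter, long thin text lines receive a smaller margin than compact blocks of the same area.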

let detectRunner;

Page({
    data: {
        imgList: imgList,
        imgInfo: {},
        result: '',
        loaded: false
    },

    onLoad() {
        enableBoundaryChecking(false);
        const me = this;
        detectRunner = new paddlejs.Runner({
            modelPath: 'https://paddleocr.bj.bcebos.com/PaddleJS/PP-OCRv3/ch/ch_PP-OCRv3_det_infer_js_960/model.json',
            mean: [0.485, 0.456, 0.406],
            std: [0.229, 0.224, 0.225],
            bgr: true,
            webglFeedProcess: true
        });
        detectRunner.init().then(_ => {
            me.setData({
                loaded: true
            });
        });
    },

    selectImage(event) {
        const imgPath = this.data.imgList[event.target.dataset.index];
        this.getImageInfo(imgPath);
    },

    getImageInfo(imgPath) {
        const me = this;
        wx.getImageInfo({
            src: imgPath,
            success: imgInfo => {
                const {
                    path,
                    width,
                    height
                } = imgInfo;

                const canvasPath = imgPath.includes('http') ? path : imgPath;
                const canvasId = 'myCanvas';
                const ctx = wx.createCanvasContext(canvasId);
                let sw = 960;
                let sh = 960;
                let x = 0;
                let y = 0;
                if (height / width >= 1) {
                    sw = Math.round(sh * width / height);
                    x = Math.floor((960 - sw) / 2);
                }
                else {
                    sh = Math.round(sw * height / width);
                    y = Math.floor((960 - sh) / 2);
                }
                ctx.drawImage(canvasPath, x, y, sw, sh);
                ctx.draw(false, () => {
                    // Get the image data (API 1.9.0)
                    wx.canvasGetImageData({
                        canvasId: canvasId,
                        x: 0,
                        y: 0,
                        width: 960,
                        height: 960,
                        success(res) {
                            me.predict({
                                data: res.data,
                                width: 960,
                                height: 960
                            }, {
                                canvasPath,
                                sw,
                                sh,
                                x,
                                y
                            });
                        }
                    });
                });
            }
        });
    },

    predict(res, img) {
        const me = this;
        detectRunner.predict(res, function (data) {
            // Get the box coordinates
            const points = outputBox(data);
            me.drawCanvasPoints(img, points.boxes);
            me.setData({
                result: JSON.stringify(points.boxes)
            });
        });